Deep learning researchers are hitting the books.

By building AI tools to transcribe historical texts in antiquated scripts letter by letter, they’re creating an invaluable resource for researchers who study centuries-old documents.

Many old documents have been digitized as scans or photographs of physical pages. But while obsolete scripts like Greek minuscule or German Fraktur may be readable by experts, the text on these scanned pages is neither legible to a broad audience nor searchable by computers.

Hiring transcribers to turn manuscripts into typed text is a lengthy and expensive process. So developers have built digital tools for optical character recognition, the process of converting printed or written characters into machine-readable form.

And deep learning dramatically increases the accuracy of these tools.

Humanities researchers can use these AI-parsed texts to search for specific words in a book, see how a popular narrative changed over time, analyze the evolution of a language, or trace an individual’s background with census and business records.

Another benefit of this research: images of text make an ideal test ground for deep learning networks learning to recognize objects. That’s because, unlike identifying images of animals or the various elements in street scenes, there’s only one correct answer when determining whether a printed letter reads “c” or “o.”

“If you point your deep learning model at a photograph of a dog, it’s not clear what the right answer is,” said NVIDIA researcher Thomas Breuel. “It could be ‘dog’ or ‘animal’ or ‘short-haired corgi.’ For printed texts, it’s crystal clear — we know when it’s right and when it’s wrong.”

Getting on the Same Page

Breuel has been using deep learning to analyze historical texts since 2004 — leading the first research group to do so with LSTMs, a kind of recurrent neural network.

“That was really a breakthrough in terms of recognition rates and error rates,” said Breuel, who at the time was a professor at the University of Kaiserslautern in Germany.

As the birthplace of the Gutenberg printing press, Germany was a natural place to look at historical document data, Breuel says. The invention led to the spread of printing presses across Europe during the Renaissance.

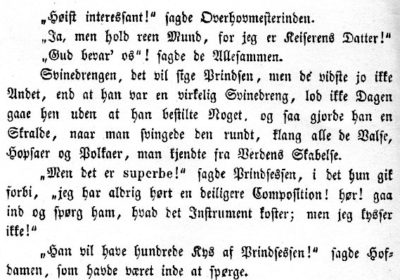

Many German printed texts from the 16th to early 20th centuries are written in an artistic script called Fraktur (pictured). “It’s a script that people can’t read anymore,” Breuel said.

In 2007, his team developed an open-source system called OCRopus (the “OCR” stands for optical character recognition) to digitally transcribe Fraktur texts.

Breuel’s latest iteration of the software, ocropus3, is available on GitHub. Its error rate is just 0.1 percent on Latin scripts. By using training data for other languages and scripts, researchers have used OCRopus on Latin, Greek and Sanskrit texts.

For another German researcher, Uwe Springmann, finding OCRopus transformed his digital humanities research. The character recognition rate for the 15th to 18th century Latin and German printings he was working with jumped from 85 percent to 98 percent.

“That’s not just incremental progress,” he said. “That was a huge step forward.”
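Recognition rates like these are commonly computed by comparing the OCR output with a hand-made reference transcription and counting the character edits needed to turn one into the other. The article does not say how Springmann measured his figures, so the following is only a minimal sketch of that common approach. The function names and the sample strings are illustrative assumptions, not part of OCRopus or Calamari.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: fewest single-character insertions,
    deletions and substitutions that turn hypothesis into reference."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_char != hyp_char)
            insertion = current[j - 1] + 1
            deletion = previous[j] + 1
            current.append(min(substitution, insertion, deletion))
        previous = current
    return previous[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """One minus the character error rate, relative to the reference length."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return 1.0 - edit_distance(reference, hypothesis) / len(reference)


# Hypothetical example: the OCR engine misreads one letter out of seven.
reference = "Fraktur"
hypothesis = "Fraktnr"
print(f"character accuracy: {character_accuracy(reference, hypothesis):.3f}")
```

On this measure, moving from 85 percent to 98 percent means going from roughly one wrong character in seven to one in fifty.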

Springmann and his frequent co-author Christian Reul now work with NVIDIA GPUs and an open-source deep learning OCR engine called Calamari, which combines LSTMs and convolutional neural networks.

Using GPUs led to a 10x speedup for training and inference, says Reul, acting director of the digitization unit at the University of Würzburg’s Center for Philology and Digitality.

The Printed Page

Years ago, historians digitized millions of pages as scanned images, “but transcribing everything was just impossible,” said Marcus Liwicki, machine learning professor at Sweden’s Luleå University of Technology.

With deep learning-powered OCR tools, a scholar interested in a specific political figure can now query machine-readable versions of historical texts and find every mention of that person.
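Once pages exist as plain text, that kind of query can be very simple. The sketch below assumes the transcriptions are held as a mapping from page number to text. That layout, the function name and the sample sentences are all hypothetical, chosen only to illustrate the idea.

```python
import re


def find_mentions(pages: dict[int, str], name: str) -> list[tuple[int, int]]:
    """Return (page number, character offset) for every whole-word,
    case-insensitive occurrence of name in the transcribed pages."""
    pattern = re.compile(r"\b" + re.escape(name) + r"\b", re.IGNORECASE)
    mentions = []
    for page_number, text in sorted(pages.items()):
        for match in pattern.finditer(text):
            mentions.append((page_number, match.start()))
    return mentions


# Hypothetical transcribed pages.
pages = {
    1: "Martin Luther arrived in Worms in April.",
    2: "The council met without him.",
    3: "Letters from Luther reached Wittenberg; Luther replied at once.",
}
for page_number, offset in find_mentions(pages, "Luther"):
    print(f"page {page_number}, offset {offset}")
```

A real collection would also have to cope with historical spelling variants and residual OCR errors, which an exact match like this one does not handle.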

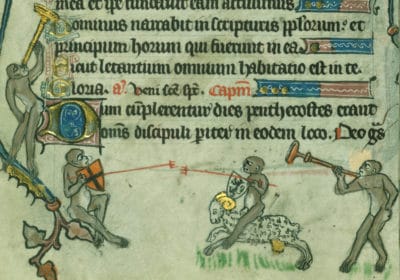

But many documents have more than printed words on them — like artwork, marginalia or watermarks. Liwicki is building deep learning tools to analyze these features of historical texts.

“The entire process of digital humanities research was transformed by GPUs,” he says.

His project, called HisDoc, uses neural networks to identify high-level features of a document, like the time period of publication and the font used, and to analyze individual pages to determine which areas contain text and which contain images.

Using a cluster of NVIDIA GPUs, Liwicki trained a neural network on a database of 80,000 watermarks. Scholars are interested in documents with matching watermarks because a match hints that the texts were produced in the same region and time period.

Beyond Printed Documents

Historical records weren’t just created by printing presses — many writings of interest to scholars were created by hand. This makes them trickier to read with a machine because writers often used abbreviations, a person’s handwriting varies across a page, and handwritten words may not fall in perfect horizontal lines like a printed text.

Here, too, neural networks are being used as effective transcription tools.

Paolo Merialdo, Donatella Firmani and Elena Nieddu, researchers from Italy’s Roma Tre University, are using deep learning to transcribe 12th century papal correspondence from the Vatican Secret Archives, the largest historical archive in the world.

Using an NVIDIA Quadro GPU and convolutional neural networks, they developed a system that recognizes handwritten characters with 96 percent accuracy and determines the most likely transcription for each word based on a Latin language model.

Student researchers at Ukraine’s Igor Sikorsky Kyiv Polytechnic Institute are going a step further, developing a neural network to interpret medieval graffiti carved into the stone walls of Kiev’s St. Sophia Cathedral.

Scholars have been debating the interpretations of some of the inscriptions, said paper co-author Yuri Gordienko. Powered by NVIDIA GPUs, the team’s deep learning model achieved 99 percent accuracy in recognizing individual characters.

Document analysis is an active research field, with work presented at dedicated conferences as well as at major computer vision and machine learning gatherings like CVPR and NeurIPS.