AI is spreading from agriculture to X-rays thanks to its uncanny ability to quickly infer smart choices based on mounds of data.

As the datasets and the neural networks analyzing them grow, users are increasingly turning to NVIDIA GPUs to accelerate AI inference.

To see inference at work, just look under the hood of widely used products from companies that are household names.

GE Research, for example, deploys AI models accelerated with GPUs in the aviation, healthcare, power and transportation industries. The models automate the inspection of factories, enable smart trains, monitor power stations and interpret medical images.

GE runs these AI models in data center servers on NVIDIA DGX systems with V100 Tensor Core GPUs and in edge-computing networks with Jetson AGX Xavier modules. The hardware runs NVIDIA’s TensorRT inference engine and its CUDA/cuDNN acceleration libraries for deep learning as well as the NVIDIA JetPack tool kit for Jetson modules.

Video Apps, Contracts Embrace Inference

In the consumer market, two of the world’s most popular mobile video apps run AI inference on NVIDIA GPUs.

TikTok and its forerunner in China, Douyin, together hit 1 billion downloads globally in February 2019. The developer and host of the apps, ByteDance, handles a staggering 50 million new video uploads a day for 400 million daily active users.

ByteDance runs TensorRT on thousands of NVIDIA T4 and P4 GPU servers so users can search and get recommendations about cool videos to watch. The company estimates it has saved millions of dollars using the NVIDIA products while slashing in half the latency of its online services.

In business, Deloitte uses AI inference in its dTrax software to help companies manage complex contracts. For instance, dTrax can find and update key passages in lengthy agreements when regulations change or when companies are planning a big acquisition.

Several companies around the world use dTrax today. The software — running on NVIDIA DGX-1 systems in data centers and AWS P3 instances in the cloud — won a smart-business award from the Financial Times in 2019.

Inference Runs 2-10x Faster on GPUs

Inference jobs on average-size models run twice as fast on GPUs as on CPUs, and on large models such as RoBERTa they run 10x faster, according to tests done by Square, a financial services company.

That’s why NVIDIA GPUs are key to Square’s goal of expanding its Square Assistant from a virtual scheduler into a conversational AI engine that drives all the company’s products.

BMW Group just announced it’s developing five new types of robots using the NVIDIA Isaac robotics platform to enhance logistics in its car manufacturing plants. One of the new bots, powered by NVIDIA Jetson AGX Xavier, delivers up to 32 trillion operations per second of performance for computer vision tasks such as perception, pose estimation and path planning.

AI inference is happening inside cars, too. China’s Xpeng unveiled in late April its P7 all-electric sports sedan, which uses the NVIDIA DRIVE AGX Xavier to help deliver level 3 automated driving features by running inference on data from a suite of sensors.

Medical experts from around the world gave dozens of talks at GTC 2020 about the use of AI in radiology, genomics, microscopy and other healthcare fields. In one talk, Geraldine McGinty, chair of the American College of Radiology, called AI a “once-in-a-generation opportunity” to improve the quality of care while driving down costs.

Down on the farm, a growing crop of startups is using AI to increase efficiency. For example, Rabbit Tractors, an NVIDIA Inception program member, uses Jetson Nano modules on multifunction robots that infer from camera and lidar data how to make their way along a row they need to seed, spray or harvest.

The list of companies with use cases for GPU-accelerated inference goes on. It includes fraud detection at American Express, industrial inspection for P&G and search engines for web giants.

Inference Gets Up to 7x Gains on A100

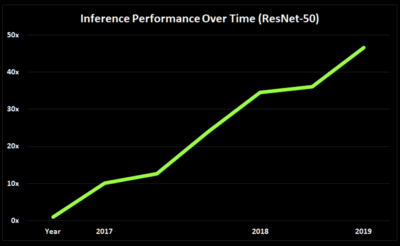

The potential for inference on GPUs is headed up and to the right.

The NVIDIA Ampere architecture gives inference up to a 7x speedup thanks to the multi-instance GPU feature, which can partition a single A100 into as many as seven independent GPU instances. Support in the A100 GPUs for a new approach to sparsity in deep neural networks promises even further gains. It’s one of several new features of the architecture discussed in a technical overview of the A100 GPUs.

There are plenty of resources to discover where inference could go next and how to get started on the journey.

A webinar describes in more detail the potential for inference on the A100. Tutorials, more customer stories and a white paper on NVIDIA’s Triton Inference Server for deploying AI models at scale can all be found on a page dedicated to NVIDIA’s inference platform.

Users can find an inference runtime, optimization tools and code samples on the NVIDIA TensorRT page. Pre-trained models and containers that package up the code needed to get started are in the NGC software catalog.