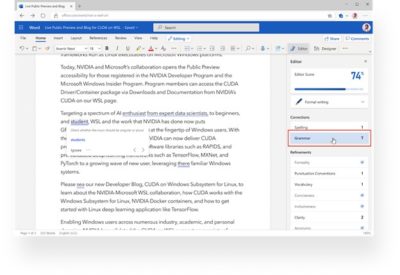

It’s been said that good writing comes from editing. Fortunately for discerning readers everywhere, Microsoft is putting an AI-powered grammar editor at the fingertips of millions of people.

Like any good editor, it’s quick and knowledgeable. That’s because Microsoft Editor’s grammar refinements in Microsoft Word for the web can now tap into NVIDIA Triton Inference Server, ONNX Runtime and Microsoft Azure Machine Learning, part of Azure AI, to deliver this experience.

NVIDIA CEO Jensen Huang announced the news during his keynote at the digital GPU Technology Conference on October 5.

Everyday AI in Office

Microsoft is on a mission to wow users of Office productivity apps with the magic of AI. New, time-saving experiences will include real-time grammar suggestions, question answering within documents (think Bing search for documents that goes beyond “exact match”) and predictive text to help complete sentences.

Such productivity-boosting experiences are only possible with deep learning and neural networks. For example, unlike services built on traditional rules-based logic, Editor in Word for the web can understand the context of a sentence when correcting grammar and suggest the appropriate word choices.

These deep learning models, which can have hundreds of millions of parameters, must scale and deliver real-time inference to provide a good user experience. Microsoft Editor’s grammar-checking model in Word for the web alone is expected to handle more than 500 billion queries a year.

Deployment at this scale could blow up deep learning budgets. Thankfully, NVIDIA Triton’s dynamic batching and concurrent model execution features, accessible through Azure Machine Learning, slashed the cost by about 70 percent and achieved a throughput of 450 queries per second on a single NVIDIA V100 Tensor Core GPU, with a response time under 200 milliseconds. Azure Machine Learning provided the required scale, along with model lifecycle management capabilities such as versioning and monitoring.
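The yearly query volume and the per-GPU throughput can be combined into a rough capacity estimate. The sketch below is a back-of-the-envelope calculation only: it assumes the 500 billion yearly queries arrive evenly over a 365-day year and that every GPU sustains the reported 450 queries per second, with no headroom for traffic peaks, redundancy or other overhead. The result is a lower bound, not a description of Microsoft’s actual deployment.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s; ignores leap years
QUERIES_PER_YEAR = 500e9               # "more than 500 billion queries a year"
QPS_PER_GPU = 450                      # reported throughput on one V100

def average_qps(queries_per_year: float) -> float:
    """Average request rate if traffic were perfectly uniform over the year."""
    return queries_per_year / SECONDS_PER_YEAR

def gpus_for_average_load(qps: float, qps_per_gpu: float) -> int:
    """Minimum whole number of GPUs needed to serve `qps` at the given per-GPU rate."""
    return math.ceil(qps / qps_per_gpu)

avg = average_qps(QUERIES_PER_YEAR)
print(f"average load: {avg:,.0f} queries/s")
print(f"V100s at average load: {gpus_for_average_load(avg, QPS_PER_GPU)}")
```

This prints an average load of about 15,855 queries per second, or at least 36 V100s. Because real traffic peaks well above its average, a production fleet sized for peaks would need more.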

High-Performance Inference with Triton on Azure Machine Learning

As machine learning models have grown in size, GPUs have become necessary for both training and deployment. For AI deployment in production, organizations are looking for scalable inference serving, support for multiple framework backends, optimal GPU and CPU utilization and machine learning lifecycle management.

The NVIDIA Triton and ONNX Runtime stack in Azure Machine Learning delivers scalable, high-performance inference. Azure Machine Learning customers can take advantage of Triton’s support for multiple frameworks, real-time, batch and streaming inference, dynamic batching and concurrent model execution.
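To illustrate what dynamic batching does, here is a deliberately simplified simulation in plain Python. It is not Triton’s implementation: the `Request` type and `dynamic_batches` function are hypothetical, and the two knobs only loosely mirror Triton’s per-model `max_batch_size` and `dynamic_batching` `max_queue_delay_microseconds` settings. The idea is that requests arriving close together share one model execution, trading a small, bounded wait for higher GPU throughput.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Request:
    request_id: int
    arrival_ms: float

def dynamic_batches(requests: List[Request], max_batch_size: int,
                    max_queue_delay_ms: float) -> List[List[int]]:
    """Group requests into batches the way a simple dynamic batcher might.

    A batch opens when its first request arrives. Requests arriving within
    max_queue_delay_ms of that moment join it, and the batch is dispatched
    early as soon as it holds max_batch_size requests. This only computes
    grouping; a real server would dispatch a partial batch on a timer when
    the delay expires instead of waiting for the next arrival.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    opened_at = 0.0
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        # Close the open batch if this request falls outside its delay window.
        if current and req.arrival_ms - opened_at > max_queue_delay_ms:
            batches.append(current)
            current = []
        if not current:
            opened_at = req.arrival_ms
        current.append(req.request_id)
        # Dispatch immediately once the batch is full.
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches

arrivals = [0, 1, 2, 3, 10, 11, 30]
reqs = [Request(i, float(t)) for i, t in enumerate(arrivals)]
print(dynamic_batches(reqs, max_batch_size=4, max_queue_delay_ms=5.0))
# → [[0, 1, 2, 3], [4, 5], [6]]
```

Here seven requests are served by three model executions instead of seven. A longer delay window packs batches more fully but adds waiting time to each request, which is why the window has to stay small relative to a latency budget such as the 200 milliseconds mentioned earlier.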

Writing with AI in Word

Author and poet Robert Graves was quoted as saying, “There is no good writing, only good rewriting.” In other words, write, and then edit and improve.

Editor in Word for the web lets you do both simultaneously. And while Editor is the first feature in Word to gain the speed and breadth of advances enabled by Triton and ONNX Runtime, it is likely just the start.

It’s not too late to get access to hundreds of live and on-demand talks at GTC. Register now through Oct. 9 using promo code CMB4KN to get 20 percent off.