Reflections have never looked so good.

Artists are using NVIDIA RTX GPUs to take real-time graphics to the next level, rendering surfaces and light reflections with incredibly photorealistic detail.

The Marbles RTX technology demo, first previewed at GTC in March, ran on a single NVIDIA Quadro RTX 8000 GPU. It showed how complex physics can be simulated in a real-time, ray-traced world.

During the GeForce RTX 30 Series launch event in September, NVIDIA CEO Jensen Huang unveiled a more challenging take on the NVIDIA Marbles RTX project: the scene was staged at night to illustrate the effect of hundreds of dynamic, animated lights.

Marbles at Night is a physics-based demo created with dynamic, ray-traced lights and over 100 million polygons. Built in NVIDIA Omniverse and running on a single GeForce RTX 3090 GPU, the final result featured hundreds of light sources at night, with each marble reflecting them differently, all in real time.

Beyond demonstrating the latest content-creation technologies, Marbles at Night showed how creative professionals can now collaborate seamlessly and design simulations with striking lighting, accurate reflections and real-time ray tracing and path tracing.

Pushing the Limits of Creativity

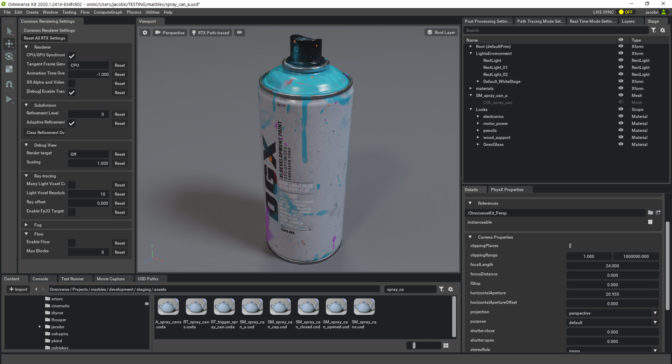

A team of NVIDIA artists collaborated to build the project in NVIDIA Omniverse, the real-time graphics and simulation platform based on NVIDIA RTX GPUs and Pixar's Universal Scene Description.

Working in Omniverse, the artists could upload, store and access all the assets in the cloud, which made it easy to share files across teams: they could send a link, open the file and work on the same assets simultaneously.

Every asset in Marbles at Night was handmade, modeled and textured from scratch. Marbles RTX Creative Director Gavriil Klimov bought over 200 art supplies and took reference photos of each to capture realistic details, from paint splatter to wear and tear. Texturing, the process of painting surface detail such as color, roughness and wear onto a 3D model, was done entirely in Substance Painter, with multiple variations for each asset.

In Omniverse, the artists hand-crafted everything in the Marbles project using the Omniverse RTX Renderer alongside creative applications such as 3ds Max, Maya, Cinema 4D, ZBrush and Blender. Because the platform let the team view all content at the highest possible quality in real time, review cycles were shorter and the artists could iterate more often.

Nearly a dozen people worked on the project remotely, from locations as far apart as California, New York, Australia and Russia. Despite the distance, Omniverse Nucleus allowed them to work on scenes simultaneously. Running on premises or in the cloud, Nucleus enabled the team to collaborate in real time across the globe.

This collaborative workflow, combined with the fact that the project's assets were stored in the cloud, made it easy for everyone to access the files and edit them in real time.

The final technology demo built in Omniverse comprised over 500GB of texture data, over 100 unique objects, more than 5,000 meshes and about 100 million polygons.

The Research Behind the Project

NVIDIA Research recently released a paper on reservoir-based spatiotemporal importance resampling (ReSTIR), a technique for rendering dynamic direct lighting and shadows from millions of area lights in real time. Inspired by this technique, the NVIDIA rendering team, led by Distinguished Engineer Ignacio Llamas, implemented an algorithm that let Klimov and his team place as many lights as they wanted in the Marbles demo, without being constrained by a light budget.
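ReSTIR builds on resampled importance sampling (RIS). The estimator can be sketched in standard notation, assuming M candidate lights x_1 through x_M drawn from a cheap source PDF p (for example, uniform over all lights), resampled toward a target function p̂ (for example, a light's unshadowed contribution), with f the full shaded contribution including visibility. These symbol choices are illustrative, not taken from the Marbles implementation.

```latex
\begin{aligned}
w_i &= \frac{\hat{p}(x_i)}{p(x_i)}, \qquad i = 1, \dots, M \\
\Pr[y = x_z] &= \frac{w_z}{\sum_{j=1}^{M} w_j} \\
\langle L \rangle_{\text{RIS}} &= \frac{f(y)}{\hat{p}(y)} \cdot \frac{1}{M} \sum_{j=1}^{M} w_j
\end{aligned}
```

A reservoir only needs to keep the selected sample y, the running weight sum and M, so candidates can be streamed one at a time, and reservoirs from neighboring pixels or earlier frames can be merged. That reuse is the "spatiotemporal" part of ReSTIR.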

“Before, we were limited to using less than 10 lights. But today with Omniverse capabilities using RTX, we were able to place as many lights as we wanted,” said Klimov. “That’s the beauty of it — you can creatively decide what the limit is that works for you.”

Traditionally, artists and developers achieved complex lighting with baked solutions. The ReSTIR paper, produced by NVIDIA Research in collaboration with the Visual Computing Lab at Dartmouth College, shows how to render direct lighting from millions of moving lights instead.

The approach requires no complex light data structures, no baking and no global scene parameterization. Every light can cast shadows, everything can move arbitrarily and new emitters can be added dynamically. The technique is implemented using DirectX Raytracing (DXR), accelerated by NVIDIA RTX and RT Cores.
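The reservoir idea can be made concrete with a minimal Python sketch of its two building blocks: streaming resampled importance sampling over many lights, and merging reservoirs as in spatiotemporal reuse. It assumes point lights stored as hypothetical `(x, y, z, intensity)` tuples, uniform candidate selection and a target function equal to unshadowed intensity over squared distance. `Reservoir`, `target_pdf`, `ris_reservoir` and `combine_reservoirs` are illustrative names, not NVIDIA or Omniverse APIs, and the sketch omits shadow rays, temporal reprojection and the paper's bias correction, so it is a teaching aid rather than the Marbles renderer.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

# Hypothetical scene representation: a point light is (x, y, z, intensity).
Light = Tuple[float, float, float, float]
Point = Tuple[float, float, float]


@dataclass
class Reservoir:
    """Holds one light sample chosen by weighted reservoir sampling."""
    y: Optional[int] = None  # index of the currently selected light
    w_sum: float = 0.0       # running sum of resampling weights
    M: int = 0               # number of candidates this reservoir represents
    W: float = 0.0           # contribution weight used when shading with y

    def update(self, x: int, w: float, rng: random.Random) -> None:
        # Keep candidate x with probability w / w_sum (single-sample reservoir).
        self.w_sum += w
        self.M += 1
        if self.w_sum > 0.0 and rng.random() < w / self.w_sum:
            self.y = x


def target_pdf(light: Light, p: Point) -> float:
    # Assumed target function: unshadowed intensity with inverse-square falloff.
    lx, ly, lz, intensity = light
    d2 = (lx - p[0]) ** 2 + (ly - p[1]) ** 2 + (lz - p[2]) ** 2
    return intensity / max(d2, 1e-6)


def finalize(r: Reservoir, lights: Sequence[Light], p: Point) -> Reservoir:
    # W = (1 / p_hat(y)) * (w_sum / M), the RIS contribution weight.
    if r.y is not None and r.M > 0:
        p_hat = target_pdf(lights[r.y], p)
        r.W = r.w_sum / (r.M * p_hat) if p_hat > 0.0 else 0.0
    return r


def ris_reservoir(lights: Sequence[Light], p: Point, num_candidates: int,
                  rng: random.Random) -> Reservoir:
    # Stream candidates drawn uniformly (source pdf 1/N); no light hierarchy or baking.
    r = Reservoir()
    n = len(lights)
    for _ in range(num_candidates):
        i = rng.randrange(n)
        r.update(i, target_pdf(lights[i], p) * n, rng)  # w = p_hat / (1/N)
    return finalize(r, lights, p)


def combine_reservoirs(reservoirs: List[Reservoir], lights: Sequence[Light],
                       p: Point, rng: random.Random) -> Reservoir:
    # Merge reservoirs (e.g. from neighbors or the previous frame) at point p.
    # Simplified: omits the paper's visibility reuse and bias correction.
    s = Reservoir()
    for r in reservoirs:
        if r.y is not None:
            s.update(r.y, target_pdf(lights[r.y], p) * r.W * r.M, rng)
    s.M = sum(r.M for r in reservoirs)  # merged reservoir covers all candidates
    return finalize(s, lights, p)


if __name__ == "__main__":
    rng = random.Random(1)
    lights = [(rng.uniform(-10, 10), rng.uniform(-10, 10), 0.0, rng.uniform(1, 2))
              for _ in range(10_000)]
    shading_point = (0.0, 0.0, 10.0)
    current = ris_reservoir(lights, shading_point, 32, rng)
    previous = ris_reservoir(lights, shading_point, 32, rng)
    merged = combine_reservoirs([current, previous], lights, shading_point, rng)
    print("selected light:", merged.y, "candidates represented:", merged.M)
```

Each reservoir stores a single light index plus three numbers, and the work per shading point depends on the candidate count rather than the total number of lights. In this sketch, that is why adding emitters requires no acceleration structure over the lights.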

Get more insights into the NVIDIA Research that’s helping professionals simplify complex design workflows, and learn about the latest announcement of Omniverse, now in open beta.
