NVIDIA’s latest academic collaborations in graphics research have produced a reinforcement learning model that smoothly simulates athletic moves, ultra-thin holographic glasses for virtual reality, and a real-time rendering technique for objects illuminated by hidden light sources.

These projects — and over a dozen more — will be on display at SIGGRAPH 2022, taking place Aug. 8-11 in Vancouver and online. NVIDIA researchers have 16 technical papers accepted at the conference, representing work with 14 universities including Dartmouth College, Stanford University, the Swiss Federal Institute of Technology Lausanne and Tel Aviv University.

The papers span the breadth of graphics research, with advancements in neural content creation tools, display and human perception, the mathematical foundations of computer graphics and neural rendering.

Neural Tool for Multi-Skilled Simulated Characters

When a reinforcement learning model is used to develop a physics-based animated character, the AI typically learns just one skill at a time: walking, running or perhaps cartwheeling. But researchers from UC Berkeley, the University of Toronto and NVIDIA have created a framework that enables AI to learn a whole repertoire of skills, demonstrated with a warrior character who can wield a sword, use a shield and get back up after a fall.

Achieving these smooth, lifelike motions for animated characters is usually tedious and labor-intensive, with developers starting from scratch to train the AI for each new task. As outlined in the team's paper, the researchers allowed the reinforcement learning AI to reuse previously learned skills to respond to new scenarios, improving efficiency and reducing the need for additional motion data.

Tools like this one can be used by creators in animation, robotics, gaming and therapeutics. At SIGGRAPH, NVIDIA researchers will also present papers about 3D neural tools for surface reconstruction from point clouds and interactive shape editing, plus 2D tools for AI to better understand gaps in vector sketches and improve the visual quality of time-lapse videos.

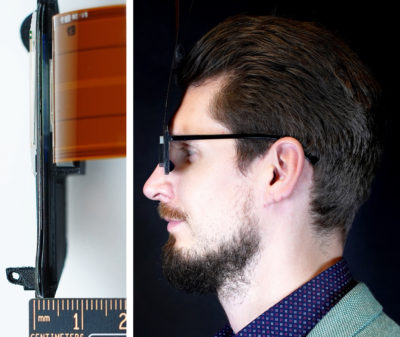

Bringing Virtual Reality to Lightweight Glasses

Most virtual reality users access 3D digital worlds by putting on bulky head-mounted displays, but researchers are working on lightweight alternatives that resemble standard eyeglasses.

A collaboration between NVIDIA and Stanford researchers has packed the technology needed for 3D holographic images into a wearable display just a couple of millimeters thick. The 2.5-millimeter display is less than half the thickness of other thin VR displays, known as pancake lenses, which use a technique called folded optics that can only support 2D images.

The researchers accomplished this feat by approaching display quality and display size as a computational problem, and co-designing the optics with an AI-powered algorithm.

While prior VR displays require distance between a magnifying eyepiece and a display panel to create a hologram, this new design uses a spatial light modulator, a tool that can create holograms right in front of the user’s eyes, without needing this gap. Additional components — a pupil-replicating waveguide and geometric phase lens — further reduce the device’s bulkiness.

It’s one of two VR collaborations between Stanford and NVIDIA at the conference, with another paper proposing a new computer-generated holography framework that improves image quality while optimizing bandwidth usage. A third paper in this field of display and perception research, co-authored with New York University and Princeton University scientists, measures how rendering quality affects the speed at which users react to on-screen information.

Lightbulb Moment: New Levels of Real-Time Lighting Complexity

Accurately simulating the pathways of light in a scene in real time has always been considered the “holy grail” of graphics. Work detailed in a paper by the University of Utah’s School of Computing and NVIDIA is raising the bar, introducing a path resampling algorithm that enables real-time rendering of scenes with complex lighting, including hidden light sources.

Think of walking into a dim room, with a glass vase on a table illuminated indirectly by a street lamp located outside. The glossy surface creates a long light path, with rays bouncing many times between the light source and the viewer’s eye. Computing these light paths is usually too complex for real-time applications like games, so it’s mostly done for films or other offline rendering applications.

This paper highlights the use of statistical resampling techniques during rendering, in which the algorithm reuses computations thousands of times while tracing these complex light paths, to approximate the light paths efficiently in real time. The researchers applied the algorithm to a classic challenging scene in computer graphics: an indirectly lit set of teapots made of metal, ceramic and glass.
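To give a feel for what statistical resampling means here, the following is a minimal sketch of basic resampled importance sampling on a one-dimensional toy integral. It is not the algorithm from the paper: it does not trace light paths or reuse samples across pixels and frames, and every name in it (`ris_estimate`, `average_estimate`, the integrand and the cheap target function) is a hypothetical choice made for illustration. The idea it shows is that several cheap candidate samples are drawn, one is kept in proportion to a cheap approximation of the integrand, and the kept sample is reweighted so the estimate stays unbiased.

```python
import random


def ris_estimate(f, target, sample_source, source_pdf, num_candidates, rng):
    """One resampled-importance-sampling estimate of the integral of f.

    Candidates come from `sample_source` (density `source_pdf`). One of them
    is kept with probability proportional to target(x) / source_pdf(x), using
    a single-slot weighted reservoir so candidates never need to be stored.
    """
    chosen = None
    weight_sum = 0.0
    for _ in range(num_candidates):
        x = sample_source(rng)
        w = target(x) / source_pdf(x)  # resampling weight of this candidate
        weight_sum += w
        # Replace the kept sample with probability w / weight_sum.
        if w > 0.0 and rng.random() * weight_sum < w:
            chosen = x
    if chosen is None:  # every candidate had zero weight
        return 0.0
    # Reweight the survivor so the estimator is unbiased.
    return f(chosen) / target(chosen) * (weight_sum / num_candidates)


def average_estimate(runs, num_candidates, seed=0):
    """Average many estimates of the toy integral of x^2 over [0, 1]."""
    rng = random.Random(seed)
    f = lambda x: x * x                # toy "expensive" integrand (assumption)
    target = lambda x: x               # cheap stand-in for the integrand's shape
    sample_source = lambda r: r.random()  # uniform candidates on [0, 1)
    source_pdf = lambda x: 1.0
    total = 0.0
    for _ in range(runs):
        total += ris_estimate(f, target, sample_source, source_pdf,
                              num_candidates, rng)
    return total / runs


if __name__ == "__main__":
    # The exact value of the toy integral is 1/3.
    print(average_estimate(runs=20000, num_candidates=8))
```

In a renderer, the candidates would be light paths rather than numbers, and the reservoir is what makes it cheap to carry a kept sample from one pixel or frame to the next.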

Related NVIDIA-authored papers at SIGGRAPH include a new sampling strategy for inverse volume rendering, a novel mathematical representation for 2D shape manipulation, software to create samplers with improved uniformity for rendering and other applications, and a way to turn biased rendering algorithms into more efficient unbiased ones.

Neural Rendering: NeRFs, GANs Power Synthetic Scenes

Neural rendering algorithms learn from real-world data to create synthetic images — and NVIDIA research projects are developing state-of-the-art tools to do so in 2D and 3D.

In 2D, the StyleGAN-NADA model, developed in collaboration with Tel Aviv University, generates images with specific styles based on a user’s text prompts, without requiring example images for reference. For instance, a user could generate vintage car images, turn their dog into a painting or transform houses to huts.

And in 3D, researchers at NVIDIA and the University of Toronto are developing tools that can support the creation of large-scale virtual worlds. Instant neural graphics primitives, the NVIDIA paper behind the popular Instant NeRF tool, will be presented at SIGGRAPH.

NeRFs, 3D scenes based on a collection of 2D images, are just one capability of the neural graphics primitives technique. It can be used to represent any complex spatial information, with applications including image compression, highly accurate representations of 3D shapes and ultra-high resolution images.

This work pairs with a University of Toronto collaboration that compresses 3D neural graphics primitives just as JPEG is used to compress 2D images. This can help users store and share 3D maps and entertainment experiences between small devices like phones and robots.

There are more than 300 NVIDIA researchers around the globe, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics. Learn more about NVIDIA Research.