If the King of Sweden wants help drafting his annual Christmas speech this year, he could ask the same AI model that’s available to his 10 million subjects.

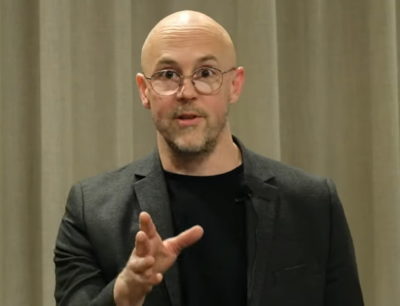

As a test, researchers prompted the model, called GPT-SW3, to draft one of the royal messages, and it did a pretty good job, according to Magnus Sahlgren, who heads research in natural language understanding at AI Sweden, a consortium kickstarting the country’s journey into the machine learning era.

“Later, our minister of digitalization visited us and asked the model to generate arguments for political positions and it came up with some really clever ones — and he intuitively understood how to prompt the model to generate good text,” Sahlgren said.

Early successes inspired work on an even larger and more powerful version of the language model, one the team hopes will serve any citizen, company or government agency in Scandinavia.

A Multilingual Model

The current version packs 3.6 billion parameters and is smart enough to do a few cool things in Swedish. Sahlgren’s team aims to train a state-of-the-art model with a whopping 175 billion parameters that can handle all sorts of language tasks in the Nordic languages of Swedish, Danish, Norwegian and, it hopes, Icelandic, too.

For example, a startup can use it to automatically generate product descriptions for an e-commerce website given only the products’ names. Government agencies can use it to quickly classify and route questions from citizens.

Companies can ask it to rapidly summarize reports so they can react fast. Hospitals can run distilled versions of the model privately on their own systems to improve patient care.

“It’s a foundational model we will provide as a service for whatever tasks people want to solve,” said Sahlgren, who’s been working at the intersection of language and machine learning since he earned his Ph.D. in computational linguistics in 2006.

Permission to Speak Freely

A capable language model in a nation’s own language is increasingly seen as a strategic asset, a keystone of digital sovereignty in a world that speaks thousands of languages across nearly 200 countries.

Most language services today focus on Chinese or English, the world’s two most-spoken tongues. They’re typically created in China or the U.S., and they aren’t free.

“It’s important for us to have models built in Sweden for Sweden,” Sahlgren said.

Small Team, Super System

“We’re a small country and a core team of about six people, yet we can build a state-of-the-art resource like this for people to use,” he added.

That’s because Sweden has a powerful engine in BerzeLiUs, a 300-petaflops AI supercomputer at Linköping University. It trained the initial GPT-SW3 model using just 16 of the 60 nodes in the NVIDIA DGX SuperPOD.

The next model may exercise all the system’s nodes. Such super-sized jobs require super software like the NVIDIA NeMo Megatron framework.

“It lets us scale our training up to the full supercomputer, and we’ve been lucky enough to have access to experts in the NeMo development team — without NVIDIA it would have been so much more complicated to come this far,” he said.
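For a rough sense of why a 175-billion-parameter model is a super-sized job, the Python snippet below does some back-of-envelope arithmetic. It assumes 2 bytes per parameter for 16-bit weights and a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (16-bit weights and gradients plus 32-bit master weights and two 32-bit optimizer moments). It ignores activations and other overheads, so the figures are illustrative lower bounds, not measurements from AI Sweden’s training runs.

```python
# Back-of-envelope memory estimate (illustrative assumptions, not measurements):
#   - 16-bit weights: 2 bytes per parameter
#   - mixed-precision Adam training: ~16 bytes per parameter
#     (fp16 weights 2 + fp16 gradients 2 + fp32 master weights 4
#      + fp32 Adam first moment 4 + fp32 Adam second moment 4)
# Activations, buffers and framework overhead are ignored.

BYTES_PER_PARAM_WEIGHTS = 2
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4  # = 16


def to_gb(num_bytes: float) -> float:
    """Convert bytes to decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_bytes / 1e9


def memory_estimate(num_params: float) -> dict:
    """Return rough weight and training-state memory in GB for a model size."""
    return {
        "weights_gb": to_gb(num_params * BYTES_PER_PARAM_WEIGHTS),
        "training_state_gb": to_gb(num_params * BYTES_PER_PARAM_TRAINING),
    }


for name, params in [("current GPT-SW3", 3.6e9), ("planned model", 175e9)]:
    est = memory_estimate(params)
    print(f"{name}: ~{est['weights_gb']:,.1f} GB of 16-bit weights, "
          f"~{est['training_state_gb']:,.1f} GB of training state")
```

Under these assumptions, the current 3.6-billion-parameter model needs roughly 7.2 GB for its weights and about 58 GB of training state, while the planned 175-billion-parameter model needs roughly 350 GB and 2.8 TB. Even the weights alone would exceed any single GPU’s memory, which is why frameworks such as NeMo Megatron split one model across many GPUs and nodes.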

A Workflow for Any Language

NVIDIA’s engineers created a recipe based on NeMo and an emerging technique called p-tuning, which quickly adapts massive pretrained models to new tasks. The recipe is designed to work with any language.

In one early test, a model nearly doubled its accuracy after NVIDIA engineers applied the techniques.

What’s more, it requires one-tenth the data, slashing the need for tens of thousands of hand-labeled records. That opens the door for users to fine-tune a model with the relatively small, industry-specific datasets they have at hand.
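P-tuning leaves a pretrained model’s weights frozen and trains only a small set of continuous “virtual token” embeddings that are fed to the model alongside the real input, so far fewer parameters are updated than in full fine-tuning. The toy Python sketch below illustrates that core idea under heavy simplifying assumptions: the “model” is a hypothetical frozen bag-of-embeddings classifier (`EMBED`, `HEAD`) rather than a transformer, the dataset `DATA` is made up, and the virtual-token embeddings are learned directly (closer to plain prompt tuning) instead of being generated by a small prompt encoder as in the original p-tuning method. It is not NeMo’s implementation or API.

```python
import math
import random

random.seed(0)
DIM, NUM_CLASSES, NUM_VIRTUAL = 4, 2, 3

# Hypothetical frozen "pretrained" pieces standing in for a real language
# model: token embeddings and a linear output head. They are never updated.
VOCAB = ["good", "great", "bad", "awful", "movie", "film"]
EMBED = {w: [random.uniform(-1, 1) for _ in range(DIM)] for w in VOCAB}
HEAD = [[random.uniform(-1, 1) for _ in range(NUM_CLASSES)] for _ in range(DIM)]

# A tiny, hypothetical labeled task (1 = positive, 0 = negative).
DATA = [(["good", "movie"], 1), (["great", "film"], 1),
        (["bad", "movie"], 0), (["awful", "film"], 0)]


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(prompt, tokens):
    """Mean-pool [virtual tokens; real token embeddings], then apply the frozen head."""
    vectors = prompt + [EMBED[t] for t in tokens]
    n = len(vectors)
    pooled = [sum(v[j] for v in vectors) / n for j in range(DIM)]
    logits = [sum(pooled[j] * HEAD[j][c] for j in range(DIM))
              for c in range(NUM_CLASSES)]
    return softmax(logits), n


def loss_and_grad(prompt, data):
    """Average cross-entropy over data, and its gradient w.r.t. each virtual token."""
    total = 0.0
    grad = [[0.0] * DIM for _ in prompt]
    for tokens, label in data:
        probs, n = forward(prompt, tokens)
        total -= math.log(probs[label])
        # Softmax + cross-entropy gradient w.r.t. the logits: probs - one_hot(label).
        dlogits = [p - (1.0 if c == label else 0.0) for c, p in enumerate(probs)]
        # Back through the frozen head to the pooled vector.
        dpooled = [sum(HEAD[j][c] * dlogits[c] for c in range(NUM_CLASSES))
                   for j in range(DIM)]
        # Mean pooling passes dpooled / n to every input vector; only the
        # virtual-token vectors are trainable, so only they accumulate it.
        for g in grad:
            for j in range(DIM):
                g[j] += dpooled[j] / n / len(data)
    return total / len(data), grad


def p_tune(data, steps=200, lr=0.5):
    """Gradient descent on the virtual-token embeddings only; EMBED and HEAD stay frozen."""
    prompt = [[0.0] * DIM for _ in range(NUM_VIRTUAL)]
    for _ in range(steps):
        _, grad = loss_and_grad(prompt, data)
        for vec, g in zip(prompt, grad):
            for j in range(DIM):
                vec[j] -= lr * g[j]
    return prompt


before, _ = loss_and_grad([[0.0] * DIM for _ in range(NUM_VIRTUAL)], DATA)
after, _ = loss_and_grad(p_tune(DATA), DATA)
print(f"average loss before tuning: {before:.3f}, after: {after:.3f}")
```

Because this toy model simply averages its inputs, the learned vectors act like a shared bias; in a real transformer the virtual tokens interact with the input through attention, which is what lets a handful of trained embeddings steer a large frozen model toward a new task.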

“We hope to inspire a lot of entrepreneurship in industry, startups and the public using our technology to develop their own apps and services,” said Sahlgren.

Writing the Next Chapter

Meanwhile, NVIDIA’s developers are already working on ways to make the enabling software better.

One test shows great promise for using widely available English datasets to train new capabilities into models designed for any language. In another effort, they’re applying p-tuning techniques to inference jobs so models can learn on the fly.

Zenodia Charpy, a senior solutions architect at NVIDIA based in Gothenburg, shares the enthusiasm of the AI Sweden team she supports. “We’ve only just begun trying new and better methods to tackle these large language challenges — there’s much more to come,” she said.

The GPT-SW3 model will be made available by the end of the year via an early access program. To apply, contact francisca.hoyer@ai.se.