AI has transformed synthesized speech from the monotone of robocalls and decades-old GPS navigation systems to the polished tone of virtual assistants in smartphones and smart speakers.

But there’s still a gap between AI-synthesized speech and the human speech we hear in daily conversation and in the media. That’s because people speak with complex rhythm, intonation and timbre that’s challenging for AI to emulate.

The gap is closing fast: NVIDIA researchers are building models and tools for high-quality, controllable speech synthesis that capture the richness of human speech, without audio artifacts. Their latest projects are now on display in sessions at the Interspeech 2021 conference, which runs through Sept. 3.

These models can help voice automated customer service lines for banks and retailers, bring video-game or book characters to life, and provide real-time speech synthesis for digital avatars.

NVIDIA’s in-house creative team even uses the technology to produce expressive narration for a video series on the power of AI.

Expressive speech synthesis is just one element of NVIDIA Research's work in conversational AI, a field that also encompasses natural language processing, automatic speech recognition, keyword detection, audio enhancement and more.

Some of this cutting-edge work, optimized to run efficiently on NVIDIA GPUs, has been made open source through the NVIDIA NeMo toolkit, available on NGC, our hub of containers and other software.

Behind the Scenes of I AM AI

NVIDIA researchers and creative professionals don’t just talk the conversational AI talk. They walk the walk, putting groundbreaking speech synthesis models to work in our I AM AI video series, which features global AI innovators reshaping just about every industry imaginable.

But until recently, these videos were narrated by a human. Previous speech synthesis models offered limited control over a synthesized voice’s pacing and pitch, so attempts at AI narration didn’t evoke the emotional response in viewers that a talented human speaker could.

That changed over the past year when NVIDIA's text-to-speech research team developed more powerful, controllable speech synthesis models like RAD-TTS, used in our winning demo at the SIGGRAPH Real-Time Live competition. Trained on audio of an individual's speech, RAD-TTS can convert any text prompt into that speaker's voice.

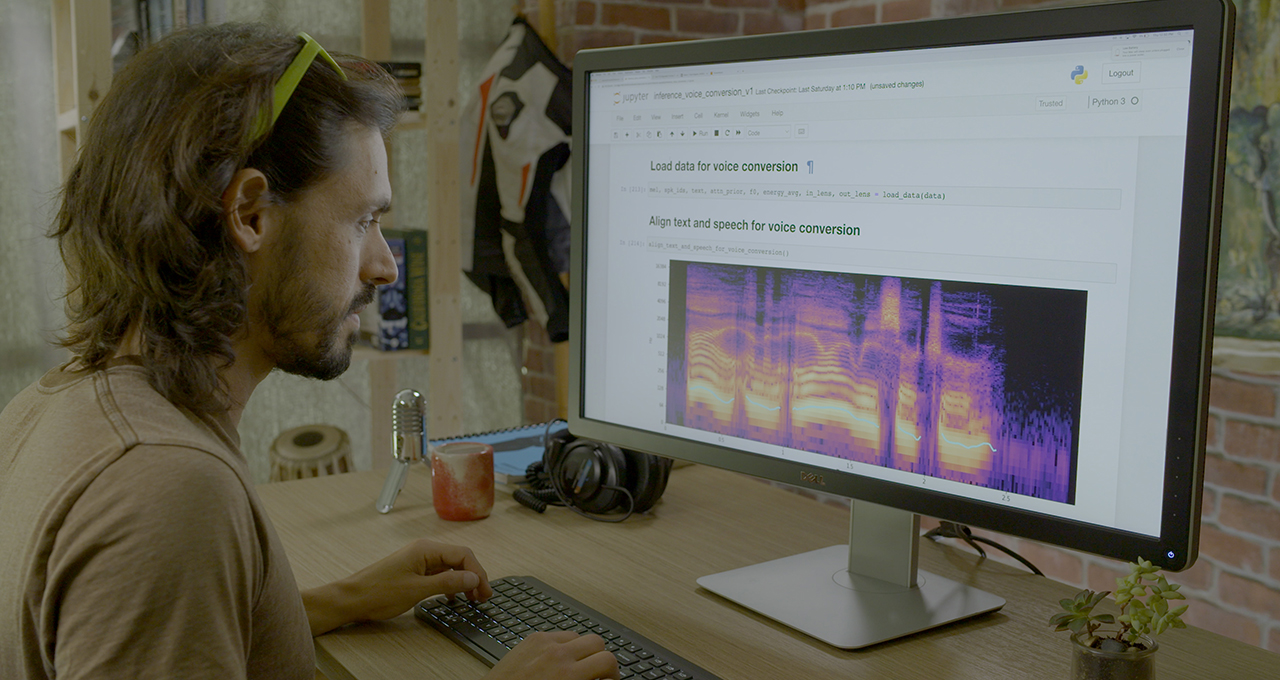

Another of its features is voice conversion, in which one speaker's words (or even singing) are delivered in another speaker's voice. Inspired by the idea of the human voice as a musical instrument, the RAD-TTS interface gives users fine-grained, frame-level control over the synthesized voice's pitch, duration and energy.

With this interface, our video producer could record himself reading the video script, and then use the AI model to convert his speech into the female narrator’s voice. Using this baseline narration, the producer could then direct the AI like a voice actor — tweaking the synthesized speech to emphasize specific words, and modifying the pacing of the narration to better express the video’s tone.
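To make "frame-level control" a little more concrete, here is a minimal, hypothetical sketch in Python of the kind of edits the producer made. It assumes each word of a narration is represented by one pitch value and one energy value per audio frame, so a word's duration is simply its frame count. Emphasizing a word raises its pitch and energy, and changing the pacing resamples every word to more or fewer frames. The names `WordProsody`, `emphasize` and `set_pace`, and all the numbers, are illustrative assumptions, not the RAD-TTS or NeMo API; a real system would pass the edited contours to the speech model instead of printing them.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class WordProsody:
    """Hypothetical frame-level prosody for one word (not a RAD-TTS type)."""
    text: str
    pitch_hz: List[float]  # one pitch value per audio frame
    energy: List[float]    # one energy value per audio frame

    @property
    def duration(self) -> int:
        # Duration is the number of frames the word occupies.
        return len(self.pitch_hz)


def emphasize(words, index, pitch_scale=1.2, energy_scale=1.3):
    """Return a copy of `words` with pitch and energy raised on one word."""
    w = words[index]
    boosted = WordProsody(
        w.text,
        [p * pitch_scale for p in w.pitch_hz],
        [e * energy_scale for e in w.energy],
    )
    return words[:index] + [boosted] + words[index + 1:]


def stretch(contour, new_len):
    """Nearest-neighbor resampling of a per-frame contour to `new_len` frames."""
    old_len = len(contour)
    return [contour[min(old_len - 1, int(i * old_len / new_len))] for i in range(new_len)]


def set_pace(words, rate):
    """Rescale every word's duration: rate > 1 speaks faster, rate < 1 slower."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    out = []
    for w in words:
        new_len = max(1, round(w.duration / rate))
        out.append(WordProsody(w.text, stretch(w.pitch_hz, new_len), stretch(w.energy, new_len)))
    return out


# Illustrative narration with made-up pitch (Hz) and energy values.
utterance = [
    WordProsody("the", [120.0] * 4, [0.5] * 4),
    WordProsody("power", [130.0] * 8, [0.6] * 8),
    WordProsody("of", [118.0] * 3, [0.4] * 3),
    WordProsody("AI", [125.0] * 10, [0.6] * 10),
]

# Stress "AI", then slow the whole line down slightly.
edited = set_pace(emphasize(utterance, 3), rate=0.8)
print([(w.text, w.duration, w.pitch_hz[0]) for w in edited])
```

Keeping each edit as a pure function that returns a new list mirrors how a producer would iterate: try an emphasis or pacing change, listen, and fall back to the baseline narration if it doesn't work.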

The AI model's capabilities go beyond voiceover work: text-to-speech can voice characters in games, aid individuals with vocal disabilities or help users speak other languages in their own voice. It can even recreate the performances of iconic singers, matching not only the melody of a song, but also the emotional expression behind the vocals.

Giving Voice to AI Developers, Researchers

With NVIDIA NeMo — an open-source Python toolkit for GPU-accelerated conversational AI — researchers, developers and creators gain a head start in experimenting with, and fine-tuning, speech models for their own applications.

Easy-to-use APIs and models pretrained in NeMo help researchers develop and customize models for text-to-speech, natural language processing and real-time automatic speech recognition. Several of the models are trained on tens of thousands of hours of audio data on NVIDIA DGX systems. Developers can fine-tune any model for their use cases, speeding up training with mixed-precision computing on NVIDIA Tensor Core GPUs.

Through NGC, NVIDIA NeMo also offers models trained on Mozilla Common Voice, a dataset with nearly 14,000 hours of crowd-sourced speech data in 76 languages. Supported by NVIDIA, the project aims to democratize voice technology with the world’s largest open data voice dataset.

Voice Box: NVIDIA Researchers Unpack AI Speech

Interspeech brings together more than 1,000 researchers to showcase groundbreaking work in speech technology. At this week’s conference, NVIDIA Research is presenting conversational AI model architectures as well as fully formatted speech datasets for developers.

Catch the following sessions led by NVIDIA speakers:

- Scene-Agnostic Multi-Microphone Speech Dereverberation — Tues., Aug. 31

- SPGISpeech: 5,000 Hours of Transcribed Financial Audio for Fully Formatted End-to-End Speech Recognition — Weds., Sept. 1

- Hi-Fi Multi-Speaker English TTS Dataset — Weds., Sept. 1

- TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Prediction — Thurs., Sept. 2

- Compressing 1D Time-Channel Separable Convolutions Using Sparse Random Ternary Matrices — Fri., Sept. 3

- NeMo Inverse Text Normalization: From Development to Production — Fri., Sept. 3

Find NVIDIA NeMo models in the NGC catalog, and tune into talks by NVIDIA researchers at Interspeech.