Whether you’re managing stadiums, cities or global corporations, edge AI is critical to improving operational efficiency.

That’s the message from people drawn from each of these fields who will share their experiences and aspirations in a panel at GTC.

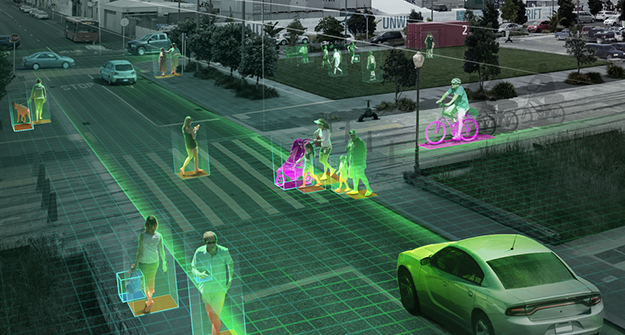

They’re using a type of AI called intelligent video analytics (IVA), powered by edge computing. IVA combines computer vision and deep learning to turn mountains of data into actionable insights.

Taking Edge AI on the Road

“Transportation is so ripe for this — the possibilities are endless,” said James Alberque, a tech manager for the City of Raleigh, North Carolina, and one of the panelists.

For example, Raleigh, like many cities, hires consultants to stand at a few intersections every so often and record the volume and direction of traffic. With IVA, it can gather traffic data on dozens of intersections 24/7.

AI models can use that data to improve everything from plans for new roads to timing for traffic lights during bad weather or a special event. Citizens could potentially get real-time feeds so they can plan trips, know when the next bus is due or find out if there’s a free bike or scooter at a nearby public corral.

Smarts Where You Need Them

Edge AI makes such insights possible because it collects and processes events where they happen. This approach also minimizes the cost and delay of sending data to the cloud.

Royal Dutch Shell has been applying edge AI to autonomously inspect its assets for integrity issues, detect and prevent safety incidents and use robots to check the operational status of equipment.

“We start with a proof of concept to assess technical feasibility. Once it’s successful, we create a minimal viable product in operations, using historical and real-life data. We then hand it over to the IT department to scale it up,” said Xin Wang, a GTC panelist and machine vision manager.

Curating an AI Library

Today the company has a library of pre-trained models it can quickly optimize with transfer learning for new use cases as they emerge, said Siva Chamarti, a principal machine learning engineer who started Shell’s AI engineering team and is also on the GTC panel.

The NVIDIA DeepStream SDK helped simplify an early edge AI project for Shell. The project ran five AI models on data from 10 closed-circuit video cameras to serve five use cases.

“Before DeepStream, it was difficult to share video from ten cameras with the models in an efficient way. We needed a lot of memory to copy and buffer it,” said Chamarti. “But with DeepStream, we could unpack the videos faster, giving each model the same frame references, making it easier to run our use cases on small edge devices.”
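The idea of giving each model the same frame reference, rather than its own copy, can be shown with a small Python sketch. This is an illustration only and not DeepStream's API: the `Frame` class, the `decode` and `fan_out` functions and the toy models are all hypothetical names invented for this example, and a `bytes` object stands in for a real image buffer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Frame:
    """One decoded video frame; `pixels` stands in for a large image buffer."""
    camera_id: int
    index: int
    pixels: bytes


def decode(camera_id: int, index: int, size: int = 1024) -> Frame:
    """Hypothetical decoder: produce one frame buffer for one camera."""
    return Frame(camera_id, index, bytes(size))


def fan_out(frame: Frame, models: Dict[str, Callable[[Frame], str]]) -> Dict[str, str]:
    """Run every model on the same Frame object; no per-model copy is made."""
    return {name: model(frame) for name, model in models.items()}


# Toy "models" that record the identity of the buffer they were handed.
seen_buffers: List[int] = []


def make_model(label: str) -> Callable[[Frame], str]:
    def model(frame: Frame) -> str:
        seen_buffers.append(id(frame.pixels))
        return f"{label}: camera {frame.camera_id}, frame {frame.index}"
    return model


# Five models, matching the five use cases described above.
models = {f"model_{i}": make_model(f"use case {i}") for i in range(1, 6)}

frame = decode(camera_id=3, index=0)   # decode once
results = fan_out(frame, models)       # share the same buffer five times
```

Because each frame is decoded once and then passed by reference, the memory needed grows with the number of cameras rather than with cameras times models, which is the saving the quote describes.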

AI Assists on Game Day

Gillette Stadium, the home of the New England Patriots, plans to use edge AI to manage thousands of video streams for as many as 10 use cases.

For example, the system will send staff alerts when it detects security concerns, said panelist Michael Israel, chief information officer for the Kraft Group, a conglomerate that operates the 65,000-seat stadium.

The group also runs a recycling business where edge AI analyzes bundles of cardboard the size of small cars. Smart cameras identify seven different grades of cardboard so forklift operators can load the proper mix of bales onto the conveyor.

“The more we follow these methods the better we perform as a manufacturer,” said Israel.

The wide variety of use cases for edge AI is one reason we created NVIDIA Metropolis. It’s an application framework that acts as software scaffolding for IVA in industries such as healthcare, retail and manufacturing.

Register for free to watch the panel (session A31422) during NVIDIA GTC, taking place online Nov. 8-11. You can also watch NVIDIA founder and CEO Jensen Huang’s GTC keynote address streaming on Nov. 9 and in replay.