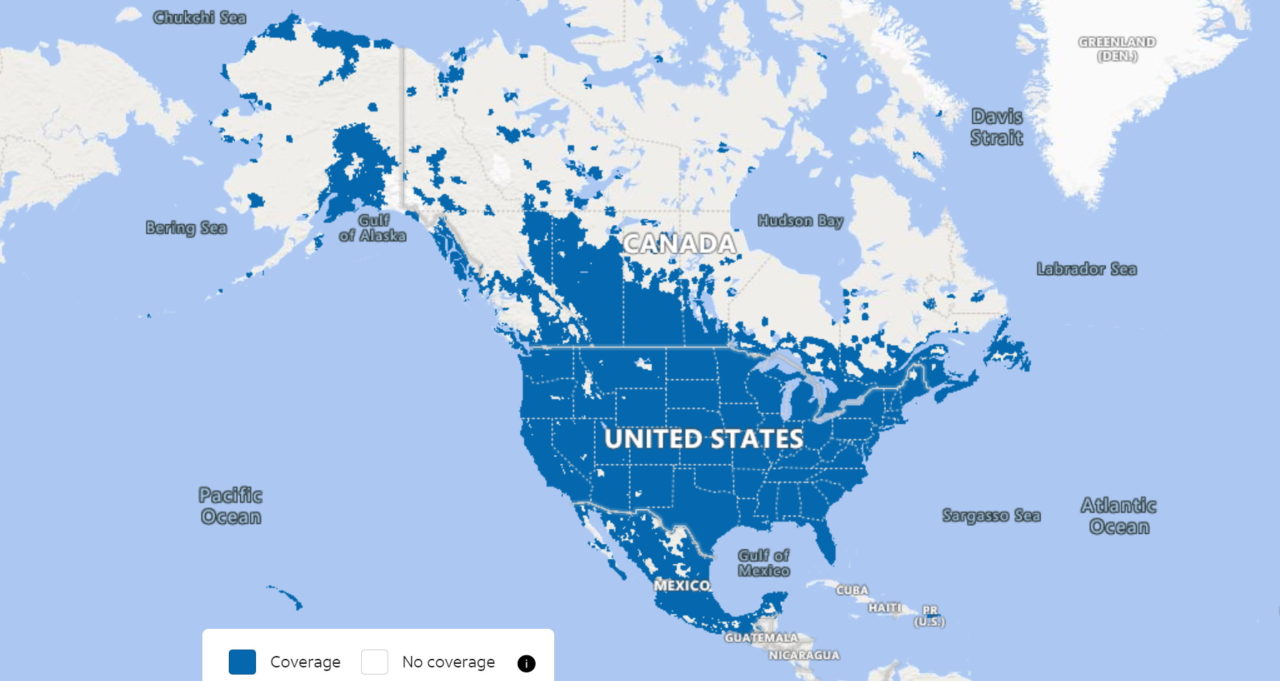

AT&T’s wireless network connects more than 100 million subscribers from the Aleutian Islands to the Florida Keys, spawning a big data sea.

Abhay Dabholkar runs a research group that acts like a lighthouse on the lookout for the best tools to navigate it.

“It’s fun, we get to play with new tools that can make a difference for AT&T’s day-to-day work, and when we give staff the latest and greatest tools it adds to their job satisfaction,” said Dabholkar, a distinguished AI architect who’s been with the company more than a decade.

Recently, the team tested the NVIDIA RAPIDS Accelerator for Apache Spark on GPU-powered servers. The software is a plug-in that lets Spark, which spreads work across the nodes of a cluster, run its jobs on GPUs.

It processed a month’s worth of mobile data, 2.8 trillion rows of information, in just five hours. That’s 3.3x faster, at 60 percent lower cost, than any prior test.
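The reported figures imply a few derived numbers. This Python sketch calculates them. It assumes that "3.3x faster" means the best prior run took 3.3 times as long, and that the cost figure is relative to that run. The results are arithmetic on the article's numbers, not additional measurements.

```python
# Figures reported for the RAPIDS Accelerator test.
ROWS = 2.8e12          # a month of mobile data: 2.8 trillion rows
GPU_HOURS = 5.0        # wall-clock time of the GPU run
SPEEDUP = 3.3          # "3.3x faster" than the best prior test
COST_REDUCTION = 0.60  # "60 percent lower cost"


def implied_figures(rows, hours, speedup, cost_reduction):
    """Derive throughput, the implied prior runtime and the relative cost.

    Assumption: "Nx faster" means the prior run took N times as long,
    and the cost reduction is measured against that prior run.
    """
    rows_per_second = rows / (hours * 3600)
    prior_hours = hours * speedup
    relative_cost = 1.0 - cost_reduction
    return rows_per_second, prior_hours, relative_cost


rate, prior, cost = implied_figures(ROWS, GPU_HOURS, SPEEDUP, COST_REDUCTION)
print(f"throughput: {rate / 1e6:.1f} million rows/s")
print(f"implied prior runtime: {prior:.1f} hours")
print(f"GPU run cost: {cost:.0%} of the prior run")
```

Under those assumptions, the GPU run sustained roughly 156 million rows per second. The best prior run would have taken about 16.5 hours.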

A Wow Moment

“It was a wow moment because on CPU clusters it takes more than 48 hours to process just seven days of data — in the past, we had the data but couldn’t use it because it took such a long time to process it,” he said.

Specifically, the test benchmarked what’s called ETL, the extract, transform and load process that cleans up data before it can be used to train the AI models that uncover fresh insights.
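This minimal, single-machine sketch in plain Python shows the three ETL stages. The column names (`device_id`, `cell_id`, `signal_dbm`) and the sample rows are hypothetical, and a dict stands in for the target table. A Spark job would typically express the same stages as distributed DataFrame operations.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw export; columns and values are illustrative only.
RAW = """device_id,cell_id,signal_dbm
d1,cellA,-71
d2,cellA,
d3,cellB,-95
d1,cellA,-71
d4,cellB,not_a_number
d5,cellC,-60
"""


def extract(text):
    """Extract: parse raw CSV text into one dict per record."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop malformed and duplicate rows, then average signal per cell."""
    seen = set()
    sums = defaultdict(float)
    counts = defaultdict(int)
    for row in rows:
        try:
            signal = float(row["signal_dbm"])
        except ValueError:
            continue  # empty or non-numeric reading
        key = (row["device_id"], row["cell_id"], signal)
        if key in seen:
            continue  # exact duplicate record
        seen.add(key)
        sums[row["cell_id"]] += signal
        counts[row["cell_id"]] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


def load(summary, target):
    """Load: write the summary into a target store (a dict standing in for a table)."""
    for cell, avg in summary.items():
        target[cell] = {"avg_signal_dbm": avg}
    return target


warehouse = load(transform(extract(RAW)), {})
print(warehouse)
```

The transform step drops the empty, non-numeric and duplicate rows, so each cell ends up with a single clean average.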

“Now we’re thinking GPUs can be used for ETL and all sorts of batch-processing workloads we do in Spark, so we’re exploring other RAPIDS libraries to extend work from feature engineering to ETL and machine learning,” he said.

Today, AT&T runs ETL on CPU servers, then moves data to GPU servers for training. Doing everything in one GPU pipeline can save time and cost, he added.

Pleasing Customers, Speeding Network Design

The savings could show up across a wide variety of use cases.

For example, users could find out more quickly where they get optimal connections, improving customer satisfaction and reducing churn. “We could decide parameters for our 5G towers and antennas more quickly, too,” he said.

Identifying where in the AT&T fiber footprint to send a support truck can require time-consuming geospatial calculations, something RAPIDS and GPUs could accelerate, said Chris Vo, a senior member of the team who supervised the RAPIDS tests.
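The article doesn't say which geospatial methods AT&T uses. This sketch is one hypothetical example of the kind of calculation involved. It picks the depot closest to a trouble location using the haversine great-circle distance. The depot names and coordinates are made up.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_depot(site, depots):
    """Return (name, distance_km) of the depot closest to site (brute force)."""
    lat, lon = site
    return min(
        ((name, haversine_km(lat, lon, dlat, dlon)) for name, (dlat, dlon) in depots.items()),
        key=lambda pair: pair[1],
    )


# Hypothetical depots and trouble-ticket location.
DEPOTS = {
    "depot_north": (33.10, -96.80),
    "depot_south": (32.60, -96.80),
    "depot_west": (32.78, -97.35),
}
ticket = (32.78, -96.80)
name, dist = nearest_depot(ticket, DEPOTS)
print(name, round(dist, 1))
```

At scale, most of the cost comes from repeating this for many locations against many candidates. Each distance is an independent computation, so the work parallelizes well.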

“We probably get 300-400 terabytes of fresh data a day, so this technology can have incredible impact — reports we generate over two or three weeks could be done in a few hours,” Dabholkar said.
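For a sense of scale, this quick conversion turns 300-400 terabytes a day into a sustained average rate. It assumes decimal units (1 TB = 10^12 bytes) and that data arrives evenly over the day.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def sustained_gb_per_s(tb_per_day):
    """Convert a daily volume in decimal terabytes to an average rate in GB/s."""
    return tb_per_day * 1e12 / SECONDS_PER_DAY / 1e9


for tb in (300, 400):
    print(f"{tb} TB/day is about {sustained_gb_per_s(tb):.2f} GB/s")
```

That works out to roughly 3.5 to 4.6 gigabytes of new data every second, around the clock.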

Three Use Cases and Counting

The researchers are sharing their results with members of AT&T’s data platform team.

“We recommend that if a job is taking too long and you have a lot of data, turn on GPUs — with Spark, the same code that runs on CPUs runs on GPUs,” he said.
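This minimal sketch shows what "turn on GPUs" can look like: the same application file is submitted twice, and only the Spark configuration changes. `spark.plugins=com.nvidia.spark.SQLPlugin` and `spark.rapids.sql.enabled` are RAPIDS Accelerator settings. The `spark.*.resource.gpu.amount` keys are Spark's standard GPU scheduling settings. The script name `etl_job.py`, the jar path and the resource amounts are hypothetical placeholders. Real clusters usually need extra setup, such as GPU discovery, that depends on the cluster manager. The code only builds the commands and does not launch Spark.

```python
import shlex

# RAPIDS Accelerator and Spark GPU-scheduling settings; values are examples.
GPU_CONF = {
    "spark.plugins": "com.nvidia.spark.SQLPlugin",
    "spark.rapids.sql.enabled": "true",
    "spark.executor.resource.gpu.amount": "1",
    "spark.task.resource.gpu.amount": "0.25",
}


def spark_submit_cmd(app, use_gpu, rapids_jar=None):
    """Build a spark-submit argument list; the application file is the same either way."""
    cmd = ["spark-submit"]
    if use_gpu:
        if rapids_jar is None:
            raise ValueError("a GPU run needs the RAPIDS Accelerator jar")
        cmd += ["--jars", rapids_jar]
        for key, value in GPU_CONF.items():
            cmd += ["--conf", f"{key}={value}"]
    cmd.append(app)
    return cmd


# Hypothetical job script and jar location.
cpu = spark_submit_cmd("etl_job.py", use_gpu=False)
gpu = spark_submit_cmd("etl_job.py", use_gpu=True, rapids_jar="/opt/rapids/rapids-4-spark.jar")
print(shlex.join(cpu))
print(shlex.join(gpu))
```

The plug-in swaps supported SQL and DataFrame operations for GPU versions and leaves unsupported ones on the CPU. That is why the application code itself doesn't need to change.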

So far, separate teams have found their own gains across three different use cases; other teams have plans to run tests on their workloads, too.

Dabholkar is optimistic business units will take their test results to production systems.

“We are a telecom company with all sorts of datasets processing petabytes of data daily, and this can significantly improve our savings,” he said.

Other users, including the U.S. Internal Revenue Service, are on a similar journey. It’s a path many will take, given that Apache Spark is used by more than 13,000 companies, including 80 percent of the Fortune 500.

Register free for GTC to hear AT&T’s Chris Vo talk about his work, learn more about data science in GTC’s sessions, and hear NVIDIA CEO Jensen Huang’s keynote.