CUDA is a parallel computing platform and programming model created by NVIDIA. With more than 20 million downloads to date, CUDA helps developers speed up their applications by harnessing the power of GPU accelerators.

In addition to accelerating high performance computing (HPC) and research applications, CUDA has also been widely adopted across consumer and industrial ecosystems.

For example, pharmaceutical companies use CUDA to discover promising new treatments. In cars, CUDA helps power autonomous driving. Both brick-and-mortar and online stores use CUDA to analyze customer purchases and buyer data to make recommendations and place ads.

So, What Is CUDA?

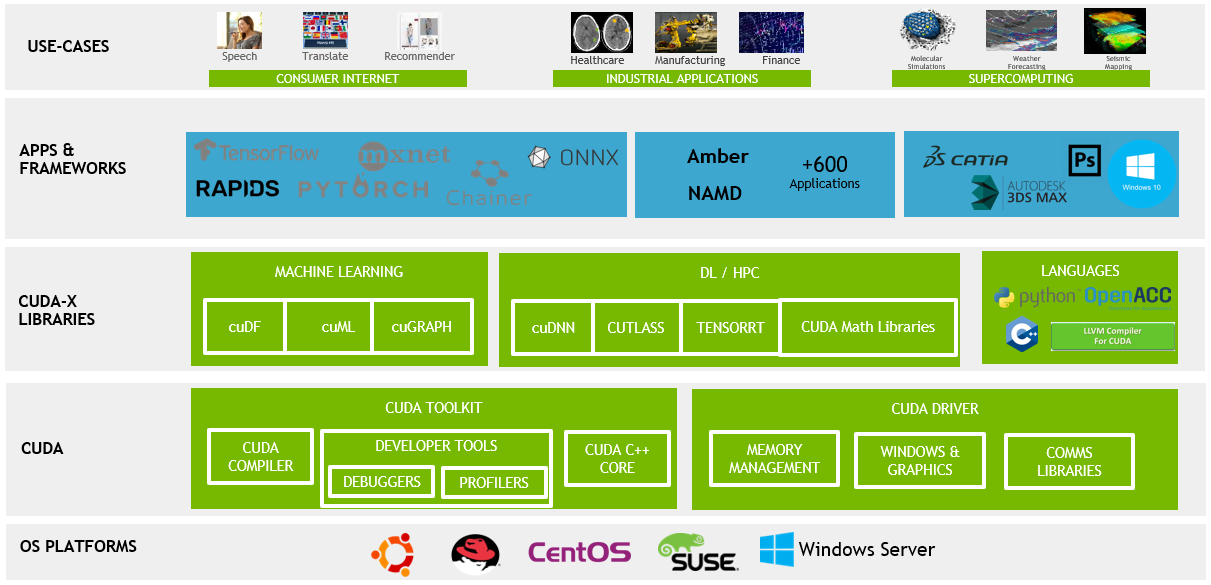

Some people mistake CUDA, launched in 2006, for a programming language or perhaps an API. With over 150 CUDA-based libraries, SDKs, and profiling and optimization tools, it represents far more than that.

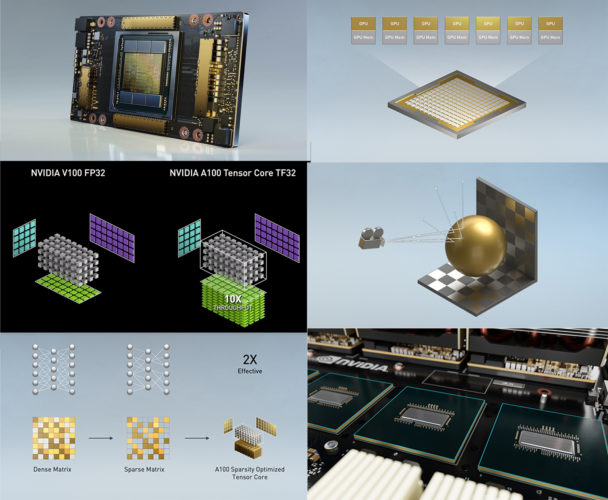

We’re constantly innovating. Thousands of GPU-accelerated applications are built on the NVIDIA CUDA parallel computing platform. The flexibility and programmability of CUDA have made it the platform of choice for researching and deploying new deep learning and parallel computing algorithms.

CUDA also makes it easy for developers to take advantage of all the latest GPU architecture innovations, such as those found in our most recent NVIDIA Ampere GPU architecture.

How Do You Use CUDA?

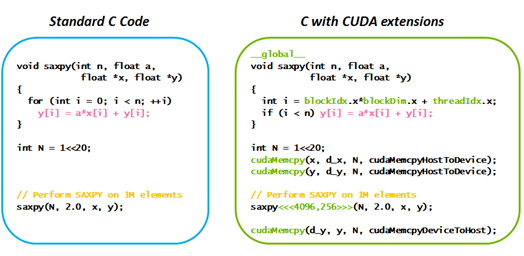

With CUDA, developers write programs using an ever-expanding list of supported languages that includes C, C++, Fortran, Python and MATLAB, and incorporate extensions to these languages in the form of a few basic keywords.

These keywords let the developer express massive amounts of parallelism and direct the compiler (or interpreter) to those portions of the application that map to GPU accelerators.

The simple example below shows how a standard C program can be accelerated using CUDA.
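A minimal sketch of such a conversion, assuming SAXPY (y = a*x + y) as the workload. The function names `saxpy_cpu`, `saxpy_kernel`, and `saxpy_gpu` are hypothetical and chosen for illustration. The standard C loop becomes a kernel marked with the `__global__` keyword, each GPU thread computes one element using the built-in `blockIdx`, `blockDim`, and `threadIdx` variables, and the host launches the kernel with the `<<<blocks, threads>>>` syntax.

```cuda
#include <cuda_runtime.h>

// Standard C version: a single CPU thread walks the whole array.
void saxpy_cpu(int n, float a, const float *x, float *y)
{
    for (int i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}

// CUDA version: __global__ marks a kernel that runs on the GPU.
// Each thread computes the one element at its global index.
__global__ void saxpy_kernel(int n, float a, const float *x, float *y)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)  // the last block may contain more threads than elements
        y[i] = a * x[i] + y[i];
}

// Hypothetical host wrapper: copies the inputs to GPU memory, launches the
// kernel, and copies the result back. Returns cudaSuccess on success.
cudaError_t saxpy_gpu(int n, float a, const float *x, float *y)
{
    if (n <= 0)
        return cudaSuccess;  // nothing to do; also avoids a zero-block launch

    size_t bytes = (size_t)n * sizeof(float);
    float *d_x = NULL, *d_y = NULL;

    cudaError_t err = cudaMalloc((void **)&d_x, bytes);
    if (err == cudaSuccess) err = cudaMalloc((void **)&d_y, bytes);
    if (err == cudaSuccess) err = cudaMemcpy(d_x, x, bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemcpy(d_y, y, bytes, cudaMemcpyHostToDevice);

    if (err == cudaSuccess) {
        int threads = 256;                         // threads per block
        int blocks = (n + threads - 1) / threads;  // round up to cover all n elements
        saxpy_kernel<<<blocks, threads>>>(n, a, d_x, d_y);
        err = cudaGetLastError();                  // reports launch errors
    }

    // This blocking copy waits for the kernel to finish before reading d_y.
    if (err == cudaSuccess) err = cudaMemcpy(y, d_y, bytes, cudaMemcpyDeviceToHost);

    cudaFree(d_x);  // cudaFree(NULL) is a no-op
    cudaFree(d_y);
    return err;
}
```

Compiled with `nvcc` (for example `nvcc -o saxpy saxpy.cu`, where the file name is illustrative), a call such as `saxpy_gpu(n, 2.0f, x, y)` should leave `y` holding the same values that `saxpy_cpu(n, 2.0f, x, y)` would produce. The 256 threads per block is a common starting point rather than a tuned value; rounding the block count up and guarding with `if (i < n)` lets the same launch handle any `n`.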

Getting Started with CUDA

Learning how to program using the CUDA parallel programming model is easy. There are videos and self-study exercises on the NVIDIA Developer website.

The CUDA Toolkit includes GPU-accelerated libraries, a compiler, development tools and the CUDA runtime. In addition to toolkits for C, C++ and Fortran, there are tons of libraries optimized for GPUs and other programming approaches such as the OpenACC directive-based compilers.
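Often a GPU-accelerated library can replace a hand-written kernel entirely. As a sketch, assuming the cuBLAS library from the CUDA Toolkit is installed and the program is linked with `-lcublas`, a SAXPY operation (y = a*x + y) can be delegated to `cublasSaxpy`. The wrapper name `saxpy_cublas` is hypothetical; the cuBLAS calls are the library's standard v2 API.

```cuda
#include <cuda_runtime.h>
#include <cublas_v2.h>

// Hypothetical helper: computes y = a*x + y on the GPU with cuBLAS instead
// of a hand-written kernel. Returns 0 on success, -1 on any failure.
int saxpy_cublas(int n, float a, const float *x, float *y)
{
    if (n <= 0)
        return 0;

    size_t bytes = (size_t)n * sizeof(float);
    float *d_x = NULL, *d_y = NULL;
    cublasHandle_t handle = NULL;

    // Allocate device buffers and create the cuBLAS context.
    bool ok = cudaMalloc((void **)&d_x, bytes) == cudaSuccess &&
              cudaMalloc((void **)&d_y, bytes) == cudaSuccess &&
              cublasCreate(&handle) == CUBLAS_STATUS_SUCCESS;

    // Copy the host vectors to device memory (stride 1 on both sides).
    ok = ok && cublasSetVector(n, sizeof(float), x, 1, d_x, 1) == CUBLAS_STATUS_SUCCESS
            && cublasSetVector(n, sizeof(float), y, 1, d_y, 1) == CUBLAS_STATUS_SUCCESS;

    // alpha is passed by pointer; in the default pointer mode cuBLAS reads it from host memory.
    ok = ok && cublasSaxpy(handle, n, &a, d_x, 1, d_y, 1) == CUBLAS_STATUS_SUCCESS;

    // Copy the result back into the caller's host array.
    ok = ok && cublasGetVector(n, sizeof(float), d_y, 1, y, 1) == CUBLAS_STATUS_SUCCESS;

    if (handle)
        cublasDestroy(handle);
    cudaFree(d_x);  // cudaFree(NULL) is a no-op
    cudaFree(d_y);
    return ok ? 0 : -1;
}
```

Built with something like `nvcc saxpy_cublas.cu -lcublas` (file name illustrative), the library chooses the launch configuration itself, which is the main appeal of using a tuned library over a custom kernel for standard operations.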

To boost performance across multiple application domains from AI to HPC, developers can harness NVIDIA CUDA-X — a collection of libraries, tools and technologies built on top of CUDA.

Check it out, and share how you’re using CUDA to advance your work.

Looking for a crash course in AI? Check out this overview of AI, deep learning and GPU-accelerated applications.