Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series, which takes an engineering-focused look at individual autonomous vehicle challenges and how NVIDIA DRIVE addresses them. Catch up on our earlier posts, here.

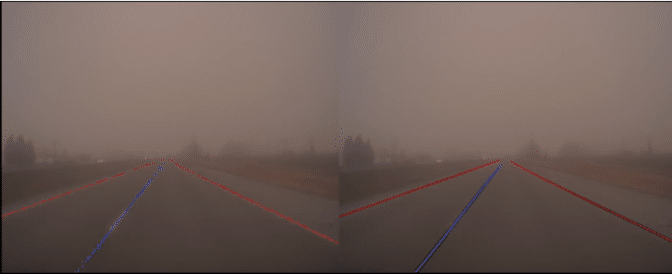

Lane markings are critical guides for autonomous vehicles, providing vital context for where they are and where they’re going. That’s why detecting them with pixel-level precision is fundamentally important for self-driving cars.

To begin with, AVs need long lane detection range — which means the AV system needs to perceive lanes at long distances from the ego car, or the vehicle in which the perception algorithms are operating. Detecting more lane line pixels near the horizon in the image adds tens of meters to lane-detection range in real life.

Moreover, a lane detection solution must be robust — during autonomous lane keeping, missed or flickering lane line detections can cause the vehicle to drift out of its lane. Pixel-level redundancy helps mitigate missed or flickering detections.

Deep neural network processing has emerged as an important AI-based technique for lane detection. Using this approach, humans label high-resolution camera images of lanes and lane edges. These images are used to train a convolutional DNN model to recognize lane lines in previously unseen data.

Preserving Precision in Convolutional DNNs

However, with convolutional DNNs, an inevitable loss of image resolution occurs at the DNN output. While input images may have high resolution, it’s largely lost as they are incrementally down-sampled as the convolutional DNN processes them.

As a result, individual pixels that precisely denoted lane lines and lane edges in the high-resolution input image become blurred at the DNN output. Critical spatial information for inferring the lane line/edge with high accuracy and precision becomes lost.

NVIDIA’s high-precision LaneNet solution encodes ground truth image data in a way that preserves high-resolution information during convolutional DNN processing. The encoding is designed to create enough redundancy for rich spatial information to not be lost during the downsampling process inherent to convolutional DNNs. The key benefits of high-precision LaneNet include increased lane detection range, increased lane edge precision/recall, and increased lane detection robustness.

The high-precision LaneNet approach also enables us to preserve rich information available in high-resolution images while leveraging lower-resolution image processing. This results in more efficient computation for in-car inference.

With such high-precision lane detection, autonomous vehicles can better locate themselves in space, as well as better perceive and plan a safe path forward.