The NVIDIA Deep Learning Institute (DLI) is launching three new courses, available for the first time at the GPU Technology Conference (GTC) next month.

The new instructor-led workshops cover fundamentals of deep learning, recommender systems and Transformer-based applications. Anyone with an internet connection can join for a nominal fee, and each participant gets access to a fully configured, GPU-accelerated server in the cloud.

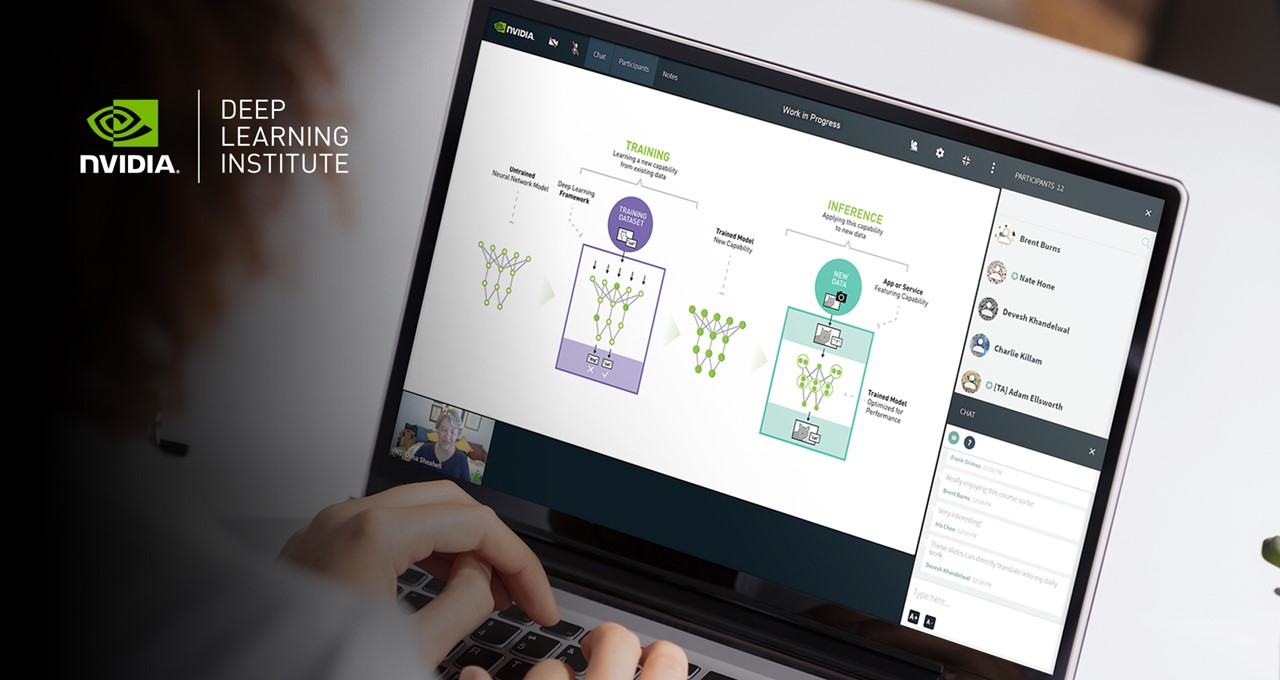

DLI instructor-led trainings consist of hands-on remote learning taught by NVIDIA-certified experts in virtual classrooms. Participants can interact with their instructors and peers in real time. They can whiteboard ideas, tackle interactive coding challenges and earn a DLI certificate of subject competency to support their professional growth.

DLI at GTC is offered globally, with several courses available in Korean, Japanese and Simplified Chinese for attendees in their respective time zones.

New DLI workshops launching at GTC include:

- Fundamentals of Deep Learning — Build the confidence to take on a deep learning project by learning how to train a model, work with common data types and model architectures, use transfer learning between models, and more.

- Building Intelligent Recommender Systems — Create different types of recommender systems: content-based, collaborative filtering, hybrid, and more. Learn how to use the open-source cuDF library, Apache Arrow, alternating least squares, CuPy and TensorFlow 2 to do so.

- Building Transformer-Based Natural Language Processing Applications — Learn about NLP topics like Word2Vec and recurrent neural network-based embeddings, as well as Transformer architecture features and how to improve them. Use pre-trained NLP models for text classification, named-entity recognition and question answering, and deploy refined models for live applications.
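As a rough illustration of one of the recommender types named in the list above, the sketch below shows item-based collaborative filtering in plain Python. It is not taken from the DLI course material: the `ratings` data, the function names and the similarity-weighted scoring rule are all assumptions chosen for brevity. The workshop itself works with GPU libraries such as cuDF and CuPy rather than standard-library code.

```python
from math import sqrt

# Hypothetical toy ratings (user -> {item: rating}); not data from any DLI course.
ratings = {
    "ana": {"A": 5.0, "B": 4.0, "C": 1.0},
    "ben": {"A": 4.0, "B": 5.0},
    "cy": {"A": 1.0, "C": 5.0, "D": 4.0},
    "dee": {"C": 4.0, "D": 5.0},
}


def item_vectors(ratings):
    """Invert user -> item ratings into item -> user rating vectors."""
    items = {}
    for user, user_ratings in ratings.items():
        for item, score in user_ratings.items():
            items.setdefault(item, {})[user] = score
    return items


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    shared = set(u) & set(v)
    dot = sum(u[k] * v[k] for k in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(user, ratings, top_n=2):
    """Rank unseen items by a similarity-weighted average of the user's ratings."""
    items = item_vectors(ratings)
    seen = ratings[user]
    scores = {}
    for candidate, vec in items.items():
        if candidate in seen:
            continue  # only recommend items the user has not rated
        weighted = 0.0
        total_sim = 0.0
        for item, score in seen.items():
            sim = cosine(vec, items[item])
            weighted += sim * score
            total_sim += sim
        if total_sim > 0:
            scores[candidate] = weighted / total_sim
    return sorted(scores, key=scores.get, reverse=True)[:top_n]
```

With this toy data, `recommend("ben", ratings)` ranks item `C` ahead of item `D`, because `C` shares raters with both items that `ben` has already scored. A content-based recommender would instead compare item attributes, and a hybrid would combine the two signals.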

Other DLI offerings at GTC will include:

- Fundamentals of Accelerated Computing with CUDA Python — Dive into how to use Numba to compile NVIDIA CUDA kernels from NumPy universal functions, as well as create and launch custom CUDA kernels, while applying key GPU memory management techniques.

- Applications of AI for Predictive Maintenance — Apply predictive maintenance and anomaly identification to manage failures and avoid costly unplanned downtime, use time-series data to predict outcomes with XGBoost-based machine learning classification models, and more.

- Fundamentals of Accelerated Data Science with RAPIDS — Learn how to use cuDF and Dask to ingest and manipulate large datasets directly on the GPU, and apply GPU-accelerated machine learning tools including XGBoost, cuGraph and cuML to perform data analysis at massive scale.

- Fundamentals of Accelerated Computing with CUDA C/C++ — Find out how to accelerate CPU-only applications by running their latent parallelism on GPUs, and use essential CUDA memory management techniques to optimize the accelerated applications.

- Fundamentals of Deep Learning for Multi-GPUs — Scale deep learning training to multiple GPUs, significantly shortening the time required to train on large amounts of data and making it feasible to solve complex problems with deep learning.

- Applications of AI for Anomaly Detection — Discover how to implement multiple AI-based solutions to identify network intrusions, using accelerated XGBoost, deep learning-based autoencoders and generative adversarial networks.

With more than 2 million registered NVIDIA developers working on technological breakthroughs to solve the world’s toughest problems, the demand for deep learning expertise is greater than ever. The full DLI course catalog includes a variety of topics for anyone interested in learning more about AI, accelerated computing and data science.


Workshops have limited seating, and the early bird deadline is Sept. 25. Register now.