Banks use AI to determine whether to extend credit, and how much, to customers. Radiology departments deploy AI to help distinguish between healthy tissue and tumors. And HR teams employ it to work out which of hundreds of resumes should be sent on to recruiters.

These are just a few examples of how AI is being adopted across industries. And with so much at stake, businesses and governments adopting AI and machine learning are increasingly being pressed to lift the veil on how their AI models make decisions.

Charles Elkan, a managing director at Goldman Sachs, offers a sharp analogy for the current state of AI, in which organizations debate its trustworthiness and how to overcome objections to AI systems:

We don’t understand exactly how a bomb-sniffing dog does its job, but we place a lot of trust in the decisions they make.

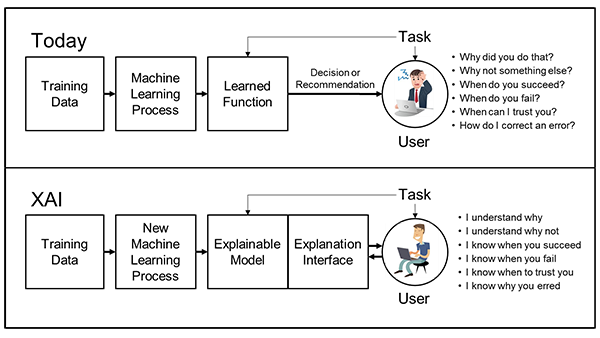

To reach a better understanding of how AI models come to their decisions, organizations are turning to explainable artificial intelligence (XAI).

What Is Explainable AI?

Explainable AI, also abbreviated as XAI, is a set of tools and techniques used by organizations to help people better understand why a model makes certain decisions and how it works. XAI is:

- A set of best practices: It takes advantage of some of the best procedures and rules that data scientists have been using for years to help others understand how a model is trained. Knowing how, and on what data, a model was trained helps us understand when it does and doesn’t make sense to use that model. It also shines a light on what sources of bias the model might have been exposed to.

- A set of design principles: Researchers are increasingly focused on simplifying how AI systems are built, to make them inherently easier to understand.

- A set of tools: As systems get easier to understand, models can be further refined by incorporating those learnings into their training, and by offering those learnings to others for incorporation into their own models.

How Does Explainable AI Work?

While there’s still a great deal of debate over standardizing XAI processes, a few key questions resonate across the industries implementing it:

- Who do we have to explain the model to?

- How accurate or precise an explanation do we need?

- Do we need to explain the overall model or a particular decision?

Data scientists are focusing on all these questions, but explainability boils down to: What are we trying to explain?

Explaining the pedigree of the model:

- How was the model trained?

- What data was used?

- How was the impact of any bias in the training data measured and mitigated?

These questions are the data science equivalent of explaining what school your surgeon went to — along with who their teachers were, what they studied and what grades they got. Getting this right is more about process and leaving a paper trail than it is about pure AI, but it’s critical to establishing trust in a model.

While explaining a model’s pedigree sounds fairly easy, it’s hard in practice, as many tools currently don’t support robust collection of this information. NVIDIA provides such information about its pretrained models, which are shared on the NGC catalog, a hub of GPU-optimized AI and high performance computing SDKs and models that help businesses build their applications quickly.
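One lightweight way to leave that paper trail is a structured record stored alongside the model. The sketch below is illustrative only: the `ModelPedigree` fields, the model and dataset names, and the mitigation note are hypothetical. The bias check shown (the gap in positive-label rates between two groups in the training data) is just one of many possible measures.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelPedigree:
    """Hypothetical provenance record answering the three pedigree questions."""
    model_name: str
    training_procedure: str                                      # How was the model trained?
    training_data: list[str]                                     # What data was used?
    bias_checks: dict[str, float] = field(default_factory=dict)  # How was bias measured...
    mitigations: list[str] = field(default_factory=list)         # ...and mitigated?


def positive_rate_gap(labels, groups, group_a, group_b):
    """Difference in positive-label rates between two groups in the training data."""
    def rate(group):
        selected = [y for y, g in zip(labels, groups) if g == group]
        return sum(selected) / len(selected)
    return rate(group_a) - rate(group_b)


# Toy training labels (1 = loan approved) with one group attribute per row.
labels = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

record = ModelPedigree(
    model_name="credit-scorer-v1",                       # hypothetical name
    training_procedure="gradient-boosted trees, 5-fold cross-validation",
    training_data=["loans_2015_2020.csv"],               # hypothetical dataset
)
record.bias_checks["approval_rate_gap_A_minus_B"] = positive_rate_gap(labels, groups, "A", "B")
record.mitigations.append("reweighted training samples to equalize group approval rates")

# Store this next to the model artifact so the paper trail travels with it.
print(json.dumps(asdict(record), indent=2))
```

In the toy labels, group A is approved 75% of the time and group B 25%, so the recorded gap is 0.5. The value of such a record comes less from the specific metric than from the fact that it is captured at training time rather than reconstructed later.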

Explaining the overall model:

Sometimes called model interpretability, this is an active area of research. Most model explanations fall into one of two camps:

The first is a technique sometimes called “proxy modeling,” in which simpler, more easily comprehended models like decision trees are used to approximately describe the more detailed AI model. These explanations give a “sense” of the model overall, but the tradeoff between how simple the proxy model is and how closely it approximates the original is still more art than science.

Proxy modeling is always an approximation, and even when applied well, it can leave room for real-life decisions to differ substantially from what the proxy model predicts.
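A minimal sketch of proxy modeling, assuming a hypothetical two-feature `black_box` function in place of a real AI model. The proxy here is a one-split decision tree (a decision stump) trained on the black box's outputs rather than on ground-truth labels, and its fidelity (R², the share of the black box's variance the proxy reproduces) makes the approximation tradeoff visible. It is written in plain Python to stay self-contained; a real project would more likely use a library tree implementation.

```python
import math
import random


def black_box(x1, x2):
    """Hypothetical stand-in for an opaque model: returns an approval probability."""
    return 1 / (1 + math.exp(-(3 * x1 - 2 * x2 + 0.5 * x1 * x2)))


def fit_stump(points, targets):
    """Fit a depth-1 decision tree (one split) that minimizes squared error."""
    best = None
    for feature in range(len(points[0])):
        for threshold in sorted({p[feature] for p in points}):
            left = [t for p, t in zip(points, targets) if p[feature] <= threshold]
            right = [t for p, t in zip(points, targets) if p[feature] > threshold]
            if not left or not right:
                continue
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            error = (sum((t - left_mean) ** 2 for t in left)
                     + sum((t - right_mean) ** 2 for t in right))
            if best is None or error < best[0]:
                best = (error, feature, threshold, left_mean, right_mean)
    _, feature, threshold, left_mean, right_mean = best
    return feature, threshold, left_mean, right_mean


random.seed(0)
points = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(200)]
# The proxy learns to mimic the black box's outputs, not the real-world labels.
targets = [black_box(x1, x2) for x1, x2 in points]

feature, threshold, left_value, right_value = fit_stump(points, targets)


def proxy(p):
    """The human-readable proxy: a single if/else rule."""
    return left_value if p[feature] <= threshold else right_value


# Fidelity: how much of the black box's variance the proxy reproduces (R^2).
mean_t = sum(targets) / len(targets)
ss_res = sum((t - proxy(p)) ** 2 for p, t in zip(points, targets))
ss_tot = sum((t - mean_t) ** 2 for t in targets)
fidelity = 1 - ss_res / ss_tot
print(f"split on x{feature + 1} at {threshold:.2f}; fidelity R^2 = {fidelity:.2f}")
```

A deeper tree would raise fidelity but lose the one-rule simplicity; any fidelity below 1 marks inputs where the proxy's story and the real model's decision can diverge.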

The second approach is “design for interpretability.” This limits the design and training options of the AI network in ways that attempt to assemble the overall network out of smaller parts that we force to have simpler behavior. This can lead to models that are still powerful, but with behavior that’s much easier to explain.

This isn’t as easy as it sounds, however, and it sacrifices some level of efficiency and accuracy by removing components and structures from the data scientist’s toolbox. This approach may also require significantly more computational power.
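One common design-for-interpretability choice (the article doesn't name a specific architecture) is a generalized additive model: each feature passes through its own one-dimensional shape function, and the prediction is simply their sum, so every feature's contribution can be read off directly. In the sketch below, the feature names, shape functions, and bias term are hypothetical hand-written stand-ins for what would normally be learned from data.

```python
# Each feature gets its own simple shape function; the model is their sum.
# These functions are hypothetical, hand-written stand-ins for learned ones.
def income_term(income_k):
    """Annual income in thousands: rises with income, capped at 150."""
    return min(income_k, 150) / 50.0 - 1.0


def debt_ratio_term(ratio):
    """Debt-to-income ratio: penalized only above 30%."""
    return -3.0 * max(ratio - 0.3, 0.0)


def late_payments_term(count):
    """Each late payment lowers the score by a fixed amount."""
    return -0.5 * count


SHAPE_FUNCTIONS = {
    "income_k": income_term,
    "debt_ratio": debt_ratio_term,
    "late_payments": late_payments_term,
}
BIAS = 0.2


def score_with_explanation(applicant):
    """Return the score (higher favors approval) and each feature's exact contribution."""
    contributions = {name: f(applicant[name]) for name, f in SHAPE_FUNCTIONS.items()}
    return BIAS + sum(contributions.values()), contributions


score, contributions = score_with_explanation(
    {"income_k": 75, "debt_ratio": 0.5, "late_payments": 2}
)
print(round(score, 3), {k: round(v, 3) for k, v in contributions.items()})
```

Because the score is an exact sum of per-feature terms, an explanation such as "two late payments cost 1.0 points" comes straight from the model's structure rather than from a separate approximation. The price is that interactions between features can't be represented unless they are added explicitly, which is one form of the accuracy tradeoff described above.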

Why XAI Explains Individual Decisions Best

The best understood area of XAI is individual decision-making: why a person didn’t get approved for a loan, for instance.

Techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) offer very literal mathematical answers to this question, and the results of that math can be presented to data scientists, managers, regulators and consumers. For some data, such as images, audio and text, similar results can be visualized through the use of “attention” in the models, which forces the model itself to show its work.
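The idea behind LIME can be sketched in a few lines: sample perturbed inputs near the decision being explained, weight each sample by its proximity, and fit a weighted linear model whose coefficients serve as the local explanation. This is a simplified illustration, not the `lime` package's API; the `black_box` model, the Gaussian perturbation scale, and the exponential kernel width are assumptions chosen for the example.

```python
import math
import random


def black_box(x1, x2):
    """Hypothetical opaque model whose individual decision we want to explain."""
    return 1 / (1 + math.exp(-(3 * x1 - 2 * x2 + 0.5 * x1 * x2)))


def solve(a, b):
    """Solve the small linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(f, x, num_samples=500, scale=0.3, kernel_width=0.5, seed=0):
    """LIME-style local surrogate: perturb around x, weight by proximity, fit weighted least squares."""
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(num_samples):
        z = [xi + rng.gauss(0, scale) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # nearby samples count more
        row = [1.0] + z                                # intercept term + features
        y = f(*z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[1:]  # per-feature local slopes; coef[0] is the intercept


weights = lime_explain(black_box, [0.2, -0.1])
print(f"local effect of x1: {weights[0]:+.2f}, local effect of x2: {weights[1]:+.2f}")
```

The coefficients describe the model only in the neighborhood of this one input; explaining a different applicant means running the procedure again around that applicant's values.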

In the case of the Shapley values used in SHAP, the underlying technique rests on game theory work done in the 1950s, which comes with mathematical proofs of properties that make it particularly attractive. There is active research in using these explanations of individual decisions to explain the model as a whole, mostly focusing on clustering and on imposing various smoothness constraints on the underlying math.
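A minimal sketch of exact Shapley values, assuming a hypothetical three-feature `loan_model` and treating a feature as "absent" by replacing it with a fixed baseline value (SHAP implementations typically average over a background dataset instead). Each feature's value is its marginal contribution averaged over every coalition of the other features, weighted as in Shapley's formula. One of the proven properties is visible in the result: the values sum to the model's output minus its output at the baseline.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values for f at x; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def loan_model(z):
    """Hypothetical scoring model with an interaction between features 0 and 2."""
    return 2 * z[0] - z[1] + z[0] * z[2]


x = [1, 2, 3]
baseline = [0, 0, 0]
phi = shapley_values(loan_model, x, baseline)
print([round(v, 6) for v in phi])  # → [3.5, -2.0, 1.5]
```

The interaction term's contribution of 3 is split evenly between features 0 and 2, and the values sum to 3, which equals `loan_model(x) - loan_model(baseline)`. Note that the inner loops visit every subset of the other features, so the cost grows exponentially with the number of features.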

The drawback to these techniques is that they’re somewhat computationally expensive. In addition, without significant effort during the training of the model, the results can be very sensitive to the input data values. Some also argue that because data scientists can only calculate approximate Shapley values, the attractive and provable features of these numbers are also only approximate — sharply reducing their value.
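To see why practical Shapley values are approximate, consider a common Monte Carlo alternative to the exact, exponential-cost computation: average each feature's marginal contribution over randomly sampled feature orderings. The sketch assumes the same kind of hypothetical `loan_model` and a fixed zero baseline; it is one standard estimator, not necessarily the one any particular SHAP explainer uses.

```python
import random


def loan_model(z):
    """Hypothetical scoring model with an interaction between features 0 and 2."""
    return 2 * z[0] - z[1] + z[0] * z[2]


def shapley_permutation_estimate(f, x, baseline, num_permutations, seed=0):
    """Approximate Shapley values by averaging marginal contributions over random orderings."""
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    for _ in range(num_permutations):
        order = list(range(n))
        rng.shuffle(order)
        z = list(baseline)  # start with every feature "absent"
        prev = f(z)
        for i in order:
            z[i] = x[i]     # add feature i to the coalition
            curr = f(z)
            phi[i] += curr - prev
            prev = curr
    return [p / num_permutations for p in phi]


# Exact Shapley values for this model, input and baseline are [3.5, -2.0, 1.5].
x, baseline = [1, 2, 3], [0, 0, 0]
for m in (10, 10_000):
    estimate = shapley_permutation_estimate(loan_model, x, baseline, num_permutations=m)
    print(m, [round(v, 3) for v in estimate])
```

With few orderings, the estimates for the two interacting features can be noticeably off, while the non-interacting feature is always exact; the error shrinks only as more orderings (and more model evaluations) are spent, which is the accuracy versus cost tension described above.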

While healthy debate remains, it’s clear that AI models can be built with clearly understood behaviors by maintaining a proper model pedigree, adopting a model explainability method that gives senior leadership clarity on the risks involved in the model, and monitoring actual outcomes with individual explanations.

For a closer look at examples of XAI work, check out the talks presented by Wells Fargo and Scotiabank at NVIDIA GTC21.

Learn more about data science on the NVIDIA Technical Blog.