Talk about a bright idea. A team of scientists has used GPU-accelerated deep learning to show how color can be brought to night-vision systems.

In a paper published this week in the journal PLOS One, a team of researchers at the University of California, Irvine, led by Professor Pierre Baldi and Dr. Andrew Browne, describes how they reconstructed color images of photos of faces captured with an infrared camera.

The study is a step toward predicting and reconstructing what humans would see from cameras that collect light under near-infrared illumination, which is imperceptible to the eye.

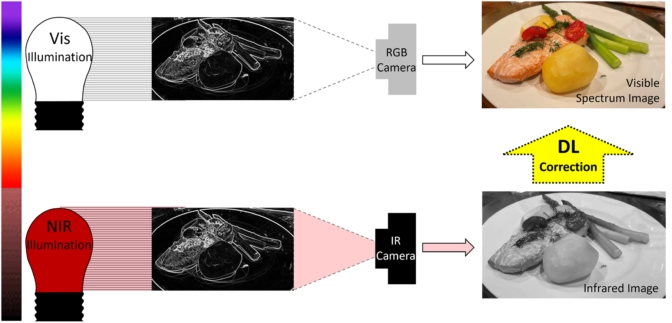

The study’s authors explain that humans see light in the so-called “visible spectrum,” or light with wavelengths between 400 and 700 nanometers.

Typical night vision systems rely on cameras that collect infrared light, which lies outside this spectrum and is invisible to us.

Information gathered by these cameras is then transposed to a display that shows a monochromatic representation of what the infrared camera detects, the researchers explain.

The team at UC Irvine developed an imaging algorithm that relies on deep learning to predict what humans would see using light captured by an infrared camera.

In other words, they’re able to digitally render a scene for humans using cameras operating in what, to humans, would be complete “darkness.”

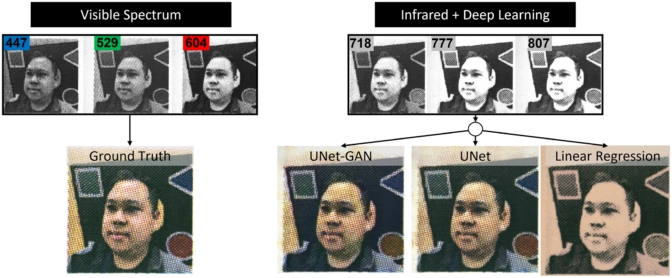

To do this, the researchers used a monochromatic camera sensitive to visible and near-infrared light to acquire an image dataset of printed images of faces.

These images were gathered under multispectral illumination spanning standard visible red, green, blue and infrared wavelengths.

The researchers then optimized a convolutional neural network with a U-Net-like architecture — a specialized convolutional neural network first developed for biomedical image segmentation at the Computer Science Department of the University of Freiburg — to predict visible spectrum images from near-infrared images.
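To make the U-Net idea concrete, here is a minimal Python sketch that traces feature-map shapes through a U-Net-like encoder and decoder. It is not the researchers' network: the function name `unet_shape_trace`, the three input channels, the 256-pixel image size, the base width of 16 and the depth of 3 are all illustrative assumptions. What it shows is the general pattern: resolution is halved on the way down, doubled on the way back up, and each decoder level concatenates the matching encoder output (the "skip connection").

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def unet_shape_trace(
    in_channels: int = 3,    # assumed number of near-infrared input channels
    out_channels: int = 3,   # predicted red, green and blue channels
    size: int = 256,         # assumed square image size in pixels
    base_width: int = 16,    # assumed channel count at the first level
    depth: int = 3,          # assumed number of down/up-sampling levels
) -> List[Tuple[str, Shape]]:
    """Return (stage name, shape) pairs for a U-Net-like layout."""
    if size % (2 ** depth) != 0:
        raise ValueError("size must be divisible by 2**depth")

    trace: List[Tuple[str, Shape]] = [("input (NIR)", (in_channels, size, size))]
    skips: List[Tuple[int, int]] = []
    channels, res = base_width, size

    # Encoder: convolve, remember the result for the skip connection,
    # then halve the resolution and double the channel count.
    for level in range(depth):
        trace.append((f"enc{level} conv", (channels, res, res)))
        skips.append((channels, res))
        res //= 2
        trace.append((f"enc{level} pool", (channels, res, res)))
        channels *= 2

    trace.append(("bottleneck conv", (channels, res, res)))

    # Decoder: upsample, concatenate the saved encoder features,
    # then convolve back down to the level's channel count.
    for level in reversed(range(depth)):
        skip_channels, skip_res = skips.pop()
        res *= 2
        channels //= 2
        assert res == skip_res  # skip and upsampled maps must align
        trace.append((f"dec{level} upsample", (channels, res, res)))
        trace.append((f"dec{level} concat skip", (channels + skip_channels, res, res)))
        trace.append((f"dec{level} conv", (channels, res, res)))

    trace.append(("output (RGB)", (out_channels, size, size)))
    return trace


if __name__ == "__main__":
    for name, shape in unet_shape_trace():
        print(f"{name:20s} {shape}")
```

The skip connections are what let a network of this kind keep fine spatial detail, such as the edges of a face, while the deeper layers work out which colors belong where.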

The system was trained on NVIDIA GPUs using 200 images of human faces: 140 for training, 40 for validation and 20 for testing.
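For readers curious what such a split looks like in practice, the sketch below divides 200 items into 140, 40 and 20. The article does not say how the researchers assigned images to each group, so the seeded random shuffle, the function name `split_dataset` and the `face_000`-style identifiers are all assumptions made for illustration.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(
    items: Sequence[str],
    n_train: int = 140,
    n_val: int = 40,
    n_test: int = 20,
    seed: int = 0,  # fixed seed so the split is reproducible (an assumption)
) -> Dict[str, List[str]]:
    """Shuffle items and cut them into train, validation and test subsets."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must add up to the number of items")

    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)

    return {
        "train": shuffled[:n_train],
        "val": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],
    }


# Hypothetical image identifiers standing in for the face images.
image_ids = [f"face_{i:03d}" for i in range(200)]
splits = split_dataset(image_ids)

if __name__ == "__main__":
    for name, subset in splits.items():
        print(name, len(subset))
```

Keeping the three groups disjoint matters: the 20 test images are only a fair measure of how well the network predicts color if it never saw them during training or validation.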

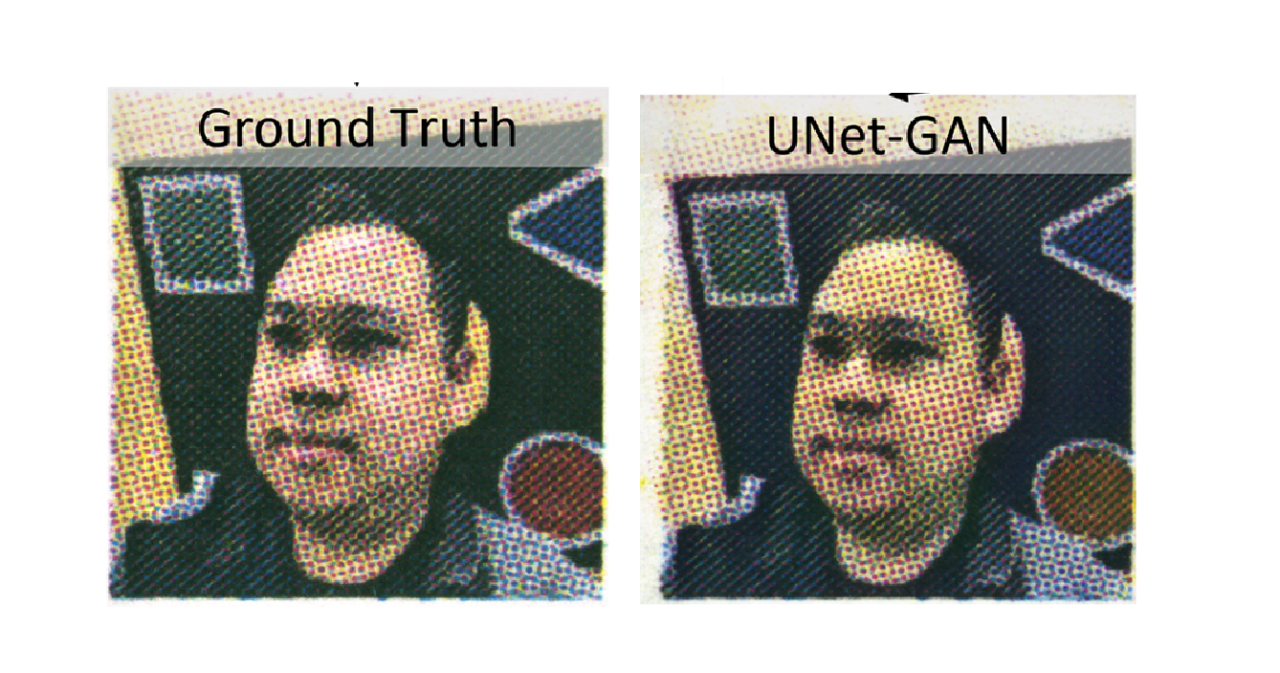

The result: the team successfully recreated color portraits of people taken by an infrared camera in darkened rooms. In other words, they created systems that could “see” color images in the dark.

To be sure, these systems aren’t yet ready for general purpose use. They would need to be trained to predict the color of different kinds of objects, such as flowers or faces.

Nevertheless, the study could one day lead to night vision systems able to see color, just as we do in daylight, or allow scientists to study biological samples sensitive to visible light.

Featured image source: Browne, et al.