Growing up in the Nile delta, Ahmed Elgammal dreamed of becoming an archaeologist or art historian. He never imagined he’d wind up in a concert hall in Bonn listening to the premiere of Beethoven’s Tenth, a symphony he helped finish with AI.

“As a boy in Alexandria, I had a passion for art, but I was good at math. When it came time to pick a major in college, people said if I chose a career in art I’d have no money, so I went into computer science,” said Elgammal, now a professor at Rutgers and head of its Art and Artificial Intelligence Laboratory.

“I never gave up my passion for art,” he said, noting his two-year-old startup, Playform, has already helped 25,000 visual artists wade into deep learning.

Getting a Classical Call

About 15 years into his work in computer vision, Elgammal founded the Rutgers lab at the nexus of AI and art.

“I’ve had the privilege to work on some amazing research over the last 10 years doing what I really like — working with art historians, artists and musicians,” he said.

Hearing of Elgammal’s work, the director of a music technology institute in Salzburg asked him in early 2019 to help complete two movements of Beethoven’s Tenth, also known as his Unfinished Symphony, for a celebration of the composer’s 250th birthday.

AI Hears a Symphony

The challenge was enormous. The composer had only sketched out a few brief themes for the work before he died in 1827.

For more than two years, Elgammal worked with musicologists and composers, taking AI in new directions.

Ultimately, machine learning would add harmony to Beethoven’s themes, develop them as the composer would have, bridge one theme to the next, and then help orchestrate the work, assigning parts to different instruments.

“I hadn’t heard all this done before. Some AIs had helped create a few minutes of a string quartet but not a full symphony,” he said.

Language Models Help Beethoven Sing

Given the sequential nature of music, the team adapted transformer models used for natural-language processing.

Ultimately, they created four neural networks. Two models of the kind used in language translation proved best for adding harmony and orchestration, another transformer developed the themes, and a BERT model helped bridge between themes.
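
To make the bridging idea concrete, here is a minimal Python sketch of how the input to a masked language model such as BERT could be laid out: two themes with a run of mask tokens between them for a model to fill in. The note names, the `[MASK]` token and the `build_bridge_input` helper are illustrative assumptions, not the team's actual encoding or code, and no model is trained or called here.

```python
MASK = "[MASK]"  # placeholder token, in the style of masked language models

def build_bridge_input(theme_a, theme_b, gap_len):
    """Return a token sequence with gap_len masked slots between two themes."""
    if gap_len < 0:
        raise ValueError("gap_len must be non-negative")
    return list(theme_a) + [MASK] * gap_len + list(theme_b)

def masked_positions(tokens):
    """Return the indices a model would be asked to predict."""
    return [i for i, tok in enumerate(tokens) if tok == MASK]

# Hypothetical themes, written as note names purely for illustration.
theme_a = ["C4", "E4", "G4"]
theme_b = ["G4", "B4", "D5"]
sequence = build_bridge_input(theme_a, theme_b, gap_len=4)
print(sequence)
print(masked_positions(sequence))
```

A trained model would then propose a note for each masked position, using the themes on both sides as context.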

Since music can be represented as mathematical symbols, the lab’s servers, outfitted with a range of NVIDIA GPUs including a TITAN RTX, could easily handle the task.
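
As a rough illustration of what representing music as symbols can mean, the Python sketch below turns note names into MIDI pitch numbers and pairs each with a duration. The encoding, the function names and the four-note motif are assumptions chosen for clarity; the article does not describe the representation the lab actually used.

```python
# Semitone offsets of the natural notes within an octave.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}

def note_to_midi(name):
    """Convert a name such as 'C4', 'F#3' or 'Eb4' to a MIDI pitch number."""
    letter, rest = name[0], name[1:]
    accidental = 0
    if rest.startswith("#"):
        accidental, rest = 1, rest[1:]
    elif rest.startswith("b"):
        accidental, rest = -1, rest[1:]
    octave = int(rest)
    # Convention assumed here: C4 (middle C) is MIDI pitch 60.
    return 12 * (octave + 1) + NOTE_OFFSETS[letter] + accidental

def encode_melody(notes):
    """Encode (note name, duration in sixteenth notes) pairs as integer pairs."""
    return [(note_to_midi(name), duration) for name, duration in notes]

# A hypothetical four-note motif: three short notes and one long one.
motif = [("G4", 2), ("G4", 2), ("G4", 2), ("Eb4", 8)]
print(encode_melody(motif))
```

Once a score is a sequence of integers like this, it can be fed to the same kinds of sequence models used for text.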

“To watch AI learn from a genius like Beethoven was an amazing experience,” said Elgammal, whose training data included many hours of music from the German master.

Doubters and Devotees

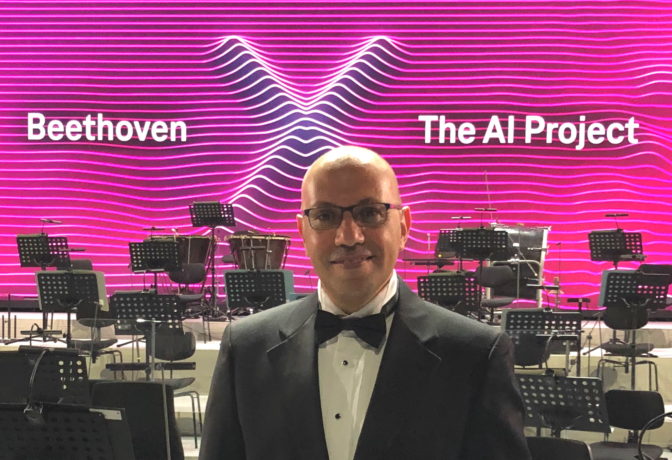

The work was released and premiered on Oct. 9 by the Bonn Beethoven Orchestra in the composer’s hometown. (You can hear a recording online.)

“After listening to this music for two years on my laptop, it was amazing to be in the event and hear it played live,” he said.

Responses spanned the spectrum. Skeptics disliked the mix of art and technology; others felt inspired.

“Some were very enthusiastic with tears in their eyes and goosebumps, and one friend listened to it the whole day, over and over,” he recalled.

Taking Music AI Mainstream

Whatever the response, it was a historic moment in what Elgammal calls computational creativity.

“We imagine in the future we could offer various tools. We worked in classical music, but this could also be relevant to today’s music,” he said.

Early in its work, the team rendered one of Beethoven’s themes in a pop style, just for fun.

Lending Artists a GAN

Much of Elgammal’s work these days is in visual arts. His startup Playform makes the generative adversarial networks (GANs) used in computer vision available as tools for a painter’s palette.

Playform uses data augmentation so artists can train their own AI models with as few as 30 images rather than the tens of thousands that GANs typically require. The service runs on NVIDIA GPUs in a public cloud service.
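
The general idea behind data augmentation can be sketched in a few lines of Python: each source image is expanded into several variants so a small collection yields more training examples. Flips and rotations are a common, simple choice used here as an assumption; the article does not say which augmentations Playform applies, and the images below are tiny lists of numbers rather than real artwork.

```python
def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def vflip(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]

def rot90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def augment(img):
    """Return the image plus flipped and rotated variants, without duplicates."""
    candidates = [
        img,
        hflip(img),
        vflip(img),
        rot90(img),
        rot90(rot90(img)),
        rot90(rot90(rot90(img))),
    ]
    unique = []
    for variant in candidates:
        if variant not in unique:
            unique.append(variant)
    return unique

# A hypothetical 2x2 "image" of pixel values.
image = [[1, 2], [3, 4]]
variants = augment(image)
print(len(variants))
```

With six distinct variants per image, 30 source images would become 180 training examples under this particular scheme.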

“Artists don’t want to use pretrained models, they want their art to be unique, so they won’t use someone else’s GAN model,” he said.

Plans for an Encore

Some artists use Playform to brainstorm, exploring new concepts that inspire what they paint by hand. Others use AI as an assistant, creating digital assets they incorporate in their work.

“We’re adding capabilities like synchronizing music and art for events and AI models trained to make music videos,” he said.

The next Beethoven might start a symphony with a digital flourish.