To remove complexities associated with standing up an NVIDIA GPU cluster, NVIDIA has announced a new reference architecture for cloud providers that want to offer generative AI services to their customers.

The NVIDIA Cloud Partner (NCP) reference architecture is a blueprint for building high-performance, scalable and secure data centers that can handle generative AI and large language models (LLMs).

The reference architecture enables NCPs within the NVIDIA Partner Network to reduce the time and cost of deploying AI solutions, while ensuring compatibility and interoperability among various hardware and software components.

The architecture will also help NCPs meet the growing demand for AI services from organizations of all sizes and industries that want to leverage the power of generative AI and LLMs without investing in their own infrastructure.

Generative AI and LLMs are transforming the way organizations solve complex problems and create new value. These technologies use deep neural networks to generate realistic and novel outputs, such as text, images, audio and video, based on a given input or context. Generative AI and LLMs can be used for a variety of applications, such as copilots, chatbots and other content-creation tools.

However, generative AI and LLMs also pose significant challenges for NCPs, which need to provide the infrastructure and software to support these workloads. The technologies require massive amounts of computing power, storage and network bandwidth, as well as specialized hardware and software to optimize performance and efficiency.

For example, LLM training involves many GPU servers working together, communicating constantly among themselves and with storage systems. This generates both east-west traffic (between GPU servers) and north-south traffic (between servers and storage or external networks) in data centers, which requires high-performance networks for fast and efficient communication.
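
As a rough illustration of why this server-to-server communication is so demanding, the sketch below estimates how much data each GPU sends during one gradient synchronization. It assumes a ring all-reduce, a common collective in data-parallel training that the reference architecture description does not itself specify. The function name, the 7-billion-parameter model and the 16-bit gradients are hypothetical values chosen only for the example.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in one ring all-reduce of `payload_bytes`.

    Assumption: the standard ring algorithm, in which every GPU sends
    2 * (N - 1) / N times the payload (reduce-scatter plus all-gather).
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


# Hypothetical workload: 7 billion parameters with 2-byte (16-bit) gradients.
gradient_bytes = 7e9 * 2

for gpus in (8, 64, 512):
    sent_gb = ring_allreduce_bytes_per_gpu(gradient_bytes, gpus) / 1e9
    print(f"{gpus:>4} GPUs: about {sent_gb:.1f} GB sent per GPU per synchronization")
```

Under these assumptions the per-GPU volume approaches twice the gradient size as the cluster grows, and it recurs on every training step, which is why the GPU-to-GPU network is treated as a first-class design concern.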

Similarly, generative AI inference with larger models needs multiple GPUs to work together to process a single query.
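
A back-of-the-envelope calculation shows why a single query can need more than one GPU. The sketch below counts only the memory required to hold the model weights and ignores activations, the key-value cache and framework overhead, so it gives a lower bound. The function name and the 70-billion-parameter model are hypothetical; the 80 GB figure is used as an example GPU memory capacity.

```python
import math


def min_gpus_for_weights(num_params: float, bytes_per_param: int, gpu_memory_gb: float) -> int:
    """Lower bound on the number of GPUs needed just to hold the model weights."""
    weights_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weights_gb / gpu_memory_gb)


# Hypothetical model: 70 billion parameters stored in 16-bit (2-byte) precision,
# served on GPUs with 80 GB of memory each.
print(min_gpus_for_weights(70e9, 2, 80))  # 140 GB of weights -> prints 2
```

Because the weights alone exceed one GPU's memory in this example, the model has to be split across devices, and those devices must exchange intermediate results for every query.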

Moreover, NCPs need to ensure that their infrastructure is secure, reliable and scalable, as they serve multiple customers with different needs and expectations. NCPs also need to comply with industry standards and best practices, as well as provide support and maintenance for their services.

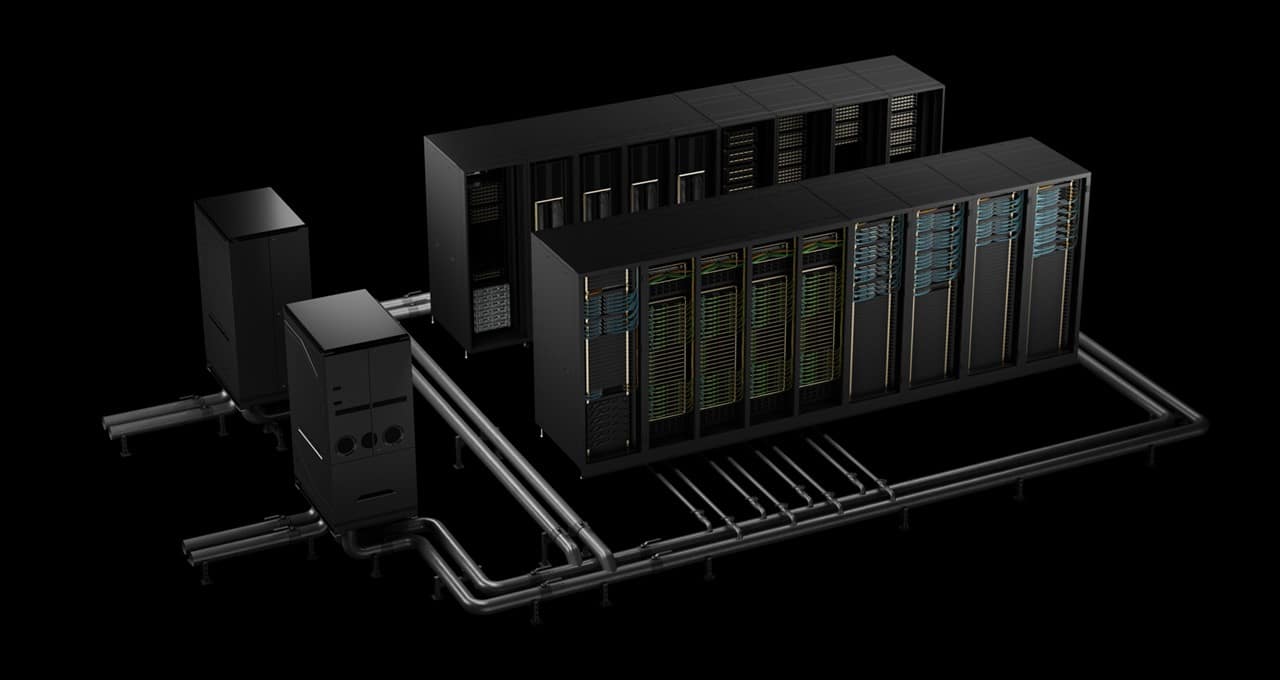

The NCP reference architecture addresses these challenges by providing a comprehensive, full-stack hardware and software solution for cloud providers to offer AI services and workflows for different use cases. Drawing on NVIDIA's years of experience designing and building large-scale deployments, both internally and for customers, the reference architecture includes:

- GPU servers from NVIDIA and its manufacturing partners, featuring NVIDIA’s latest GPU architectures, such as Hopper and Blackwell, which deliver unparalleled compute power and performance for AI workloads.

- Storage offerings from certified partners, which provide high-performance storage optimized for AI and LLM workloads. The offerings also include those tested and validated for NVIDIA DGX SuperPOD and NVIDIA DGX Cloud. They are proven to be reliable, efficient and scalable.

- NVIDIA Quantum-2 InfiniBand and Spectrum-X Ethernet networking, which provide a high-performance east-west network for fast and efficient communication between GPU servers.

- NVIDIA BlueField-3 DPUs, which deliver high-performance north-south network connectivity and enable data storage acceleration, elastic GPU computing and zero-trust security.

- In-band and out-of-band management solutions from NVIDIA and management partners, which provide tools and services for provisioning, monitoring and managing AI data center infrastructure.

- NVIDIA AI Enterprise software, including:

  - Base Command Manager Essentials, which helps cloud providers provision and manage their servers.

  - NeMo framework, which helps cloud providers train and fine-tune generative AI models.

  - NVIDIA NIM, a set of easy-to-use microservices designed to accelerate deployment of generative AI across enterprises.

  - Riva, for speech services.

  - NVIDIA RAPIDS accelerator for managed Spark services.

The NCP reference architecture offers the following key benefits to partners:

- Build, Train and Go: NVIDIA's highly experienced team of infrastructure specialists uses the architecture to physically install and provision the cluster, enabling faster rollouts for cloud providers.

- Speed: By incorporating the expertise and best practices of NVIDIA and its partners, the architecture can help cloud providers accelerate the deployment of AI solutions and gain a competitive edge in the market.

- High Performance: The architecture is tuned and tested against industry-standard benchmarks, ensuring optimal performance for AI workloads.

- Scalability: The architecture is designed for cloud-native environments, facilitating the development of flexible, scalable AI systems that can seamlessly expand to meet increasing end-user demand.

- Interoperability: The architecture ensures compatibility among its various components from NVIDIA and its partners, making integration and communication between components seamless.

- Maintenance and Support: NCPs have access to NVIDIA subject-matter experts, who can help address any unexpected challenges that may arise during and after deployment.

The NCP reference architecture provides a proven blueprint for cloud providers to establish and manage high-performance scalable infrastructure for AI data centers. Both NCPs and customers benefit from the comprehensive guidelines this architecture offers.

Update: In October 2024, NVIDIA announced a new Reference Platform NCP designation for select partners that operate large clusters built in coordination with NVIDIA and adhere to a tested and optimized reference architecture. Reference Platform NCPs may offer the NVIDIA AI Enterprise software platform and easy-to-use NVIDIA NIM microservices to help customers build and deploy AI applications.
