Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and which showcases new hardware, software, tools and accelerations for RTX PC users.

AI continues to raise the bar for PC gaming.

DLSS 3.5 with Ray Reconstruction creates higher-quality ray-traced images for intensive ray-traced games and apps. This advanced AI-powered neural renderer is a groundbreaking feature that elevates ray-traced image quality for all GeForce RTX GPUs, outclassing traditional hand-tuned denoisers by using an AI network trained by an NVIDIA supercomputer. The result improves lighting effects like reflections, global illumination and shadows to create a more immersive, realistic gaming experience.

A Ray of Light

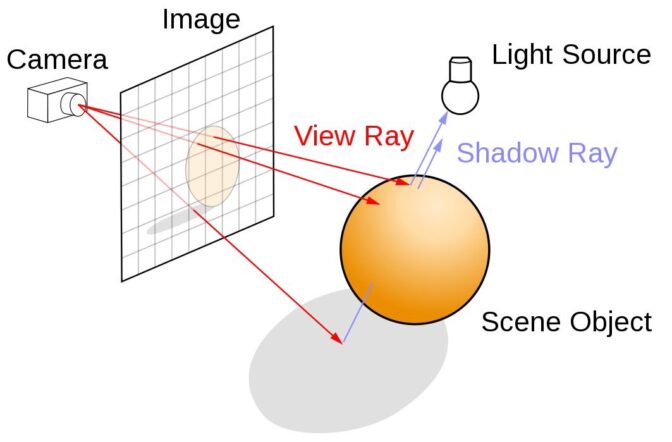

Ray tracing is a rendering technique that can realistically simulate the lighting of a scene and its objects by rendering physically accurate reflections, refractions, shadows and indirect lighting. Ray tracing generates computer graphics images by tracing the path of light from the view camera — which determines the view into the scene — through the 2D viewing plane, out into the 3D scene, and back to the light sources. For instance, if rays strike a mirror, reflections are generated.
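To make the camera-to-scene path concrete, here is a minimal sketch of the first step: building a ray from the camera through one pixel of the 2D viewing plane and testing whether it strikes an object. The function names, the 90-degree field of view and the single-sphere scene are illustrative assumptions, not part of any game engine or NVIDIA API.

```python
import math

def primary_ray(px, py, width, height, fov_deg=90.0):
    """Unit direction of a ray from a camera at the origin through pixel (px, py).

    Assumes the camera looks down the -z axis and the viewing plane sits at z = -1.
    """
    aspect = width / height
    half = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel centre onto the 2D viewing plane.
    x = (2 * (px + 0.5) / width - 1) * half * aspect
    y = (1 - 2 * (py + 0.5) / height) * half
    length = math.sqrt(x * x + y * y + 1)
    return (x / length, y / length, -1 / length)

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of the
    origin, or None if the ray misses (or starts inside the sphere)."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c  # discriminant of the ray/sphere quadratic
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

# Hypothetical scene: one sphere of radius 1, five units in front of the camera.
camera = (0.0, 0.0, 0.0)
centre_hit = hit_sphere(camera, primary_ray(1, 1, 3, 3), (0.0, 0.0, -5.0), 1.0)
corner_hit = hit_sphere(camera, primary_ray(0, 0, 3, 3), (0.0, 0.0, -5.0), 1.0)
```

A full ray tracer would continue from each hit point, spawning further rays toward lights and reflective surfaces. This sketch stops at the first intersection.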

It’s the digital equivalent of real-world objects being illuminated by beams of light, with the path of that light followed from the eye of the viewer to the objects the light interacts with. That’s ray tracing.

Simulating light in this manner, by shooting rays for every pixel on the screen, is computationally intensive, even for offline renderers that calculate scenes over the course of several minutes or hours. Instead, the renderer samples the scene, firing a handful of rays at various points for a representative sample of the scene’s lighting, reflectivity and shadowing.
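A toy example shows why a handful of rays gives only a rough answer. The sketch below assumes a single pixel whose true light visibility is known, and estimates it by averaging random ray results. The function name and the 30% visibility figure are made up for illustration.

```python
import random

def estimate_visibility(true_visibility, rays, rng):
    """Average `rays` random shadow-ray results for one pixel.

    Each hypothetical ray reaches the light with probability
    `true_visibility`; the mean of the hits is the pixel's estimate.
    """
    hits = sum(1 for _ in range(rays) if rng.random() < true_visibility)
    return hits / rays

rng = random.Random(0)  # fixed seed so the run is repeatable
few_rays = [estimate_visibility(0.3, 2, rng) for _ in range(8)]   # coarse, speckled values
many_rays = estimate_visibility(0.3, 20000, rng)                  # settles near 0.3
```

With two rays per pixel, each estimate can only be 0, 0.5 or 1, which is where the speckled look of an under-sampled image comes from. More rays reduce the noise, at a cost that real-time rendering cannot afford.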

However, there are limitations. The output is a noisy, speckled image with gaps, good enough only to ascertain how the scene should look when ray traced. To fill in the missing pixels that weren’t ray traced, hand-tuned denoisers use two different methods: temporally accumulating pixels across multiple frames, and spatially interpolating them to blend neighboring pixels together. Through this process, the noisy raw output is converted into a ray-traced image.
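The two methods can be sketched in a few lines. This is a deliberately simplified illustration, assuming grayscale images stored as nested lists, a fixed blend weight and a plain 3x3 box filter. Production denoisers are far more elaborate, and none of the names below come from a real denoiser.

```python
def temporal_accumulate(history, current, alpha=0.2):
    """Temporal step: blend the new noisy frame into the running history
    (an exponential moving average; `alpha` is the weight of the new frame)."""
    return [[(1 - alpha) * h + alpha * c for h, c in zip(hrow, crow)]
            for hrow, crow in zip(history, current)]

def spatial_blend(image):
    """Spatial step: replace each pixel with the mean of itself and its
    in-bounds neighbours (a 3x3 box filter)."""
    height, width = len(image), len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [image[ny][nx]
                      for ny in range(max(0, y - 1), min(height, y + 2))
                      for nx in range(max(0, x - 1), min(width, x + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out

# One bright sample surrounded by untraced (zero) pixels.
noisy = [[0.0, 0.0, 0.0],
         [0.0, 9.0, 0.0],
         [0.0, 0.0, 0.0]]
smoothed = spatial_blend(noisy)
history = temporal_accumulate([[0.0] * 3 for _ in range(3)], smoothed)
```

Even in this toy version the trade-offs are visible: a low `alpha` gives a stable image but makes moving content lag behind, while the box filter fills gaps at the price of blurring detail.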

Hand-tuning denoisers adds complexity and cost to the development process, and running them reduces the frame rate in highly ray-traced games where multiple denoisers operate simultaneously for different lighting effects.

DLSS 3.5 Ray Reconstruction introduces an NVIDIA supercomputer-trained, AI-powered neural network that generates higher-quality pixels in between the sampled rays. It recognizes different ray-traced effects to make smarter decisions about using temporal and spatial data, and retains high-frequency information for superior-quality upscaling. It also recognizes lighting patterns from its training data, such as those of global illumination or ambient occlusion, and recreates them in-game.

Portal with RTX is a great example of Ray Reconstruction in action. With DLSS OFF, the denoiser struggles to reconstruct the dynamic shadowing alongside the moving fan.

With DLSS 3.5 and Ray Reconstruction enabled, the AI-trained denoiser recognizes certain patterns associated with shadows and keeps the image stable, accumulating accurate pixels while blending neighboring pixels to generate high-quality reflections.

Deep Learning, Deep Gaming

Ray Reconstruction is just one of the AI graphics breakthroughs that multiply performance in DLSS. Super Resolution, the cornerstone of DLSS, samples multiple lower resolution images and uses motion data and feedback from prior frames to reconstruct native-quality images. The result is high image quality without sacrificing game performance.

DLSS 3 introduced Frame Generation, which boosts performance by using AI to analyze data from surrounding frames and predict what the next generated frame should look like. These generated frames are then inserted in between rendered frames. Combining the DLSS-generated frames with DLSS Super Resolution enables DLSS 3 to reconstruct seven-eighths of the displayed pixels with AI, boosting frame rates by up to 4x compared with rendering without DLSS.
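The seven-eighths figure can be checked with simple arithmetic. The sketch below assumes Super Resolution renders at half the output resolution on each axis (a quarter of the pixels) and that Frame Generation adds one generated frame for every rendered frame. Both settings are assumptions for this example, and the helper name is hypothetical.

```python
from fractions import Fraction

def ai_pixel_fraction(render_scale_per_axis, rendered_frames, displayed_frames):
    """Share of displayed pixels that were reconstructed rather than rendered."""
    # Pixels actually rendered within each rendered frame.
    rendered_per_frame = Fraction(render_scale_per_axis) ** 2
    # Share of displayed frames that were rendered at all.
    rendered_frame_share = Fraction(rendered_frames, displayed_frames)
    return 1 - rendered_per_frame * rendered_frame_share

# Half resolution per axis, one rendered frame out of every two displayed.
share = ai_pixel_fraction(Fraction(1, 2), 1, 2)
```

Under those assumptions, only 1/4 × 1/2 = 1/8 of the displayed pixels are traditionally rendered, leaving 7/8 to AI reconstruction.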

Because DLSS Frame Generation is post-processed (applied after the main render) on the GPU, it can boost frame rates even when the game is bottlenecked by the CPU.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.