The works of Plato state that when humans have an experience, some level of change occurs in their… Read Article

The Hao AI Lab research team at the University of California San Diego — at the forefront of pioneering AI model innovation — recently received an NVIDIA DGX B200 system… Read Article

For 25 years, the NVIDIA Graduate Fellowship Program has supported graduate students doing outstanding work relevant to NVIDIA technologies. Today, the program announced the latest awards of up to $60,000… Read Article

Researchers worldwide rely on open-source technologies as the foundation of their work. To equip the community with the latest advancements in digital and physical AI, NVIDIA is further expanding its… Read Article

Five finalists for the esteemed high-performance computing award have achieved breakthroughs in climate modeling, fluid simulation and more with the Alps, JUPITER and Perlmutter supercomputers — with two winners taking… Read Article

Tanya Berger-Wolf’s first computational biology project started as a bet with a colleague: that she could build an AI model capable of identifying individual zebras faster than a zoologist. She… Read Article

Where CPUs once ruled, power efficiency — and then AI — flipped the balance. Extreme co-design across GPUs, networking and software now drives the frontier of science… Read Article

To power future technologies including liquid-cooled data centers, high-resolution digital displays and long-lasting batteries, scientists are searching for novel chemicals and materials optimized for factors like energy use, durability and… Read Article

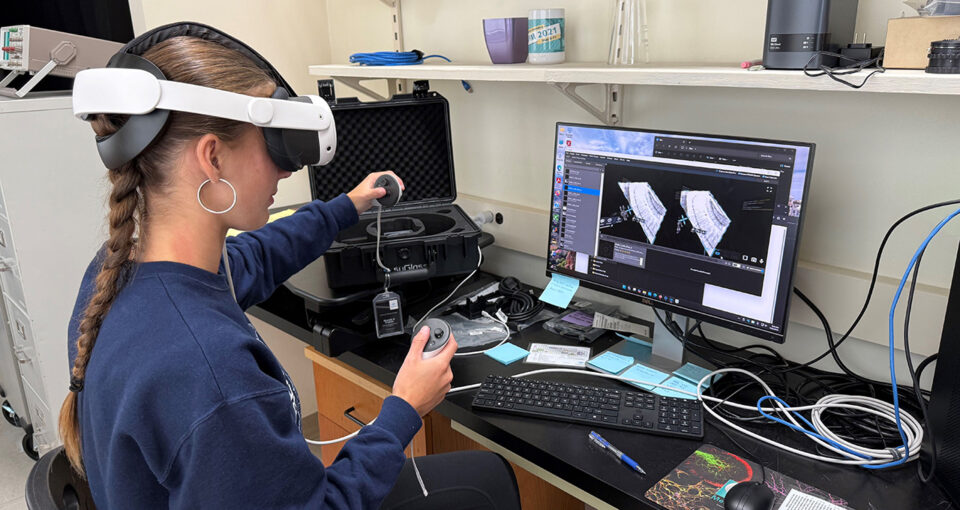

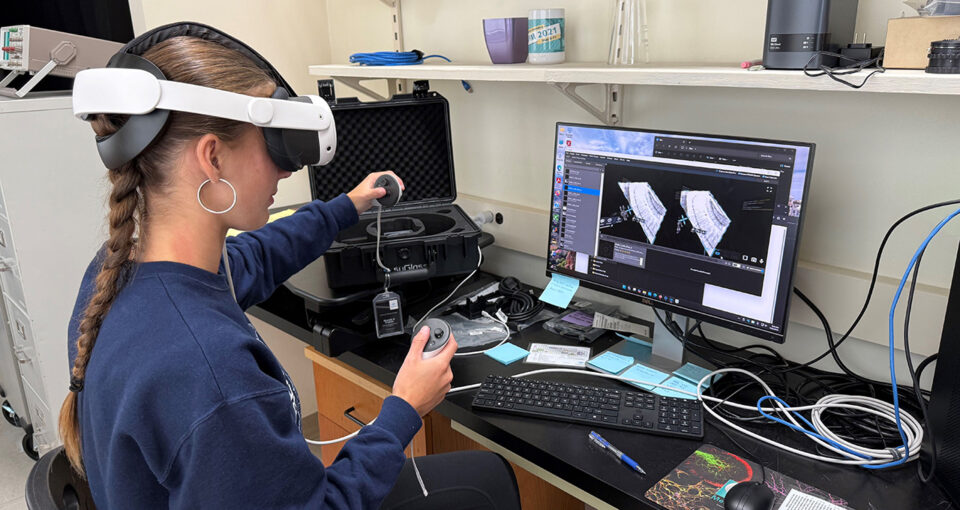

Coastal communities in the U.S. have a 26% chance of flooding within a 30-year period. This percentage is expected to increase due to climate-change-driven sea-level rise, making these areas even… Read Article