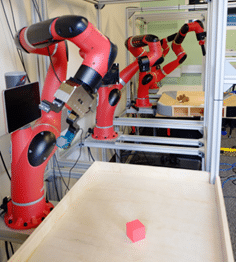

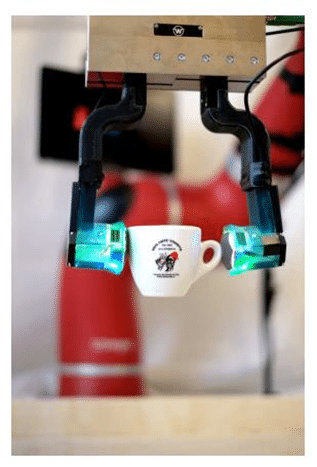

Imagine robots straining to grip door handles. Or lifting plastic bananas and dropping them into dog bowls. Or struggling to push Lego pieces around a metal bin.

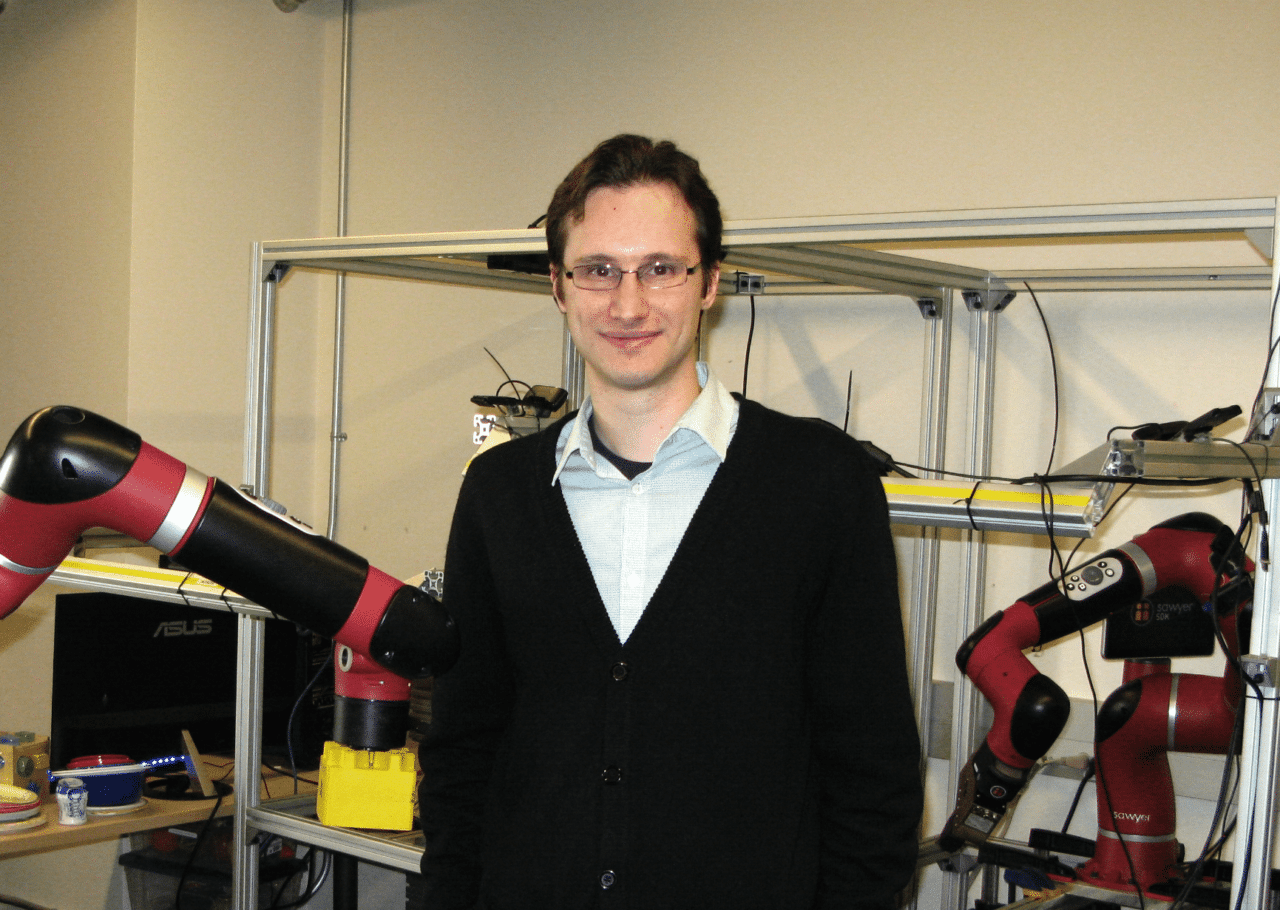

If you visited the laboratory of professor Sergey Levine at the University of California at Berkeley, you might see some puzzling scenes.

“We want to put our robots into an environment where they can explore, where they can essentially play,” says Levine, an assistant professor who runs the Robotic Artificial Intelligence and Learning Lab within the Artificial Intelligence Research Lab at Berkeley, which is a participant in our NVIDIA AI Labs initiative.

Why would robots be playing? Because a key to intelligence may be the way that creatures learn about their physical environment by poking at things, pushing things and observing what happens.

“The only proof of the existence of intelligence is in humans, and humans exist in a physical world, they’re embodied,” explains Levine. “In fact, all intelligent creatures we know are embodied. Perhaps they don’t have to be, but we don’t know that.”

Hence, “I see the robotics as actually a lens on artificial intelligence” more broadly, he says.

From the Bottom Up

One of the biggest lessons from many years of robotics research, says Levine, is that “Moravec’s Paradox” holds.

Hans Moravec, a Carnegie Mellon University professor of robotics, wrote about a dichotomy in AI in his 1988 book “Mind Children: The Future of Robot and Human Intelligence.”

Machines can be taught to do well at “things humans find hard,” such as mastering the game of chess, but they do poorly at “what is easy for us,” such as basic motor skills.

“If you want a machine to play chess, it is actually comparatively quite easy,” observes Levine. “If you want a machine to pick up the chess pieces, that is incredibly difficult.”

Moravec viewed that dichotomy as a “giant clue” to the problem of constructing machines that think. He argued for building up intelligence along the path of Darwinian evolution: the gradual, bottom-up development of basic sensorimotor systems first and, much later, of higher reasoning.

No Cats

There’s a parallel in deep learning, notably in the breakthroughs in image recognition with convolutional neural networks (CNNs). Neural networks that automatically learn the most basic features in data, such as “edge detectors” and “corner detectors,” can then assemble hierarchies of representation.

“What we saw was that a method that can figure out those low-level features can then also figure out the higher-level features,” says Levine.
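To make the idea of a low-level feature concrete, here is a minimal pure-Python sketch of what a single edge detector computes: a small kernel slid across an image. The kernel values are a hand-written, Sobel-style assumption for illustration, and the function and variable names are hypothetical; in a CNN, the first layer learns weights like these from data, and deeper layers combine their outputs into higher-level features.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and return the response map.
    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hand-written (assumed) edge kernels. A trained CNN would learn similar
# weights on its own instead of having them written by hand.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]
HORIZONTAL_EDGE = [
    [-1, -2, -1],
    [0, 0, 0],
    [1, 2, 1],
]

# Tiny 4x5 "image": dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

response = conv2d_valid(image, VERTICAL_EDGE)  # strong where dark meets bright
flat = conv2d_valid(image, HORIZONTAL_EDGE)    # no horizontal edges here

print(response)  # [[0, 36, 36], [0, 36, 36]]
print(flat)      # [[0, 0, 0], [0, 0, 0]]
```

The vertical-edge kernel fires only where its window straddles the dark-to-bright boundary, while the horizontal-edge kernel stays silent on an image that has no horizontal edges.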

Unlike pictures of cats on the internet, however, there isn’t a ready supply of data for robots to learn from. Hence his lab’s focus on having machines explore the environment “for weeks, autonomously pushing things around, manipulating objects and then learning something about their world.”

Levine uses a variety of machine learning techniques to train robots, including CNNs and, especially, reinforcement learning, in which the robot learns a policy: a mapping from its current state to actions that carry it toward a goal state. The robot then uses that policy at test time to carry out new instances of those tasks.
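For a concrete picture, the sketch below shows reinforcement learning at toy scale: tabular Q-learning on a hypothetical five-cell corridor, where the agent learns from reward alone a policy that maps each state to an action leading toward the goal state. This is a textbook technique, not the lab’s method, which trains deep networks on real robot experience; the corridor, the reward and the parameters `alpha`, `gamma` and `epsilon` are all assumptions. Unlike the unsupervised play Levine’s robots engage in, this toy also specifies its goal through a hand-written reward.

```python
import random

N_STATES = 5        # positions 0..4 along a hypothetical 1-D corridor
GOAL = 4            # the goal state (assumed for this toy)
ACTIONS = (-1, +1)  # step left or step right

def step(state, action):
    """Toy environment: move, stay inside the corridor, reward 1 only at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def train_q_table(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values Q(state, action) by trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = rng.randrange(N_STATES - 1)  # start anywhere except the goal
        done = False
        while not done:
            # Epsilon-greedy exploration: occasionally try a random action.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Q-learning update toward reward + discounted best next value.
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q

def greedy_policy(q):
    """The learned policy: the highest-valued action in each non-goal state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES) if s != GOAL}

policy = greedy_policy(train_q_table())
print(policy)
```

After training, the greedy policy steps right from every non-goal cell, which is the shortest route to the goal.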

During training, the robot’s play with objects is “unsupervised.” Humans do not hand-engineer the precise movements the robot should make to carry out a task, nor do they even specify the goal.

The neural network figures out what goal it should accomplish, and then what policies, including the angles through which to move its appendages, can lead to that goal.

‘Learning to Learn’

Training makes use of clusters of NVIDIA GPUs in an offsite facility. At test time, each robot has its own attached GPU, which runs the policies the robot has learned. In more ambitious tests, such as learning a new policy from watching a video demonstration by a human, a more powerful NVIDIA DGX-1 is attached to each machine.

GPU compute power has brought two benefits to AI, says Levine. First, by speeding up training, it “allows us to do science faster.” Second, during inference, GPUs make real-time response possible, something that is “a really big deal for robots.”

“When the robot is actually in the physical world, if it’s doing something dynamic, such as flying at a closed door,” as in the case of a drone, “it needs to figure out the door’s closed before it hits it.”

The reinforcement learning work of Levine and his team has grown steadily more sophisticated. It’s one thing to teach a robot to perform, at test time, a task similar to one it learned in training. It’s more ambitious for the robot to learn, at test time, new policies for solving tasks it has never seen. In that case, the machine is “learning to learn,” says Levine.

This second approach, called meta-learning, is a growing focus of his lab. In a recent paper, “One-shot Hierarchical Imitation Learning of Compound Visuomotor Tasks,” a robot first watches a human demonstrate a simple, “primitive” task, such as dropping an object into a bowl, and develops a policy to imitate that action.

At test time, the robot is shown a “compound” task, such as dropping the object in the bowl and then moving the bowl along the table. The robot draws on its prior experience with the simple tasks to compose a sequence of policies, which it then executes one after another.

Levine’s robots were able to imitate the human’s demonstration of the compound task after seeing it performed just once, what’s known as “one-shot” learning.
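The idea of composing primitives can be illustrated with a toy, symbolic sketch: known primitive skills are used to explain each step of a single demonstration, and the resulting sequence is then replayed from a new starting state. This is only an analogy under stated assumptions. The paper’s robots learn visuomotor policies from camera images, which this does not attempt, and the primitive names, the dictionary-based world state and the `infer_task` and `run_compound` helpers are all hypothetical.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Policy = Callable[[State], State]

# Hypothetical primitive skills, standing in for policies already learned from
# their own demonstrations. Here they just update a symbolic world state.
def drop_object_in_bowl(state: State) -> State:
    return {**state, "object_at": "bowl"}

def push_bowl_right(state: State) -> State:
    return {**state, "bowl_x": state["bowl_x"] + 1}

PRIMITIVES: Dict[str, Policy] = {
    "drop_object_in_bowl": drop_object_in_bowl,
    "push_bowl_right": push_bowl_right,
}

def infer_task(demo: List[State]) -> List[str]:
    """Explain each step of one demonstration with a known primitive."""
    task = []
    for before, after in zip(demo, demo[1:]):
        matches = [name for name, policy in PRIMITIVES.items() if policy(before) == after]
        if not matches:
            raise ValueError(f"no primitive explains {before} -> {after}")
        task.append(matches[0])
    return task

def run_compound(task: List[str], state: State) -> State:
    """Execute a compound task as primitive policies applied in succession."""
    for name in task:
        state = PRIMITIVES[name](state)
    return state

# A single (symbolic) demonstration of the compound task.
demo = [
    {"object_at": "table", "bowl_x": 0},
    {"object_at": "bowl", "bowl_x": 0},
    {"object_at": "bowl", "bowl_x": 1},
]
task = infer_task(demo)
result = run_compound(task, {"object_at": "table", "bowl_x": 3})
print(task)    # ['drop_object_in_bowl', 'push_bowl_right']
print(result)  # {'object_at': 'bowl', 'bowl_x': 4}
```

Because the sequence is recovered from one demonstration and then reused from a different starting position, the sketch mirrors, in miniature, the one-shot flavor of the experiment.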

What will humans learn about intelligence from all this? Some of what robots arrive at may be a rather alien form of intelligence. Robots developed to, say, work in a power plant may build “some kind of internal representation of the world through learning that is kind of unique, that they are setting for the job they’ve been tasked with doing,” says Levine.

“It might be very different from our [representations],” he says, “and very different from how we might design them if we were to do it manually.”

Bot So Fast

Levine is mindful of AI skeptics such as NYU professor Gary Marcus, and he agrees with Marcus that today’s deep learning doesn’t lead to higher reasoning.

“People transfer past knowledge from past experience, and most of what we call AI systems that are deployed today don’t do that, and they should,” he says.

For a robot, developing higher reasoning may be a process that unfolds over its lifetime, rather than something achieved by training a single neural network.

“I think it would be fantastic if, in the future, robots have a kind of a childhood, the same way that we do,” he says, one in which they make progress through various developmental stages.

“Except that with robots, the nice thing is that you can copy and paste their brain,” perhaps speeding that development.

Once robots reach adulthood, muses Levine, their mental development would continue.

“If you have a robot that has to perform some kind of task, maybe it has to do construction, for example, in its off-time, the robot doesn’t just sit there in the closet collecting dust, it actually practices things the same way a person would.”

Coming to a Real World Near You

There’s a tremendous amount of systems engineering that has to be coupled to deep learning to make robots viable. But Levine is confident that “over the span of the next five years or so, we’ll see that these things will actually make their way into the real world.”

“It may start with industrial robots, things like robots in warehouses, grocery stores and so on, but I think we’ll see more and more robots in our daily lives.”