A variety of open compute, networking, storage, automated provisioning, energy efficiency and security solutions will take center stage at the Open Compute Project (OCP) Global Summit, held Oct. 15-17 in San Jose, Calif.

Attendees, speakers and sponsors will present and discuss the impact of these technologies when run at cloud scale, with a special focus on AI workloads, liquid cooling, enhanced networking, accelerator management, memory fabrics and sustainability.

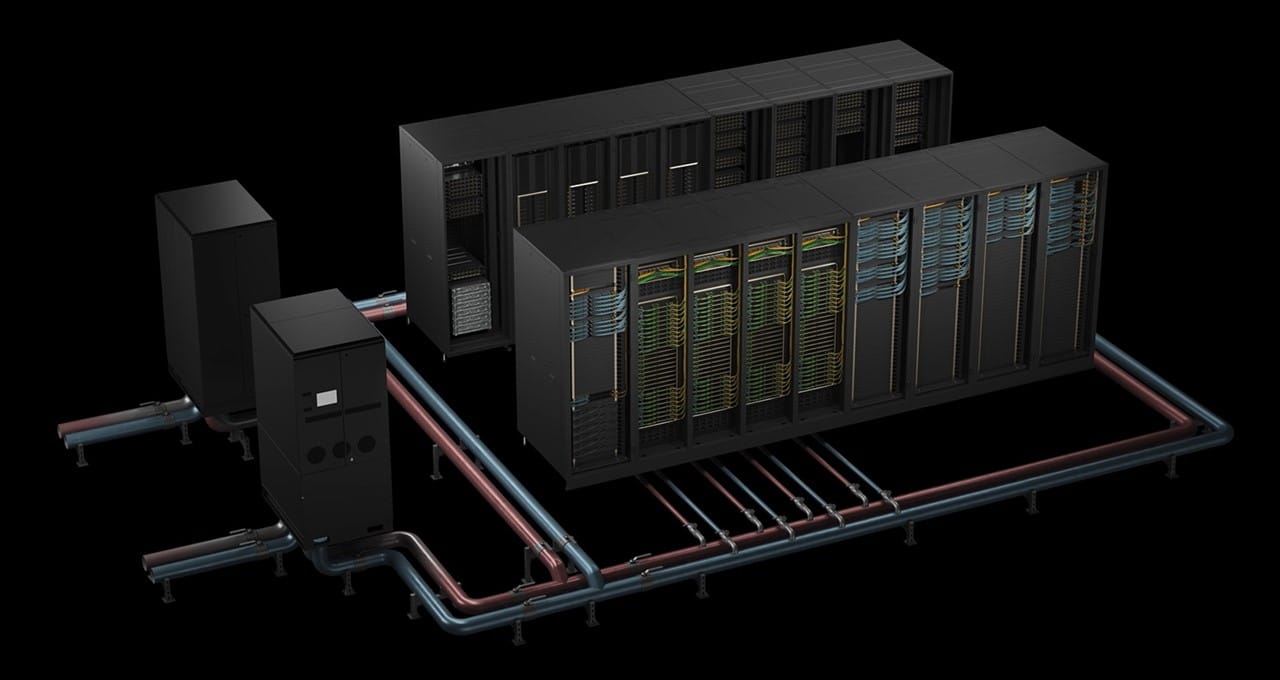

NVIDIA has long contributed to OCP designs and data center innovations. At the show, the company will showcase a wide range of OCP-related solutions, including AI factories, scalable GPU compute clusters, Arm-based CPUs and DPUs, storage and networking.

NVIDIA partners and customers will display and demo the latest NVIDIA accelerated computing platforms, including Blackwell AI servers, AI compute racks, liquid-cooled hardware, DPUs and SuperNICs, network switches and network operating systems.

Also on display will be a new NVIDIA Grace CPU C1 configuration, a single-socket, Arm-based Grace CPU server that is ideal for hyperscale cloud, high-performance edge, telco and more. NVIDIA GH200 Grace Hopper and Grace Blackwell Superchips will be shown as well.

Partners showcasing NVIDIA technology and products at the OCP Global Summit include Aivres, Arm, ASRock Rack, ASUS, Dell Technologies, GIGABYTE, Hewlett Packard Enterprise, Hyve Solutions, Ingrasys, Inventec, Lenovo, Mitac, MSI, Pegatron, QCT, Supermicro, Wistron and Wiwynn.

OCP Summit Keynotes and Sessions

Ian Buck, vice president of hyperscale and HPC at NVIDIA, will deliver a keynote on Tuesday, Oct. 15, at 9:24 a.m. PT. He’ll address the challenges of designing data centers that can meet future AI workload demands and how NVIDIA is collaborating with the OCP community to overcome them.

Attendees can learn about NVIDIA’s cloud-scale offerings in more than 20 OCP Global Summit and OCP Future Technologies Symposium sessions, including:

- Addressing the future thermal challenges with AI, an executive session with panelists from Lenovo, Meta, NVIDIA and Vertiv (Tuesday, Oct. 15, at 2:30 p.m. PT)

- The Insatiable Demand AI Places on the Rack and Datacenter, by Google and NVIDIA (Wednesday, Oct. 16, at 8:05 a.m. PT)

- Standardizing Hyperscale Requirements for Accelerators, by AMD, Google, Meta, Microsoft and NVIDIA (Wednesday, Oct. 16, at 9 a.m. PT)

- AI/HPC Future Fabrics Panel (Future Technologies Symposium), by Baya Systems, Lawrence Berkeley National Labs, Lipac Inc., NIST, Marvell and NVIDIA (Wednesday, Oct. 16, at 9:40 a.m. PT)

- Fabric Resiliency at Scale, by NVIDIA (Wednesday, Oct. 16, at 10:25 a.m. PT)

- High-Performance Data Center Storage Using DPUs, by Supermicro and NVIDIA (Wednesday, Oct. 16, at 1:10 p.m. PT)

- CTAM — Compliance Tool for Accelerator Manageability, by Meta, Microsoft and NVIDIA (Wednesday, Oct. 16, at 4:05 p.m. PT)

- Protecting AI Workloads From Noisy Neighbors in Cloud Networks With NVIDIA Spectrum-X, by NVIDIA (Wednesday, Oct. 16, at 4:10 p.m. PT)

- OCP Cooling Environments and ASHRAE TC9.9 Roadmap for the Future Collaboration, by AEI, BP, Meta and NVIDIA (Thursday, Oct. 17, at 9:45 a.m. PT)

- NVIDIA MGX: Faster Time to Market for the Accelerated Data Center, by NVIDIA (Thursday, Oct. 17, at 10:50 a.m. PT)

- Study of Fluid Velocity Limits in Cold Plate Liquid-Cooling Loops, by 1547 Datacenter and NVIDIA (Thursday, Oct. 17, at 3:20 p.m. PT)

- Enabling Chiplet Interoperability and Breakthroughs in AI, by Arm and NVIDIA (Thursday, Oct. 17, at 3:25 p.m. PT)

OCP started with one type of efficient server for Facebook (now Meta) and has grown to encompass accelerators, multiple server types, storage, networking — including adapters, switches and network operating systems — and a host of related data center technologies, including AI.

See what NVIDIA and its partners are contributing to the Open Compute Project by visiting the NVIDIA OCP Global Summit page.