By using containers, researchers at the University of Minnesota Supercomputing Institute have found a way to keep a lid on the legions of small but significant software elements that high-performance computing and AI spawn.

MSI is a hub for HPC research at academic institutions across the state. It uses NVIDIA GPUs to accelerate more than 400 applications that span work from the genomics of cancer to the impacts of climate change. Empowering thousands of users statewide across these diverse applications is no simple task.

Each application has its own complex set of ingredients. The hardware configuration, compiler and libraries one application requires may clash with what another needs.

System administrators can get overwhelmed by the need to upgrade, install and monitor each app. The experience can leave both admins and users drained in a hunt for the latest and greatest code.

To avoid these pitfalls and empower their users, MSI adopted containers that essentially bundle apps with the libraries, runtime engines and other software elements they require.

Containers Speed Up Application Deployment

With containers, MSI’s users can deploy their apps in a few minutes, without help from administrators.

“Containers are a tool that can increase portability and reproducibility of key elements of research workflows,” said Benjamin Lynch, associate director of Research Computing at MSI. “They play an important role in the rapidly changing software ecosystems like we see in AI/ML on NVIDIA GPUs.”

Because a container provides everything needed to run an app, a researcher who wants to test an application built in Ubuntu doesn’t have to worry about incompatibility when running on MSI’s CentOS cluster.

“Containers are a critical tool for encapsulating complex agro-environmental models into reproducible and easily parallelized workflows that other researchers can replicate,” said Bryan Runck, a geo-computing scientist at the University of Minnesota.

NGC: Hub for GPU-Optimized HPC & AI Software

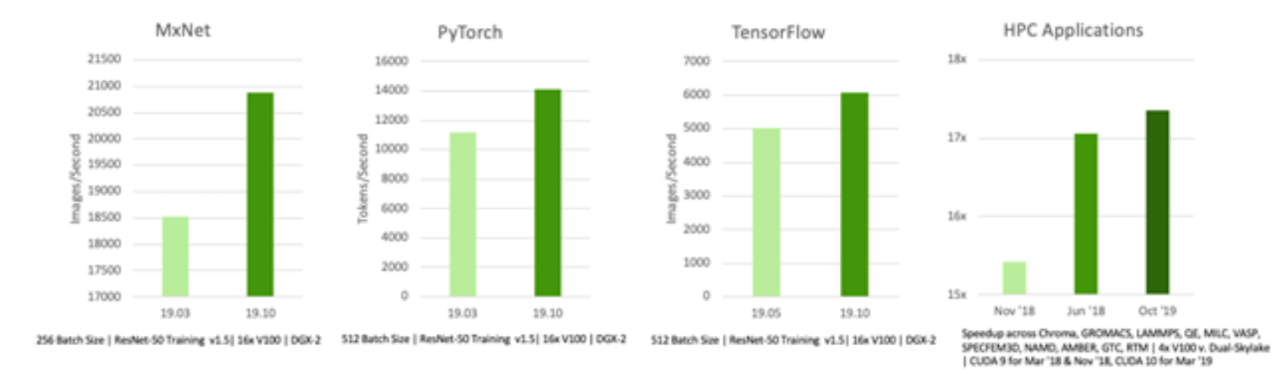

MSI’s researchers chose NVIDIA’s NGC registry as their source for GPU-optimized HPC and AI containers. The NGC catalog sports containers that span everything from deep learning to visualizations, all tested and tuned to deliver maximum performance.

The containers are also tested for compatibility across multiple architectures, such as x86 and ARM, so system administrators can easily support diverse users.
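As a rough illustration of what running a registry container on a cluster can involve, the Python sketch below assembles a launch command. It assumes an Apptainer/Singularity-style runtime, which the article does not confirm MSI uses. The helper name `build_container_command`, the `<tag>` placeholder and the `train.py` script are hypothetical. The command is only built and printed, not executed.

```python
def build_container_command(image, app_args, runtime="apptainer", use_gpu=True):
    """Return the argument list for running app_args inside a container image.

    Assumes an Apptainer/Singularity-style command line; other runtimes
    use different syntax.
    """
    cmd = [runtime, "exec"]
    if use_gpu:
        # --nv is the Apptainer/Singularity flag that exposes NVIDIA GPUs
        # and the host driver libraries inside the container.
        cmd.append("--nv")
    # The docker:// prefix tells the runtime to fetch an OCI image from a registry.
    cmd.append(f"docker://{image}")
    cmd.extend(app_args)
    return cmd


# Hypothetical image tag and script name, for illustration only.
IMAGE = "nvcr.io/nvidia/pytorch:<tag>"
command = build_container_command(IMAGE, ["python", "train.py"])

if __name__ == "__main__":
    print(" ".join(command))
```

Because the image carries its own libraries and runtime, the same command line would in principle work regardless of which Linux distribution the host cluster runs.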

When it comes to AI, NGC packs a large collection of pre-trained models and developer kits. Researchers can apply transfer learning to these models to create their own custom versions, reducing development time.
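The idea behind transfer learning can be sketched in a few lines of plain Python. This is a toy illustration, not NGC's tooling or any specific model: the "pre-trained" layer is a small set of made-up fixed weights (`FROZEN_WEIGHTS`) and the dataset is invented. It shows only the core pattern, which is to keep the pre-trained layers frozen and train a small new output layer on custom data.

```python
import math

# Stand-in for layers of a pre-trained model (made-up weights, never updated).
FROZEN_WEIGHTS = [[0.8, -0.5], [-0.3, 0.9], [0.5, 0.5]]

# Invented custom dataset: label is 1 when the first input exceeds the second.
TRAIN = [
    ([1.0, 0.0], 1), ([2.0, 0.5], 1), ([1.5, -0.5], 1),
    ([0.0, 1.0], 0), ([0.5, 2.0], 0), ([-0.5, 1.5], 0),
]


def extract_features(x):
    """Frozen 'pre-trained' layer: fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def predict(head, bias, x):
    """Probability of class 1 from the new head applied to frozen features."""
    z = sum(h * f for h, f in zip(head, extract_features(x))) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune(data, epochs=200, lr=0.5):
    """Train only the new head with stochastic gradient descent on log loss."""
    head = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            err = predict(head, bias, x) - y  # gradient of log loss w.r.t. z
            head = [h - lr * err * f for h, f in zip(head, feats)]
            bias -= lr * err
    return head, bias


if __name__ == "__main__":
    head, bias = fine_tune(TRAIN)
    for x, y in TRAIN:
        print(x, y, round(predict(head, bias, x), 3))
```

Only the handful of head parameters are learned here, which is why starting from a pre-trained model can cut development time compared with training every layer from scratch.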

“Being able to run containerized applications on HPC platforms is an easy way to get started, and GPUs have reduced the computation time by more than 10x,” said Christina Poudyal, a data scientist with a focus on agriculture at the University of Minnesota.

HPC, AI Workloads Converging

The melding of HPC and AI applications is another driver for MSI’s adoption of containers. Both workloads leverage the parallel computing capabilities of MSI’s GPU-accelerated systems.

This convergence spawns work across disciplines.

“Application scientists are closely collaborating with computer scientists to fundamentally advance the way AI methods are using new sources of data and incorporating some of the physical processes that we already know,” said Jim Wilgenbusch, director of Research Computing at the university.

These multi-disciplinary teams partner with NVIDIA to optimize their workflows and algorithms. And they rely on containers updated, tested and stored in NGC to keep pace with rapid changes in AI software.