Cloud or on premises? That’s the question many organizations ask when building AI infrastructure.

Cloud computing can help developers get a fast start with minimal cost. It’s great for early experimentation and supporting temporary needs.

As businesses iterate on their AI models, however, the models can become increasingly complex, consume more compute cycles and involve much larger datasets. The costs of data gravity can then escalate, with more time and money spent pushing large datasets from where they’re generated to where compute resources reside.
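A rough back-of-the-envelope sketch makes the data-gravity cost concrete: the time to move a dataset is roughly its size divided by the usable network bandwidth. The function below is a minimal Python illustration. The 10 Gb/s link, the 80% efficiency factor and the dataset sizes are hypothetical placeholders, not measured figures.

```python
def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate the hours needed to copy a dataset over a network link.

    dataset_tb: dataset size in decimal terabytes (1 TB = 10**12 bytes)
    link_gbps: nominal link bandwidth in gigabits per second
    efficiency: assumed fraction of nominal bandwidth actually achieved (hypothetical)
    """
    bits = dataset_tb * 1e12 * 8                    # terabytes -> bits
    effective_bps = link_gbps * 1e9 * efficiency    # achievable bits per second
    return bits / effective_bps / 3600              # seconds -> hours


# Hypothetical dataset sizes moved over a hypothetical 10 Gb/s link
for size_tb in (1, 10, 100):
    print(f"{size_tb:>4} TB -> {transfer_hours(size_tb, link_gbps=10):6.1f} h per copy")
```

Because the estimate is linear in dataset size, a dataset that grows 100x takes roughly 100x longer to move on every copy, which is why keeping data next to compute matters more as models mature.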

This AI development “speed bump” is often an inflection point where organizations realize that on-premises or colocated infrastructure can lower their operating costs. Because its costs are fixed, such infrastructure can support rapid iteration at the lowest “cost per training run,” complementing their cloud usage.
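The “cost per training run” argument can be sketched with a simple model: on-prem fixed costs are amortized over however many runs a team completes each month, while cloud cost scales with the GPU-hours each run consumes. The classes, field names and dollar figures below are hypothetical and only show the shape of the break-even calculation, not real pricing.

```python
import math
from dataclasses import dataclass


@dataclass
class OnPrem:
    monthly_fixed_cost: float     # amortized hardware, power, space, support (hypothetical)
    marginal_cost_per_run: float  # variable cost that still applies per run (hypothetical)

    def cost_per_run(self, runs_per_month: int) -> float:
        if runs_per_month <= 0:
            raise ValueError("runs_per_month must be positive")
        # Fixed costs are spread across every run: more runs -> cheaper runs
        return self.monthly_fixed_cost / runs_per_month + self.marginal_cost_per_run


@dataclass
class Cloud:
    gpu_hours_per_run: float   # GPU-hours consumed by one training run (hypothetical)
    price_per_gpu_hour: float  # on-demand price per GPU-hour (hypothetical)

    def cost_per_run(self) -> float:
        # Pay-as-you-go: cost per run does not depend on how many runs you do
        return self.gpu_hours_per_run * self.price_per_gpu_hour


def break_even_runs(onprem: OnPrem, cloud: Cloud) -> float:
    """Monthly run count at which on-prem and cloud cost per run are equal."""
    saving_per_run = cloud.cost_per_run() - onprem.marginal_cost_per_run
    if saving_per_run <= 0:
        return math.inf  # on-prem never catches up under these assumptions
    return onprem.monthly_fixed_cost / saving_per_run


# Hypothetical figures for illustration only
onprem = OnPrem(monthly_fixed_cost=20_000.0, marginal_cost_per_run=100.0)
cloud = Cloud(gpu_hours_per_run=100.0, price_per_gpu_hour=3.0)

print(f"break-even: {break_even_runs(onprem, cloud):.0f} runs/month")
for runs in (50, 100, 200):
    print(f"{runs:>3} runs: on-prem ${onprem.cost_per_run(runs):.0f}/run, "
          f"cloud ${cloud.cost_per_run():.0f}/run")
```

Below the break-even point, pay-as-you-go cloud pricing wins; above it, every additional run on fixed-cost infrastructure lowers the cost of each run. The model deliberately ignores factors such as data transfer, staffing and idle capacity.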

Conversely, for organizations whose datasets are created in the cloud and live there, procuring compute resources adjacent to that data makes sense. Whether on-prem or in the cloud, minimizing data travel — by keeping large volumes as close to compute resources as possible — helps minimize the impact of data gravity on operating costs.

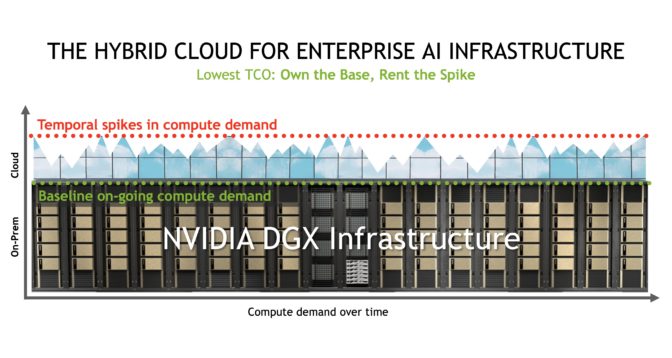

‘Own the Base, Rent the Spike’

Businesses that ultimately embrace hybrid cloud infrastructure trace a familiar trajectory.

One customer developing an image recognition application immediately benefited from a fast, effortless start in the cloud.

As their dataset grew to millions of images, costs rose and processing slowed, causing their data scientists to become more cautious about refining their models.

At this tipping point, when fixed-cost infrastructure became justified, they shifted training workloads to an on-prem NVIDIA DGX system. This enabled an immediate return to rapid, creative experimentation, letting the business build on the strong start it got in the cloud.

The saying “own the base, rent the spike” captures this situation. Enterprise IT provisions on-prem DGX infrastructure to support the steady-state volume of AI workloads and retains the ability to burst to the cloud whenever extra capacity is needed.
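The “own the base, rent the spike” policy can be sketched as a simple placement rule: fill on-prem GPU capacity first and send only the overflow to the cloud. The function below is a minimal, hypothetical first-fit sketch over a snapshot of concurrent jobs; it ignores job durations, priorities and queueing, which a real scheduler would have to handle. The 16-GPU capacity in the example assumes two DGX A100 systems with eight GPUs each.

```python
from typing import List, Tuple


def place_jobs(job_gpus: List[int], onprem_gpus: int) -> Tuple[List[int], List[int]]:
    """Split concurrent jobs between on-prem ("the base") and cloud ("the spike").

    job_gpus: GPUs requested by each job, in arrival order
    onprem_gpus: total GPUs available on-prem
    Returns (indices of jobs placed on-prem, indices of jobs burst to cloud).
    """
    free = onprem_gpus
    onprem: List[int] = []
    cloud: List[int] = []
    for i, gpus in enumerate(job_gpus):
        if gpus <= free:
            # Owned capacity is already paid for, so use it whenever a job fits
            onprem.append(i)
            free -= gpus
        else:
            # Not enough free on-prem GPUs: rent cloud capacity for this job
            cloud.append(i)
    return onprem, cloud


# Hypothetical demand: five jobs against two 8-GPU DGX A100 systems (16 GPUs)
base, spike = place_jobs([8, 4, 8, 2, 4], onprem_gpus=16)
print("on-prem jobs:", base)
print("cloud jobs:  ", spike)
```

First-fit in arrival order keeps the sketch short, but it can leave on-prem GPUs idle (two remain free in this example), which is one reason production schedulers use richer policies.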

This hybrid cloud approach can keep compute resources continuously available to developers while ensuring the lowest cost per training run.

Delivering the AI Hybrid Cloud with DGX and Google Cloud’s Anthos on Bare Metal

To help businesses embrace hybrid cloud infrastructure, NVIDIA has introduced support for Google Cloud’s Anthos on bare metal for its DGX A100 systems.

For customers using Kubernetes to straddle cloud GPU compute instances and on-prem DGX infrastructure, Anthos on bare metal enables a consistent development and operational experience across deployments, while reducing expensive overhead and improving developer productivity.

This offers enterprises several benefits. While many have implemented GPU-accelerated AI in their data centers, much of the world still runs legacy x86 compute infrastructure. With Anthos on bare metal, IT teams can add on-prem DGX systems to that infrastructure to tackle AI workloads and manage them in the same familiar way, all without a hypervisor layer.

Anthos on bare metal, now generally available, manages application deployment and health across existing environments without a virtual machine layer, for more efficient operations. It can also manage application containers on a wide variety of high-performance, GPU-optimized hardware types and gives applications direct access to that hardware.

“Anthos on bare metal provides customers with more choice over how and where they run applications and workloads,” said Rayn Veerubhotla, Director of Partner Engineering at Google Cloud. “NVIDIA’s support for Anthos on bare metal means customers can seamlessly deploy NVIDIA’s GPU Device Plugin directly on their hardware, enabling increased performance and flexibility to balance ML workloads across hybrid environments.”

Additionally, teams can access their favorite NVIDIA NGC containers, Helm charts and AI models from anywhere.
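On the Kubernetes side, a workload uses GPUs exposed by the NVIDIA device plugin by requesting the `nvidia.com/gpu` extended resource in its container limits. The sketch below builds such a Pod manifest in Python and prints it as JSON, a format `kubectl apply -f` accepts. The pod name, the `train.py` command and the image tag are hypothetical placeholders (replace `<tag>` with a real tag from the NGC catalog). Nothing here is Anthos-specific; it is a plain Kubernetes Pod spec.

```python
import json
from typing import List


def gpu_training_pod(name: str, image: str, gpus: int, command: List[str]) -> dict:
    """Build a Kubernetes Pod manifest that requests NVIDIA GPUs.

    The `nvidia.com/gpu` extended resource is advertised to the scheduler by the
    NVIDIA device plugin; GPUs are requested through the container's limits.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",  # a training run should not restart on exit
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "command": command,
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical values: "<tag>" and train.py are placeholders, not real artifacts
pod = gpu_training_pod(
    name="train-demo",
    image="nvcr.io/nvidia/pytorch:<tag>",
    gpus=8,
    command=["python", "train.py"],
)
print(json.dumps(pod, indent=2))
```

Because only the resource request names the GPU, the same manifest can be scheduled onto an on-prem DGX node or a cloud GPU node, whichever cluster it is applied to.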

With this combination, enterprises can enjoy the rapid start and elasticity of resources offered on Google Cloud, as well as the secure performance of dedicated on-prem DGX infrastructure.

Learn more about Google Cloud’s Anthos.

Learn more about NVIDIA DGX A100.