Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series, which takes an engineering-focused look at individual autonomous vehicle challenges and how NVIDIA DRIVE addresses them. You can catch up on all of our automotive posts as well.

Autonomous vehicles don’t just need to detect the moving traffic that surrounds them — they must also be able to tell what isn’t in motion.

At first glance, camera-based perception may seem sufficient to make these determinations. However, low lighting, inclement weather or heavy occlusion of objects can degrade what cameras see. Diverse and redundant sensors, such as radar, must therefore also be capable of performing this task. Yet adding radar sensors that rely only on traditional processing may not be enough.

In this DRIVE Labs video, we show how AI can address the shortcomings of traditional radar signal processing in distinguishing moving and stationary objects to bolster autonomous vehicle perception.

A radar sensor bounces signals off objects in the environment, and traditional radar processing analyzes the strength and density of the reflections that come back. If a sufficiently strong and dense cluster of reflections returns, classical radar processing can determine that it likely comes from some kind of large object. If that cluster also changes position over time, then the object is probably a car.

While this approach can work well for inferring a moving vehicle, the same may not be true for a stationary one. In this case, the object produces a dense cluster of reflections, but doesn’t move. According to classical radar processing, this means the object could be a railing, a broken-down car, a highway overpass or some other object. The approach often has no way of telling which one it is.
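The classical heuristic described above can be sketched in a few lines of Python. This is only an illustration of the reasoning, not the processing used in NVIDIA DRIVE: the `Detection` fields, the function name and every threshold are assumptions made up for the example, and point count stands in as a crude proxy for cluster density.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Detection:
    """One radar reflection (all fields are hypothetical for this sketch)."""
    x: float            # position ahead of the vehicle, meters
    y: float            # lateral position, meters
    strength_db: float  # reflection strength
    speed_mps: float    # assumed ego-motion-compensated speed of the reflection


def classify_cluster(cluster, min_points=5, min_strength_db=10.0,
                     min_speed_mps=0.5):
    """Apply the strength / density / motion rule to one cluster.

    Thresholds are illustrative assumptions, not tuned values.
    """
    # Too few or too weak reflections: not confident there is a large object.
    if len(cluster) < min_points:
        return "weak_or_sparse"
    if mean(d.strength_db for d in cluster) < min_strength_db:
        return "weak_or_sparse"

    # Strong, dense and moving: probably a car.
    if abs(mean(d.speed_mps for d in cluster)) > min_speed_mps:
        return "probably_vehicle"

    # Strong, dense and not moving: railing, stalled car, overpass...
    # The classical rule cannot tell these apart.
    return "stationary_unknown"


moving = [Detection(10 + 0.1 * i, 0.0, 15.0, 8.0) for i in range(6)]
parked = [Detection(10 + 0.1 * i, 0.0, 15.0, 0.0) for i in range(6)]
print(classify_cluster(moving))
print(classify_cluster(parked))
```

The last branch is where the ambiguity shows up: every strong, dense, non-moving cluster collapses into the same catch-all answer.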

Introducing Radar DNN

One way to overcome the limitations of this approach is with AI in the form of a deep neural network (DNN).

Specifically, we trained a DNN to detect moving and stationary objects, as well as accurately distinguish between different types of stationary obstacles, using data from radar sensors.

Training the DNN first required overcoming radar data sparsity problems. Since radar reflections can be quite sparse, it’s practically infeasible for humans to visually identify and label vehicles from radar data alone.

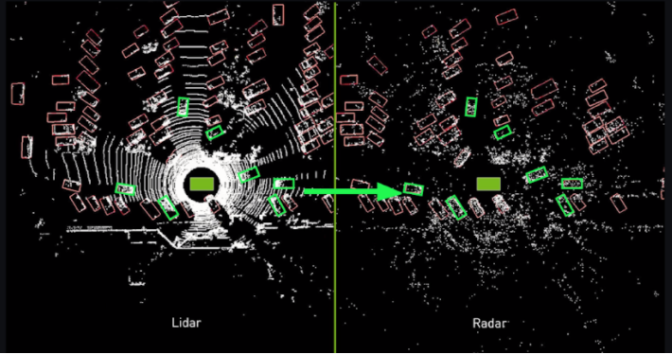

Lidar, however, can create a 3D image of surrounding objects using laser pulses. Thus, ground truth data for the DNN was created by propagating bounding box labels from the corresponding lidar dataset onto the radar data as shown in Figure 1. In this way, the ability of a human labeler to visually identify and label cars from lidar data is effectively transferred into the radar domain.
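One plausible way to picture this label propagation is a simple geometric check: any radar reflection that falls inside a lidar-labeled bounding box inherits that box's label. The sketch below works in a 2D bird's-eye view and assumes the radar points and lidar boxes are already time-synchronized and expressed in the same coordinate frame. The `Box` type, the `margin` parameter and the function names are hypothetical, and the actual pipeline may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Lidar-labeled box in bird's-eye view (hypothetical layout)."""
    cx: float      # center x, meters
    cy: float      # center y, meters
    length: float  # extent along the box heading, meters
    width: float   # extent across the box heading, meters
    yaw: float     # heading angle, radians
    label: str


def point_in_box(px, py, box, margin=0.0):
    """Return True if (px, py) lies inside the rotated box, plus a margin."""
    dx, dy = px - box.cx, py - box.cy
    c, s = math.cos(box.yaw), math.sin(box.yaw)
    # Rotate the offset into the box's own frame.
    local_x = c * dx + s * dy
    local_y = -s * dx + c * dy
    return (abs(local_x) <= box.length / 2 + margin
            and abs(local_y) <= box.width / 2 + margin)


def propagate_labels(radar_points, lidar_boxes, margin=0.2):
    """Give each radar point the label of the first box that contains it."""
    labels = []
    for px, py in radar_points:
        label = "background"
        for box in lidar_boxes:
            if point_in_box(px, py, box, margin):
                label = box.label
                break
        labels.append(label)
    return labels


boxes = [Box(cx=10.0, cy=0.0, length=4.0, width=2.0, yaw=0.0, label="car")]
points = [(11.0, 0.5), (20.0, 0.0)]
print(propagate_labels(points, boxes))
```

The small `margin` is a design choice for the sketch: sparse radar reflections rarely line up exactly with lidar box edges, so a little slack keeps near-miss points attached to the object.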

Moreover, through this process, the radar DNN learns not only to detect cars, but also to estimate their 3D shape, dimensions and orientation, which classical methods cannot easily do.

With this additional information, the radar DNN is able to distinguish between different types of obstacles, even when they are stationary. It also increases confidence in true positive detections and reduces false positive detections.

The higher-confidence 3D perception results from the radar DNN in turn enable AV prediction, planning and control software to make better driving decisions, particularly in challenging scenarios. Problems that are classically difficult for radar, such as accurate shape and orientation estimation and the detection of stationary vehicles or vehicles under highway overpasses, become feasible with far fewer failures.

The radar DNN output is integrated smoothly with classical radar processing. Together, these two components form the basis of our radar obstacle perception software stack.

This stack is designed to offer full redundancy to camera-based obstacle perception, to enable radar-only input to planning and control, and to support fusion with camera or lidar perception software.

With such comprehensive radar perception capabilities, autonomous vehicles can perceive their surroundings with confidence.

To learn more about the software functionality we’re building, check out the rest of our DRIVE Labs series.