Cars of the future will be more than just modes of transportation; they’ll be intelligent companions, seamlessly blending technology and comfort to enhance driving experiences, and built for safety, inside and out.

NVIDIA GTC, running this week at the San Jose Convention Center, will spotlight the groundbreaking work NVIDIA and its partners are doing to bring the transformative power of generative AI, large language models (LLMs) and vision language models (VLMs) to the mobility sector.

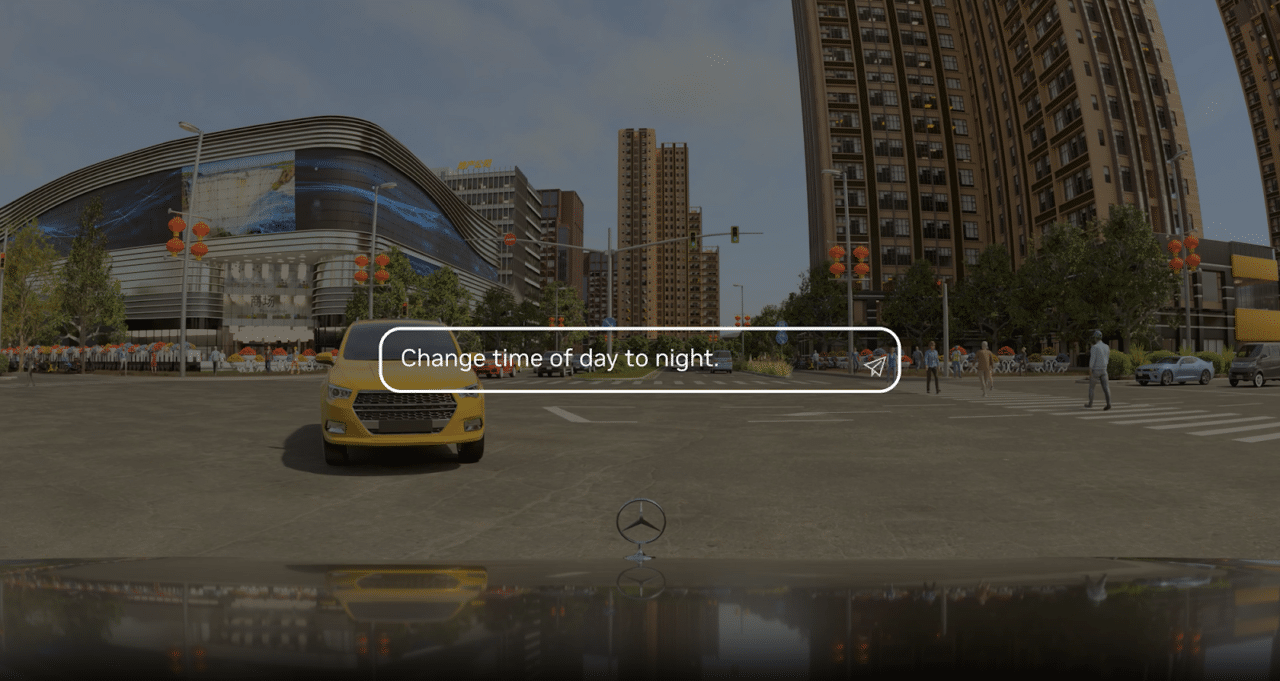

At its booth, NVIDIA will showcase how it’s building automotive assistants that improve driver safety, security and comfort through enhanced perception, understanding and generative capabilities powered by deep learning and transformer models.

Talking the Talk

LLMs, a form of generative AI, largely represent a class of deep-learning architectures known as transformer models, which are neural networks adept at learning context and meaning.

Vision language models (VLMs) are another form of generative AI, offering both image processing and language understanding capabilities. Unlike traditional or multimodal LLMs that primarily process and generate text-based data, VLMs can analyze images or videos and generate text from them.

And retrieval-augmented generation (RAG) allows manufacturers to access knowledge from a specific database or the web to assist drivers.
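To make the retrieval step concrete, here is a minimal sketch in Python. It is an illustration only, not NVIDIA's or any partner's implementation: the manual snippets, the function names (`tokenize`, `score`, `retrieve`, `build_prompt`) and the simple word-overlap ranking are all assumptions made for this example. A production system would more likely use embedding-based search and pass the resulting prompt to an LLM.

```python
import re
from collections import Counter

# Hypothetical snippets standing in for a manufacturer's knowledge base.
DOCUMENTS = [
    "Tire pressure: the recommended cold tire pressure is 35 psi for all four tires.",
    "Child lock: press and hold the rear door lock button for three seconds to enable the child lock.",
    "Wipers: rotate the right stalk upward to turn on the automatic wipers.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, document):
    """Sum, over the query tokens, how often each one occurs in the document."""
    doc_counts = Counter(tokenize(document))
    return sum(doc_counts[token] for token in tokenize(query))


def retrieve(query, documents, top_k=1):
    """Return the top_k documents with the highest word-overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents):
    """Combine the retrieved context and the question into a prompt for an LLM."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("What tire pressure should I use?", DOCUMENTS))
```

The design point is that the language model answers from retrieved text rather than from memory alone, so updating the database updates what the assistant can tell the driver.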

Together, these technologies enable NVIDIA Avatar Cloud Engine, or ACE, and multimodal language models to work with the NVIDIA DRIVE platform, letting automotive manufacturers develop their own intelligent in-car assistants.

For example, an Avatar configurator can allow designers to build unique, brand-inspired personas for their cars, complete with customized voices and emotional attributes. These AI-animated avatars can engage in natural dialogue, providing real-time assistance, recommendations and personalized interactions.

Furthermore, AI-enhanced surround visualization improves vehicle safety using 360-degree camera reconstruction, while the intelligent assistant sources external information, such as local driving laws, to inform decision-making.

Personalization is paramount, with AI assistants learning driver and passenger habits and adapting their behavior to suit occupants’ needs.

Generative AI for Automotive in Full Force at GTC

Several NVIDIA partners at GTC are also showcasing their latest generative AI developments using NVIDIA’s edge-to-cloud technology:

- Cerence’s CaLLM is an automotive-specific LLM that serves as the foundation for the company’s next-gen in-car computing platform, running on NVIDIA DRIVE. The platform, unveiled late last year, is the future of in-car interaction, with an automotive- and mobility-specific assistant that provides an integrated in-cabin experience. Cerence is collaborating with NVIDIA engineering teams for deeper integration of CaLLM with the NVIDIA AI Foundation Models. Through joint efforts, Cerence is harnessing NVIDIA DGX Cloud as the development platform, applying guardrails for enhanced performance, and leveraging NVIDIA AI Enterprise to optimize inference. NVIDIA and Cerence will continue to partner and pioneer this solution together with several automotive OEMs this year.

- Wayve is helping usher in the new era of Embodied AI for autonomy. Its next-generation AV2.0 approach is characterized by a large Embodied AI foundation model that learns to drive in a self-supervised manner using end-to-end AI, from sensing as an input to driving actions as an output. The British startup has already unveiled GAIA-1, a generative world model for AV development running on NVIDIA, alongside LINGO-1, a closed-loop driving commentator that uses natural language to enhance the learning and explainability of AI driving models.

- Li Auto unveiled its multimodal cognitive model, Mind GPT, in June. Built on NVIDIA TensorRT-LLM, an open-source library, it serves as the basis for the electric vehicle maker’s AI assistant, Lixiang Tongxue, for scene understanding, generation, knowledge retention and reasoning capabilities. Li Auto is currently developing DriveVLM to enhance autonomous driving capabilities, enabling the system to understand complex scenarios, particularly those that are challenging for traditional AV pipelines, such as unstructured roads, rare and unusual objects, and unexpected traffic events. This advanced model is trained on NVIDIA GPUs and uses TensorRT-LLM and NVIDIA Triton Inference Server for data generation in the data center. With inference optimized by NVIDIA DRIVE and TensorRT-LLM, DriveVLM performs efficiently on embedded systems.

- NIO launched its NOMI GPT, which offers a number of functional experiences, including NOMI Encyclopedia Q&A, Cabin Atmosphere Master and Vehicle Assistant. With the capabilities enabled by LLMs and an efficient computing platform powered by NVIDIA AI stacks, NOMI GPT is capable of basic speech recognition and command execution functions and can use deep learning to understand and process more complex sentences and instructions inside the car.

- Geely is working with NVIDIA to provide intelligent cabin experiences, along with accelerated edge-to-cloud deployment. Specifically, Geely is applying generative AI and LLM technology to provide smarter, personalized and safer driving experiences, using natural language processing, dialogue systems and predictive analytics for intelligent navigation and voice assistants. When deploying LLMs into production, Geely uses NVIDIA TensorRT-LLM to achieve highly efficient inference. For more complex tasks or scenarios requiring massive data support, Geely plans to deploy large-scale models in the cloud.

- Waabi is building AI for self-driving and will use the generative AI capabilities afforded by NVIDIA DRIVE Thor for its breakthrough autonomous trucking solutions, bringing safe and reliable autonomy to the trucking industry.

- Lenovo is unveiling a new AI acceleration engine, dubbed UltraBoost, which will run on NVIDIA DRIVE, and features an AI model engine and AI compiler tool chains to facilitate the deployment of LLMs within vehicles.

- SoundHound AI is using NVIDIA to run its in-vehicle voice interface, which combines both real-time and generative AI capabilities, even when a vehicle has no cloud connectivity. This solution also offers drivers access to SoundHound’s Vehicle Intelligence product, which instantly delivers settings, troubleshooting and other information directly from the car manual and other data sources via natural speech, rather than through a physical document.

- Tata Consultancy Services (part of the TATA Group), through its AI-based technology and engineering innovation, has built its automotive GenAI suite powered by NVIDIA GPUs and software frameworks. It accelerates the design, development and validation of software-defined vehicles, leveraging various LLMs and VLMs for in-vehicle and cloud-based systems.

- MediaTek is announcing four automotive systems-on-a-chip within its Dimensity Auto Cockpit portfolio, offering powerful AI-based in-cabin experiences for the next generation of intelligent vehicles that span from premium to entry level. To support deep learning capabilities, the Dimensity Auto Cockpit chipsets integrate NVIDIA’s next-gen GPU-accelerated AI computing and NVIDIA RTX-powered graphics to run LLMs in the car, allowing vehicles to support chatbots, rich content delivery to multiple displays, driver alertness detection and other AI-based safety and entertainment applications.

Check out the many automotive talks on generative AI and LLMs throughout the week of GTC.

Register today to attend GTC in person, or tune in virtually, to explore how generative AI is making transportation safer, smarter and more enjoyable.