Thanks to earbuds, you can take calls anywhere while doing anything. The problem: those on the other end of the call hear it all, too, from your roommate’s vacuum cleaner to background conversations at the cafe you’re working from.

Now, work by a trio of graduate students at the University of Washington, who spent the pandemic cooped up together in a noisy apartment, lets those on the other end of the call hear just you, rather than all the stuff going on around you.

Users found that the system, dubbed “ClearBuds” and presented last month at the ACM International Conference on Mobile Systems, Applications, and Services, suppressed background noise much better than a commercially available alternative.

“You’re removing your audio background the same way you can remove your visual background on a video call,” explained Vivek Jayaram, a doctoral student in the Paul G. Allen School of Computer Science & Engineering.

Outlined in a paper co-authored by the three roommates, all computer science and engineering graduate students at the University of Washington — Maruchi Kim, Ishan Chatterjee, and Jayaram — ClearBuds are different from other wireless earbuds in two big ways.

First, ClearBuds use a pair of microphones, one in each earbud.

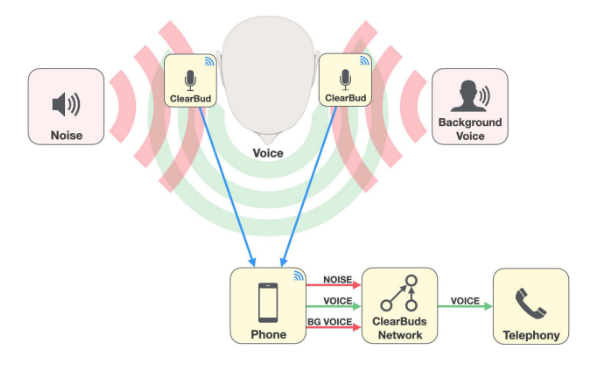

While most earbuds use two microphones on the same earbud, ClearBuds use one microphone from each earbud to create two audio streams.

This creates higher spatial resolution for the system to better separate sounds coming from different directions, Kim explained. In other words, it makes it easier for the system to pick out the earbud wearer’s voice.

Second, the team created a neural network algorithm that can run on a mobile phone to process the audio streams to identify which sounds should be enhanced and which should be suppressed.

The researchers relied on two separate neural networks to do this.

The first neural network suppresses everything that isn’t a human voice.

The second enhances the speaker’s voice. The speaker can be identified because their voice reaches the microphones in both earbuds at the same time.

Together, they effectively mask background noise and ensure the earbud wearer is heard loud and clear.
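To get a feel for why arrival time is such a useful cue, here is a minimal Python sketch. It is not the researchers' method, which relies on neural networks. It simply cross-correlates two simulated microphone streams to estimate how many samples later a sound reaches one earbud than the other. The random signals, the 7-sample delay, and the helper names `estimate_lag` and `delay` are all assumptions made for illustration.

```python
import numpy as np


def estimate_lag(left, right):
    """Return the lag, in samples, at which `right` best aligns with `left`.

    A positive value means the sound reached the right microphone later.
    """
    corr = np.correlate(right, left, mode="full")
    # Index len(left) - 1 of the full cross-correlation corresponds to zero lag.
    return int(np.argmax(corr)) - (len(left) - 1)


def delay(signal, samples):
    """Delay a signal by a whole number of samples, padding with zeros."""
    return np.concatenate([np.zeros(samples), signal[:len(signal) - samples]])


rng = np.random.default_rng(0)
voice = rng.standard_normal(2000)  # stand-in for the wearer's voice (illustrative)
noise = rng.standard_normal(2000)  # stand-in for an off-axis noise source (illustrative)

# The wearer's mouth is roughly equidistant from both earbuds,
# so both microphones pick up the voice with no relative delay.
voice_lag = estimate_lag(voice, voice)

# A sound from one side reaches the far earbud a little later
# (7 samples here, an arbitrary assumed value).
noise_lag = estimate_lag(noise, delay(noise, 7))

print("voice lag:", voice_lag)
print("noise lag:", noise_lag)
```

In this toy setup the voice shows no lag between the two streams while the off-axis noise does, which is the kind of difference a system with a microphone in each ear can exploit.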

While the software the researchers created was lightweight enough to run on a mobile device, they relied on an NVIDIA TITAN desktop GPU to train the neural networks. They used both synthetic audio samples and real audio. Training took less than a day.

And the results, users reported, were dramatically better than those of commercially available earbuds. Those results are winning recognition industrywide.

The team took second place for best paper at last month’s ACM MobiSys 2022 conference. In addition to Kim, Chatterjee and Jayaram, the paper’s co-authors included Ira Kemelmacher-Shlizerman, an associate professor at the Allen School; Shwetak Patel, a professor in both the Allen School and the electrical and computer engineering department; and Shyam Gollakota and Steven Seitz, both professors in the Allen School.

Read the full paper here: https://dl.acm.org/doi/10.1145/3498361.3538933

To be sure, the system outlined in the paper can’t be adopted instantly. While many earbuds have two microphones per earbud, they only stream audio from one earbud. Industry standards are just catching up to the idea of processing multiple audio streams from earbuds.

Nevertheless, the researchers are hopeful their work, which is open source, will inspire others to couple neural networks and microphones to provide better-quality audio calls.

The ideas could also be useful for isolating and enhancing conversations taking place over smart speakers by harnessing them as ad hoc microphone arrays, Kim said, and even for tracking robot locations or aiding search and rescue missions.

Sounds good to us.

Featured image credit: Raymond Smith, University of Washington