AI is going to the dogs. Literally.

Colorado State University researchers Jason Stock and Tom Cavey have published a paper on an AI system to recognize and reward dogs for responding to commands.

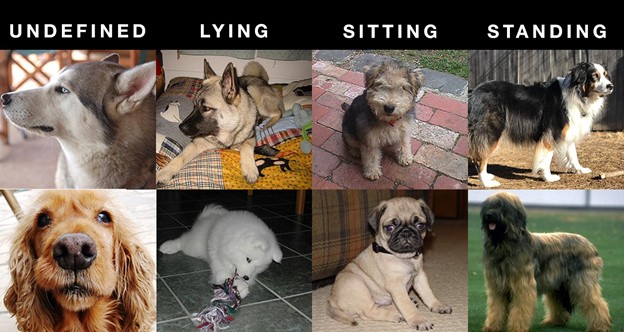

The graduate students in computer science trained image classification networks to determine whether a dog is sitting, standing or lying. If a dog responds to a command by adopting the correct posture, the machine dispenses it a treat.

The duo relied on the NVIDIA Jetson edge AI platform for real-time trick recognition and treats.

Stock and Cavey see their prototype system as a dog trainer’s aid — it handles the treats — or a way to school dogs on better behavior at home.

“We’ve demonstrated the potential for a future product to come out of this,” Stock said.

Fetching Dog Training Data

The researchers needed to fetch dog images that exhibited the three postures. They found that the Stanford Dogs dataset had more than 20,000 images showing dogs in many positions and at many image sizes, so it required preprocessing. They wrote a program to help label the images quickly.

To refine the model, they applied features of dogs from ImageNet to enable transfer learning. Next, they applied post-training and optimization techniques to boost the speed and reduce model size.
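The article does not say which post-training techniques the researchers used. As one illustration of how such a step can shrink a model, here is a minimal sketch of symmetric 8-bit weight quantization, a common post-training technique. The function names and weight values are made up for the example and are not taken from the paper.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero weights.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical weights, for illustration only.
weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Storing each weight in one byte instead of a 4-byte float cuts weight storage by roughly four times, at the cost of a rounding error of at most half the scale per weight.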

For optimizations, they tapped into NVIDIA’s JetPack SDK on Jetson, which offered an easy way to get the system up and running quickly and to access the TensorRT and cuDNN libraries, Stock said. NVIDIA TensorRT optimization libraries offered “significant improvements in speed,” he added.

Tapping into the university’s computing system, Stock trained the model overnight on two 24GB NVIDIA RTX 6000 GPUs.

“The RTX GPU is a beast — with 24GB of VRAM, the entire dataset can be loaded into memory,” he said. “That makes the entire process way faster.”

Deployed Models on Henry

The researchers tested their models on Henry, Cavey’s Australian Shepherd.

In tests, the models reached accuracy of up to 92 percent and ran split-second inference at nearly 40 frames per second.

Powered by the NVIDIA Jetson Nano, the system makes real-time decisions on dog behaviors and reinforces positive actions with a treat, transmitting a signal to a servo motor to release a reward.
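The decision step described above might look something like the following sketch. It assumes the classifier emits one posture label per frame and that a treat is released only after the commanded posture holds for a run of consecutive frames. The function name, the `dispense` callback and the `hold_frames` threshold are hypothetical and not taken from the paper.

```python
from collections import deque
from typing import Callable, Iterable

# The three posture classes named in the article.
POSTURES = ("sitting", "standing", "lying")


def reward_on_posture(
    command: str,
    frame_predictions: Iterable[str],
    dispense: Callable[[], None],
    hold_frames: int = 20,  # assumed: about half a second at 40 frames per second
) -> bool:
    """Dispense one treat once the commanded posture holds for hold_frames frames in a row."""
    if command not in POSTURES:
        raise ValueError(f"unknown command: {command!r}")
    recent = deque(maxlen=hold_frames)  # sliding window of match/no-match flags
    for label in frame_predictions:
        recent.append(label == command)
        if len(recent) == hold_frames and all(recent):
            dispense()  # on real hardware this would signal the servo motor
            return True
    return False


# Usage with a simulated prediction stream and a stand-in dispenser.
treats = []
frames = ["standing"] * 5 + ["sitting"] * 25
rewarded = reward_on_posture("sitting", frames, lambda: treats.append("treat"))
print(rewarded, len(treats))  # True 1
```

Requiring a run of matching frames is a design choice made for this sketch: it keeps a single misclassified frame from triggering a reward.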

“We looked at Raspberry Pi and Coral but neither was adequate, and the choice was obvious for us to use Jetson Nano,” said Cavey.

Biting into Explainable AI

Explainable AI helps provide transparency about the makeup of neural networks. It’s becoming more common in the financial services industry as a way to understand fintech models. Stock and Cavey included model interpretation in their paper to provide explainable AI for the pet industry.

They do this with images taken from the videos that show the posture analysis. One set of images relies on GradCAM, a common technique for displaying where a convolutional neural network model is focused. Another set explains the model using Integrated Gradients, which attributes the model’s prediction to individual pixels.
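To make the idea behind Integrated Gradients concrete, here is a minimal sketch on a toy model. It averages the model's gradients along a straight path from a baseline input to the real input, then scales by the difference between the two. The logistic scorer over three "pixels", its weights and the inputs are hypothetical stand-ins for a real image classifier, not the researchers' model.

```python
import math
from typing import Callable, List, Sequence


def integrated_gradients(
    grad_fn: Callable[[Sequence[float]], List[float]],
    x: Sequence[float],
    baseline: Sequence[float],
    steps: int = 200,
) -> List[float]:
    """Approximate Integrated Gradients with a midpoint Riemann sum from baseline to x."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_fn(point)):
            totals[i] += g
    # Average gradient along the path, scaled by each feature's distance from the baseline.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


# Toy stand-in for a classifier: a logistic score over three "pixels".
WEIGHTS = [2.0, -1.0, 0.5]  # hypothetical values


def score(p: Sequence[float]) -> float:
    z = sum(w * v for w, v in zip(WEIGHTS, p))
    return 1.0 / (1.0 + math.exp(-z))


def score_grad(p: Sequence[float]) -> List[float]:
    s = score(p)
    return [s * (1.0 - s) * w for w in WEIGHTS]  # analytic gradient of the logistic score


x = [1.0, 0.5, 0.2]
baseline = [0.0, 0.0, 0.0]  # an all-zero input, analogous to a black image
attributions = integrated_gradients(score_grad, x, baseline)
# Completeness check: the attributions should sum to score(x) - score(baseline).
print(attributions, sum(attributions), score(x) - score(baseline))
```

In this toy setup, a pixel with a positive weight receives a positive attribution and a pixel with a negative weight receives a negative one. For images, attributions like these are typically rendered as a heat map over the input.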

The researchers said it was important to create a trustworthy and ethical component of the AI system for trainers and general users. Otherwise, there’s no way to explain your methodology should it come into question.

“We can explain what our model is doing, and that might be helpful to certain stakeholders — otherwise how can you back up what your model is really learning?” said Cavey.

The NVIDIA Deep Learning Institute offers courses in computer vision and the Jetson Nano.