Editor’s note: The name of the NVIDIA Jarvis conversational AI framework was changed to NVIDIA Riva in July 2021. All references to the name have been updated in this blog.

We’ve all been there: on a road trip and hungry. Wouldn’t it be amazing to ask your car’s driving assistant for recommendations on nearby food, personalized to your taste?

Now, it’s possible for any business to build and deploy such experiences and many more with NVIDIA GPU systems and software libraries. That’s because NVIDIA Riva for conversational AI services and NVIDIA Merlin for recommender systems have entered open beta. Speaking today at the GPU Technology Conference, NVIDIA CEO Jensen Huang announced the news.

While AI for voice services and recommender systems has never been more needed in our digital worlds, development tools have lagged. And the need for better voice AI services is rising sharply.

More people are working from home and remotely learning, shopping, visiting doctors and more, putting strains on services and revealing shortcomings in user experiences. Some call centers report a 34 percent increase in hold times and a 68 percent increase in call escalations, according to a report from Harvard Business Review.

Meanwhile, current recommenders personalize the internet but often come up short. Retail recommenders suggest items people have just purchased or keep pursuing them with annoying promos. Media and entertainment recommendations are often more of the same, with little diversity. These systems are often fairly crude because they rely only on past recommendations or similarities.

NVIDIA Riva and NVIDIA Merlin allow companies to explore larger deep learning models and develop more nuanced and intelligent recommendation systems. Conversational AI services built on Riva and recommender systems built on Merlin offer businesses a fast track to better services.

Early Access Riva Adopter Advances

Some companies in the NVIDIA Developer program have already begun work on conversational AI services with NVIDIA Riva. Early adopters included Voca, an AI agent for call center support; Kensho, for automatic voice transcriptions for finance and business; and Square, offering a virtual assistant for scheduling appointments.

London-based Intelligent Voice, which offers high-performance speech recognition services, is always looking for more, said its CTO, Nigel Cannings.

“Riva takes a multimodal approach that fuses key elements of automatic speech recognition with entity and intent matching to address new use cases where high-throughput and low latency are required,” he said. “The Riva API is very easy to use, integrate and customize to our customers’ workflows for optimized performance.”

Riva has allowed Intelligent Voice, a member of the NVIDIA Inception program for turbocharging startups, to pivot quickly during the COVID crisis and bring to market in record time a completely new product, Myna, that allows accurate and useful meeting recall.

Better Conversational AI Needed

In the U.S., call center assistants handle 200 million calls per day, and telemedicine services enable 2.4 million daily physician visits, demanding transcriptions with high accuracy.

Traditional voice systems leave room for improvement. With processing constrained by CPUs, their lower-quality models result in lag-filled, robotic-sounding voice products. Riva includes Megatron-BERT models, the largest today, to offer the highest accuracy and lowest latency.

Deploying real-time conversational AI for natural interactions requires model computations to finish in under 300 milliseconds, versus 600 milliseconds for CPU-powered models.

Riva provides more natural interactions through sensor fusion — the integration of video cameras and microphones. Its ability to handle multiple data streams in real time enables the delivery of improved services.

Complex Model Pipelines, Easier Solutions

Model pipelines in conversational AI can be complex and require coordination across multiple services.

Running at scale requires microservices for automatic speech recognition (ASR), natural language understanding (NLU), text-to-speech (TTS) and domain-specific apps. These highly specialized tasks speed up when processed in parallel, giving a 3x cost advantage over a competing CPU-only server.

NVIDIA Riva is a comprehensive framework, offering software libraries for building conversational AI applications and including GPU-optimized services for ASR, NLU, TTS and computer vision that use the latest deep learning models.

Developers can meld these multiple skills within their applications, and quickly help our hungry vacationer find just the right place.
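To make the idea of chaining skills concrete, here is a minimal Python sketch of the hungry-traveler request flowing through ASR, NLU, a recommender and TTS. It is not the Riva or Merlin API: every function, class and restaurant name below is a hypothetical stand-in, and the only figure taken from this post is the 300-millisecond real-time target.

```python
import time
from dataclasses import dataclass
from typing import Tuple

# Real-time target quoted in this post; everything else here is illustrative.
LATENCY_BUDGET_MS = 300.0


@dataclass
class Intent:
    name: str
    cuisine: str


def recognize_speech(audio: bytes) -> str:
    """Hypothetical ASR stub: treats the bytes as an already-decoded transcript."""
    return audio.decode("utf-8")


def understand(transcript: str) -> Intent:
    """Hypothetical NLU stub: keyword matching stands in for a real model."""
    known_cuisines = ("thai", "pizza", "tacos")
    text = transcript.lower()
    cuisine = next((c for c in known_cuisines if c in text), "any")
    return Intent(name="find_food", cuisine=cuisine)


def recommend(intent: Intent) -> str:
    """Hypothetical recommender stub; the place names are made up."""
    nearby = {"thai": "Bangkok Garden", "pizza": "Slice House", "tacos": "El Camino"}
    return nearby.get(intent.cuisine, "Roadside Diner")


def synthesize(reply: str) -> bytes:
    """Hypothetical TTS stub: returns encoded text instead of audio samples."""
    return reply.encode("utf-8")


def handle_request(audio: bytes) -> Tuple[bytes, float]:
    """Run ASR -> NLU -> recommender -> TTS and report elapsed milliseconds."""
    start = time.perf_counter()
    transcript = recognize_speech(audio)
    intent = understand(transcript)
    place = recommend(intent)
    speech = synthesize(f"Try {place}, it is close by.")
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return speech, elapsed_ms


if __name__ == "__main__":
    speech, elapsed_ms = handle_request(b"I'm hungry, any good Thai nearby?")
    print(speech.decode("utf-8"))
    print("within budget:", elapsed_ms < LATENCY_BUDGET_MS)
```

In a real deployment each stub would be a call to a separate GPU-backed service, and the elapsed-time check is where the end-to-end latency target would be enforced.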

Merlin Creates a More Relevant Internet

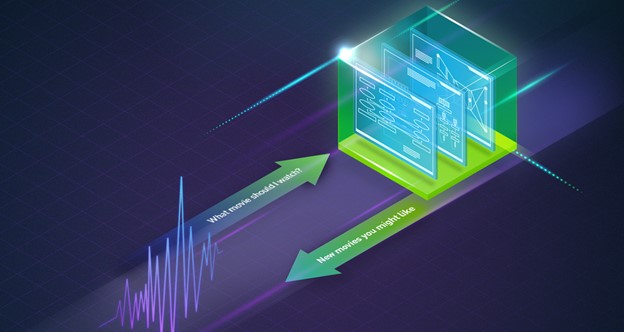

Recommender systems are the engine of the personalized internet and they’re everywhere online. They suggest food you might like, offer items related to your purchases and can capture your interest in the moment with retargeted advertising for product offers as you bounce from site to site.

But when recommenders don’t do their best, people may walk away empty-handed and businesses leave money on the table.

On some of the world’s largest online commerce sites, recommender systems account for as much as 30 percent of revenue. Just a 1 percent improvement in the relevance of recommendations can translate into billions of dollars in revenue.

Recommenders at Scale on GPUs

At Tencent, recommender systems support videos, news, music and apps. Using NVIDIA Merlin, the company reduced its recommender training time from 20 hours to three.

“With the use of the Merlin HugeCTR advertising recommendation acceleration framework, our advertising business model can be trained faster and more accurately, which is expected to improve the effect of online advertising,” said Ivan Kong, AI technical leader at Tencent TEG.
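For a sense of scale, Tencent’s reported drop in training time from 20 hours to three can be worked out in a few lines of Python. The two helper functions are illustrative names, not part of Merlin; the only inputs are the figures quoted above.

```python
def speedup(before_hours: float, after_hours: float) -> float:
    """How many times faster the new training run is."""
    return before_hours / after_hours


def reduction_percent(before_hours: float, after_hours: float) -> float:
    """Share of the original training time that was eliminated."""
    return (before_hours - after_hours) / before_hours * 100.0


# Figures reported in this post: 20 hours before, 3 hours after.
before, after = 20.0, 3.0
print(f"{speedup(before, after):.1f}x faster")                 # 6.7x faster
print(f"{reduction_percent(before, after):.0f}% less time")    # 85% less time
```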

Democratizing Access to Recommenders

Now everyone has access to the NVIDIA Merlin application framework, which allows businesses of all kinds to build recommenders accelerated by NVIDIA GPUs.

Merlin’s collection of libraries includes tools for building deep learning-based systems that provide better predictions than traditional methods and increase click-through rates. Each stage of the pipeline is optimized to support hundreds of terabytes of data, all accessible through easy-to-use APIs.

Merlin is used at one of the world’s largest media companies and is in testing with hundreds of companies worldwide. Social media giants in the U.S. are experimenting with its ability to share related news. Streaming media services are testing it for suggestions on next views and listens. And major retailers are looking at it for suggestions on next items to purchase.

Those who are interested can learn more about the technology advances behind Merlin since its initial launch, including support for NVTabular, multiple GPUs, HugeCTR and NVIDIA Triton Inference Server.

Businesses can sign up for the NVIDIA Riva beta for access to the latest developments in conversational AI, and get started with the NVIDIA Merlin beta for the fastest way to upload terabytes of training data and deploy recommenders at scale.

It’s not too late to get access to hundreds of live and on-demand talks at GTC. Register now through Oct. 9 using promo code CMB4KN to get 20 percent off.