Coding assistants or copilots — AI-powered assistants that can suggest, explain and debug code — are fundamentally changing how software is developed for both experienced and novice developers.

Experienced developers use these assistants to stay focused on complex coding tasks, reduce repetitive work and explore new ideas more quickly. Newer coders — like students and AI hobbyists — benefit from coding assistants that accelerate learning by describing different implementation approaches or explaining what a piece of code is doing and why.

Coding assistants can run in cloud environments or locally. Cloud-based coding assistants can be used from anywhere but come with some limitations and often require a subscription. Local coding assistants avoid these issues but need performant hardware to run well.

NVIDIA GeForce RTX GPUs provide the necessary hardware acceleration to run local assistants effectively.

Code, Meet Generative AI

Traditional software development includes many mundane tasks such as reviewing documentation, researching examples, setting up boilerplate code, authoring code with appropriate syntax, tracing down bugs and documenting functions. These are essential tasks that can take time away from problem solving and software design. Coding assistants help streamline such steps.

Many AI assistants integrate with popular integrated development environments (IDEs) like Microsoft Visual Studio Code or JetBrains’ PyCharm, which embeds AI support directly into existing workflows.

There are two ways to run coding assistants: in the cloud or locally.

Cloud-based coding assistants require source code to be sent to external servers before responses are returned. This approach can be laggy and impose usage limits. Some developers prefer to keep their code local, especially when working with sensitive or proprietary projects. Plus, many cloud-based assistants require a paid subscription to unlock full functionality, which can be a barrier for students, hobbyists and teams that need to manage costs.

Coding assistants that run in a local environment avoid these issues, enabling cost-free access while keeping source code on the device.

Get Started With Local Coding Assistants

Tools that make it easy to run coding assistants locally include:

- Continue.dev — An open-source extension for the VS Code IDE that connects to local large language models (LLMs) via Ollama, LM Studio or custom endpoints. This tool offers in-editor chat, autocomplete and debugging assistance with minimal setup. Get started with Continue.dev using the Ollama backend for local RTX acceleration.

- Tabby — A secure and transparent coding assistant that’s compatible across many IDEs with the ability to run AI on NVIDIA RTX GPUs. This tool offers code completion, answering queries, inline chat and more. Get started with Tabby on NVIDIA RTX AI PCs.

- OpenInterpreter — An experimental but rapidly evolving interface that combines LLMs with command-line access, file editing and agentic task execution. Ideal for automation and DevOps-style tasks. Get started with OpenInterpreter on NVIDIA RTX AI PCs.

- LM Studio — A graphical user interface-based runner for local LLMs that offers chat, context window management and system prompts. Optimal for testing coding models interactively before IDE deployment. Get started with LM Studio on NVIDIA RTX AI PCs.

- Ollama — A local AI model inferencing engine that enables fast, private inference of models like Code Llama, StarCoder2 and DeepSeek. It integrates seamlessly with tools like Continue.dev.

These tools support models served through frameworks like Ollama or llama.cpp, and many are now optimized for GeForce RTX and NVIDIA RTX PRO GPUs.
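To make the idea of a locally served model concrete, here is a minimal Python sketch of how a tool might send a prompt to an Ollama server running on the same machine. It assumes Ollama is listening on its default local port and that a code model has already been pulled; the model tag `codellama` and the helper names `build_generate_request` and `ask_local_model` are illustrative choices, not part of any tool described above.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="codellama"):
    """Build the JSON body for a single, non-streaming completion.

    The model tag is an assumption; use whichever model has been pulled.
    """
    return {"model": model, "prompt": prompt, "stream": False}


def ask_local_model(prompt, model="codellama", url=OLLAMA_URL, timeout=120):
    """Send the prompt to the local server and return the generated text."""
    body = json.dumps(build_generate_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    # The request goes to localhost, so the prompt never leaves the machine.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the server running, a call such as `ask_local_model("Write a Python function that reverses a string.")` would return the model's reply as a string. Editor extensions handle this plumbing automatically; the sketch only shows that the whole round trip stays on the local machine.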

See AI-Assisted Learning on RTX in Action

Running on a GeForce RTX-powered PC, Continue.dev paired with the Gemma 12B Code LLM helps explain existing code, explore search algorithms and debug issues — all entirely on device. Acting like a virtual teaching assistant, the assistant provides plain-language guidance, context-aware explanations, inline comments and suggested code improvements tailored to the user’s project.
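The teaching-assistant behavior can be approximated outside the editor as well. The sketch below is not how Continue.dev works internally; it is a rough illustration, assuming a local Ollama server on its default port, of pairing a system prompt with a code snippet in a chat request. The model tag `gemma3:12b`, the system prompt wording and the function names are all assumptions made for the example.

```python
import json
import urllib.request

# Assumption: Ollama's chat endpoint on its default local port.
CHAT_URL = "http://localhost:11434/api/chat"

# Hypothetical system prompt that steers the model toward tutoring.
SYSTEM_PROMPT = (
    "You are a patient teaching assistant. Explain what the code does "
    "and why, in plain language, then suggest one improvement."
)


def build_chat_request(code, question, model="gemma3:12b"):
    """Combine the system prompt, the question and the code into one request."""
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"{question}\n\n{code}"},
    ]
    return {"model": model, "messages": messages, "stream": False}


def explain_code(code, question="What does this code do?",
                 model="gemma3:12b", url=CHAT_URL, timeout=120):
    """Ask the local model to explain a snippet and return its answer."""
    body = json.dumps(build_chat_request(code, question, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.loads(response.read().decode("utf-8"))
    # Non-streaming chat replies carry the text under message.content.
    return reply["message"]["content"]
```

Keeping the tutoring instructions in a system message, separate from the user's question, makes it easy to reuse the same assistant persona across many snippets.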

This workflow highlights the advantage of local acceleration: the assistant is always available, responds instantly and provides personalized support, all while keeping the code private on device and making the learning experience immersive.

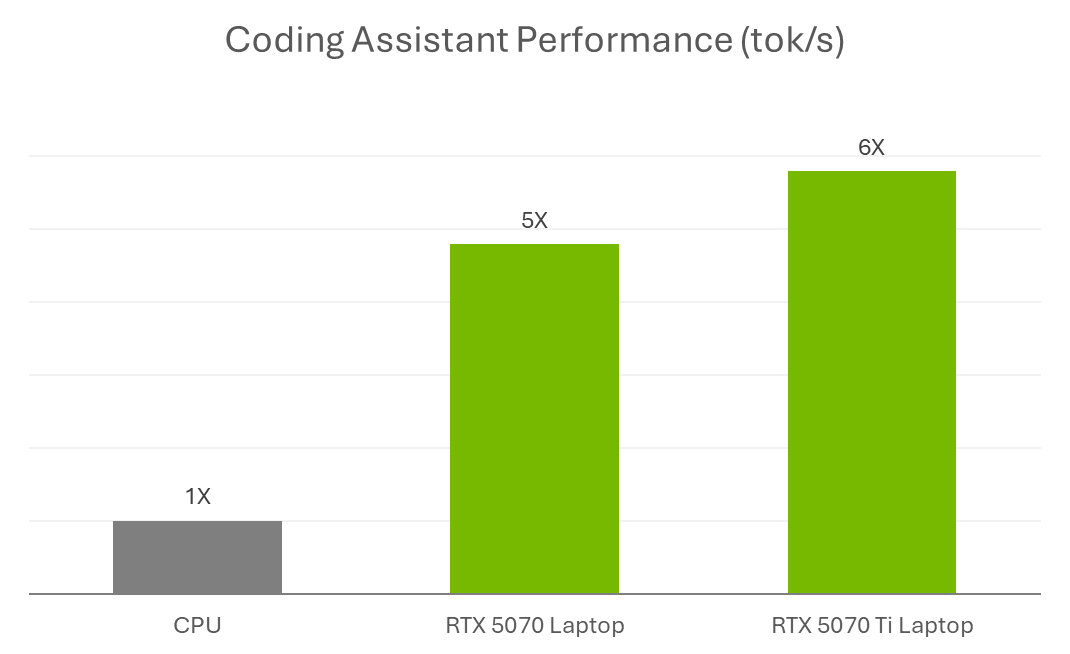

That level of responsiveness comes down to GPU acceleration. Models like Gemma 12B are compute-heavy, especially when they’re processing long prompts or working across multiple files. Running them locally without a GPU can feel sluggish — even for simple tasks. With RTX GPUs, Tensor Cores accelerate inference directly on the device, so the assistant is fast, responsive and able to keep up with an active development workflow.
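One rough way to see the effect of hardware acceleration is to compute generation throughput from the timing fields that an Ollama server includes in a non-streaming response: `eval_count` (tokens generated) and `eval_duration` (nanoseconds spent generating). The helper name `tokens_per_second` is hypothetical, and the sample values below are made up to show the arithmetic; they are not a benchmark.

```python
def tokens_per_second(response):
    """Compute generation throughput from a non-streaming Ollama response.

    Expects the response dict to contain eval_count (tokens) and
    eval_duration (nanoseconds); returns 0.0 if timing data is missing.
    """
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return response.get("eval_count", 0) / (duration_ns / 1e9)


# Made-up numbers for illustration only: 200 tokens generated in 4 seconds.
sample = {"eval_count": 200, "eval_duration": 4_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")  # 50.0 tokens/s
```

Comparing this figure for the same prompt and model with and without GPU offload gives a simple, if informal, view of how much acceleration contributes to responsiveness.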

Whether used for academic work, coding bootcamps or personal projects, RTX AI PCs are enabling developers to build, learn and iterate faster with AI-powered tools.

For those just getting started — especially students building their skills or experimenting with generative AI — NVIDIA GeForce RTX 50 Series laptops feature specialized AI technologies that accelerate top applications for learning, creating and gaming, all on a single system. Explore RTX laptops ideal for back-to-school season.

And to encourage AI enthusiasts and developers to experiment with local AI and extend the capabilities of their RTX PCs, NVIDIA is hosting a Plug and Play: Project G-Assist Plug-In Hackathon — running virtually through Wednesday, July 16. Participants can create custom plug-ins for Project G-Assist, an experimental AI assistant designed to respond to natural language and extend across creative and development tools. It’s a chance to win prizes and showcase what’s possible with RTX AI PCs.

Join NVIDIA’s Discord server to connect with community developers and AI enthusiasts for discussions on what’s possible with RTX AI.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, digital humans, productivity apps and more on AI PCs and workstations.
