For autonomous vehicles, what’s on the surface matters.

While humans are taught to avoid snap judgments, self-driving cars must be able to see, detect and act quickly and accurately to operate safely. This capability requires a robust perception software stack that can comprehensively identify and track the objects in the vehicle's surroundings.

Startups worldwide are developing these perception stacks, using the high-performance, energy-efficient compute of NVIDIA DRIVE AGX to deliver highly accurate object detection to autonomous vehicle manufacturers.

The NVIDIA DRIVE AGX platform processes data from a range of sensors — including camera, radar, lidar and ultrasonic — to help autonomous vehicles perceive the surrounding environment, localize to a map, then plan and execute a safe path forward. This AI supercomputer enables autonomous driving, in-cabin functions and driver monitoring, plus other safety features — all in a compact package.

Hundreds of automakers, suppliers and startups are building self-driving vehicles on NVIDIA DRIVE AGX, which is why perception stack developers across the globe have chosen to build their solutions on the same AI platform.

Development From Day One

NVIDIA DRIVE is the place to start for companies looking to get their perception solutions up and running.

aiMotive, a self-driving startup based in Hungary, has built a modular software stack, called aiDrive, that delivers comprehensive perception capabilities for automated driving solutions.

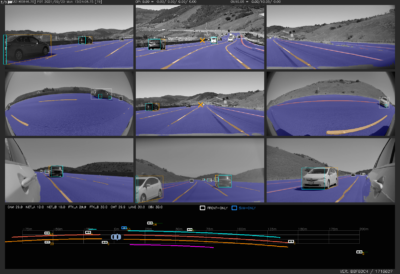

The company began building its solution on an NVIDIA DRIVE compute platform in 2016. Running on this high-performance, energy-efficient compute, aiDrive can perform perception using mono, stereo and fisheye cameras and fuse data from radar, lidar and other sensors, making the solution flexible and scalable.

“We’ve been using NVIDIA DRIVE from day one,” said Péter Kovács, aiMotive’s senior vice president of aiDrive. “The platforms work turnkey and support easy cross-target development — it’s also a technology ecosystem that developers are familiar with.”

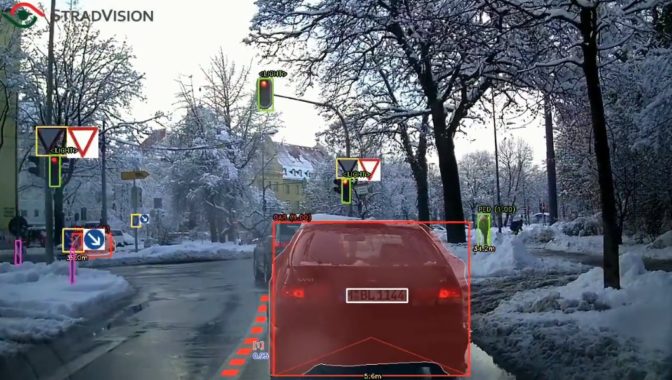

StradVision, a startup based in South Korea, was formed by perception specialists in 2014 to build advanced driver assistance systems at scale. By developing on NVIDIA DRIVE AGX, the company has deployed a robust perception deep neural network for AI-assisted driving platforms.

The DNN, known as SVNet, is one of the few networks that meet the accuracy and computational requirements of production vehicles.

Performance at Every Level

Even for lower levels of autonomy, such as ADAS or AI-assisted driving, a robust perception stack is critical for safety.

Silicon Valley startup Phantom AI has drawn on years of automotive and tech industry experience to develop an intelligent perception stack that can predict object motion. The computer vision solution, known as PhantomVision, covers a 360-degree view around the vehicle using a combination of front-, side- and rear-view cameras.

Real-time detection and target tracking in a bird's-eye view provide accurate motion estimates for road objects, and the high-performance processing of DRIVE AGX lets the software run these perception functions live.

With a mission to create a safer road environment for all users, Chinese startup CalmCar has built a multi-camera active surround-view perception system. With the automotive-grade NVIDIA DRIVE Xavier at its core, CalmCar's solution enables Level 2+ driving, valet parking and mapping.

By developing comprehensive solutions on NVIDIA DRIVE, these startups are delivering accurate and robust perception to AI-assisted and autonomous vehicles around the world.