It was the kind of career moment developers dream of but rarely experience. To whoops and cheers from the crowd at SIGGRAPH 2016, Dirk Van Gelder of Pixar Animation Studios presented Universal Scene Description.

USD would become the open-source glue filmmakers used to bind their favorite tools together so they could collaborate with colleagues around the world, radically simplifying the job of creating animated movies. At its birth, it had backing from three seminal partners—Autodesk, Foundry and SideFX.

Today, more than a dozen companies from Apple to Unity support USD. The standard is on the cusp of becoming the solder that fuses all sorts of virtual and physical worlds into environments where everything from skyscrapers to sports cars and smart cities will be designed and tested in simulation.

What’s more, it’s helping spawn machinima, an emerging form of digital storytelling based on game content.

How USD Found an Audience

The 2016 debut “was pretty exciting” for Van Gelder, who spent more than 20 years developing Pixar’s tools.

“We had talked to people about USD, but we weren’t sure they’d embrace it,” he said. “I did a live demo on a laptop of a scene from Finding Dory so they could see USD’s scalability and performance and what we at Pixar could do with it, and they really got the message.”

One of those in the crowd was Rev Lebaredian, vice president of simulation technology at NVIDIA.

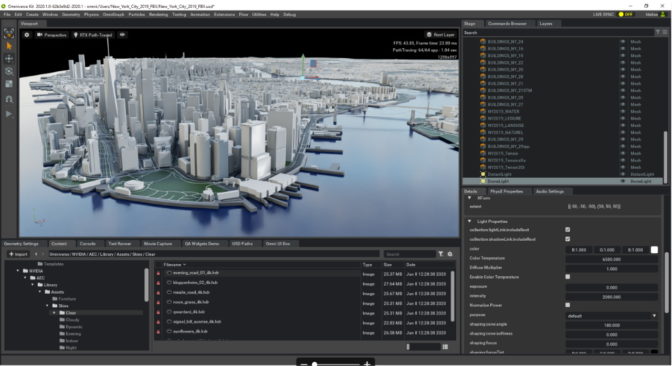

“Dirk’s presentation of USD live and in real time inspired us. It triggered a series of ideas and events that led to what is NVIDIA Omniverse today, with USD as its soul. So, it was fate that Dirk would end up on the Omniverse team,” said Lebaredian. Omniverse, a 3D graphics platform now in open beta, aims to carry the USD vision forward in collaboration with Pixar and the 3D graphics community.

Developers Layer Effects on 3D Graphics

Adobe’s developers were among many others who welcomed USD and now support it in their products.

“USD has a whole world of features that are incredibly powerful,” said Davide Pesare, who worked on USD at Pixar and is now a senior R&D manager at Adobe.

“For example, with USD layering, artists can work in the same scene without stepping on each other’s toes. Each artist has his or her own layer, so you can let the modeler work while someone else is building the shading,” he said.
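The layering idea Pesare describes can be illustrated with a toy model. The sketch below is plain Python and does not use the USD API; the layer names, prim paths and attribute values are hypothetical, and real USD composition is far richer than this. It only shows the core rule that each artist writes to a separate layer and that, where two layers set the same attribute, the stronger layer's opinion wins.

```python
# Toy model of layered scene editing. This is NOT the USD API.
# Each layer maps (prim_path, attribute) -> value. Layers listed first are
# "stronger": their opinion wins when two layers set the same attribute.

def compose(layers):
    """Merge layers, ordered strongest first, into one resolved scene dict."""
    resolved = {}
    for layer in layers:  # iterate from strongest to weakest
        for key, value in layer.items():
            # setdefault keeps the first (strongest) opinion seen for a key
            resolved.setdefault(key, value)
    return resolved

# Hypothetical layers owned by two different artists.
modeling_layer = {
    ("/Car/Body", "points"): "body_mesh_v3",
    ("/Car/Body", "color"): "gray",  # placeholder color from the modeler
}
shading_layer = {
    ("/Car/Body", "color"): "candy_red",
    ("/Car/Body", "roughness"): 0.2,
}

# The shading layer is stronger, so its color overrides the placeholder,
# while the modeler's geometry passes through untouched.
scene = compose([shading_layer, modeling_layer])
```

Because neither artist edits the other's layer, the modeler can keep revising the mesh while the shading work continues, and the composed scene picks up both sets of changes.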

“Today USD has spread beyond the film industry where it is pervasive in animation and special effects. Game developers are looking at it, Apple’s products can read it, we have partners in architecture using it and the number of products compatible with USD is only going to grow,” Pesare said.

Building a Virtual 3D Home for Architects

Although it got its start in the movies, USD can play many roles.

Millions of architects, engineers and designers need a way to quickly review progress on construction projects with owners and real-estate developers. Each stakeholder wants to use different programs, often running on different computers, tablets or even handsets. It’s a script for an IT horror film where USD can write a happy ending.

Companies such as Autodesk, Bentley Systems, McNeel & Associates and Trimble Inc. are already exploring what USD can do for this community. NVIDIA used Omniverse to create a video showing some of the possibilities, such as previewing how the sun will play on the glassy interior of a skyscraper through the day.

Product Design Comes Alive with USD

It’s a similar story with a change of scene in the manufacturing industry. Here, companies have a cast of thousands of complex products they want to quickly design and test, ranging from voice-controlled gadgets to autonomous trucks.

The process requires iterations using programs in the hands of many kinds of specialists who demand photorealistic 3D models. Beyond de rigueur design reviews, they dream of possibilities such as putting visualizations in the hands of online customers.

Showing the shape of things to come, the Omniverse team produced a video for the debut of the NVIDIA DGX A100 system with exploding views of how its 30,000 components snap into a million drill holes. More recently, it generated a video of NVIDIA’s GeForce RTX 30 Series graphics card (below), complete with a virtual tour of its new cooling subsystem, thanks to USD in Omniverse.

“These days my team spends a lot of time working on real-time physics and other extensions of USD for autonomous vehicles and robotics for the NVIDIA Isaac and DRIVE platforms,” Van Gelder said.

To show what’s possible today, engineers used USD to import into Omniverse an accurately modeled luxury car and details of a 17-mile stretch of highway around NVIDIA’s Silicon Valley headquarters. The simulation, to be shown this week at GTC, demonstrates the potential for environments detailed enough to test both vehicles and their automated driving capabilities.

Another team imported Kaya, a robotic car for consumers, so users could program the digital model and test its behavior in an Omniverse simulation before building or buying a physical robot.

The simulation was accurate despite the fact “the wheels are insanely complex because they can drive forward, backward or sideways,” said Mike Skolones, manager of the team behind NVIDIA Isaac Sim.

Lights! Camera! USD!

In gaming, Epic’s Unreal Engine supports USD, and Unity and Blender are working to support it as well. Their work is accelerating the rise of machinima, a movie-like spinoff from gaming demonstrated in a video for NVIDIA Omniverse Machinima.

Meanwhile, back in Hollywood, studios are well along in adopting USD.

Pixar produced Finding Dory using USD. DreamWorks Animation described its process for adopting USD to create the 2019 feature How to Train Your Dragon: The Hidden World. Disney Animation Studios blended USD into its pipeline for animated features, too.

Steering USD into the Omniverse

NVIDIA and partners hope to take USD into all these fields and more with Omniverse, an environment one team member describes as “like Google Docs for 3D graphics.”

Omniverse plugs the power of NVIDIA RTX real-time ray-tracing graphics into USD’s collaborative, layered editing. The recent “Marbles at Night” video (below), created by a dozen artists scattered across the U.S., Australia, Poland, Russia and the U.K., showcased that blend.

That’s getting developers like Pesare of Adobe excited.

“All industries are going to want to author everything with real-time texturing, modeling, shading and animation,” said Pesare.

That will pave the way for a revolution in people consuming real-time media with AR and VR glasses linked over 5G networks for immersive, interactive experiences anywhere, he added.

He’s one of more than 400 developers who’ve had hands-on time with Omniverse so far. Others come from companies like Ericsson, Foster + Partners and Industrial Light & Magic.

USD Gives Lunar Explorers a Hand

The Frontier Development Lab (FDL), a NASA partner, recently approached NVIDIA for help simulating light on the surface of the moon.

Using data from a lunar satellite, the Omniverse team generated images FDL used to create a video for a public talk, explaining its search for water ice on the moon and a landing site for a lunar rover.

Back on Earth, challenges ahead include using USD’s Hydra rendering framework to deliver, at 30 frames per second, content that might blend images from a dozen sources for a filmmaker, an architect or a product designer.

“It’s a Herculean effort to get this in the hands of the first customers for production work,” said Richard Kerris, general manager of NVIDIA’s media and entertainment group and former chief technologist at Lucasfilm. “We’re effectively building an operating system for creatives across multiple markets, so support for USD is incredibly important,” he said.

Kerris called on anyone with an RTX-enabled system to get their hands on the open beta of Omniverse and drive the promise of USD forward.

“We can’t wait to see what you will build,” he said.
