Two chance encounters propelled remote direct memory access from a good but obscure idea for fast networks into the jet fuel for the world’s most powerful supercomputers.

The lucky breaks launched the fortunes of an Israel-based startup that had staked its future on InfiniBand, a network based on RDMA. Later, that startup, Mellanox Technologies, helped steer RDMA into mainstream computing, today’s AI boom and the tech industry’s latest multibillion-dollar merger.

It started in August 2001.

D.K. Panda, a professor at Ohio State University, met Kevin Deierling, vice president of marketing for newly funded Mellanox. Panda explained how his lab’s work on software for the message-passing interface (MPI) was fueling a trend toward assembling clusters of low-cost systems for high-performance computing.

The problem was that the fast networks those clusters used, from small companies such as Myrinet and Quadrics, were proprietary and expensive.

It was an easy deal for a startup on a shoestring. For the price of a few of its first InfiniBand cards, some pizza and Jolt cola, grad students got MPI running on Mellanox’s chips. U.S. national labs, also eyeing InfiniBand, pitched in, too. Panda’s team debuted the open source MVAPICH at the Supercomputing 2002 conference.

“People got performance gains over Myrinet and Quadrics, so it had a snowball effect,” said Panda of the software that recently passed a milestone of half a million downloads.

Pouring RDMA on a Really Big Mac

The demos captured the imagination of an ambitious assistant professor of computer science from Virginia Tech. Srinidhi Varadarajan had research groups across the campus lining up to use an inexpensive 80-node cluster he had built, but that was just his warm-up.

An avid follower of the latest tech, Varadarajan set his sights on IBM’s PowerPC 970, a new 2-GHz CPU with leading floating-point performance, backed by IBM’s heritage of solid HPC compilers. Apple was just about to come out with its Power Mac G5 desktop using two of the CPUs.

With funding from the university and a government grant, Varadarajan placed an order with the local Apple sales office for 1,100 of the systems on a Friday. “By Sunday morning we got a call back from Steve Jobs for an in-person meeting on Monday,” he said.

From the start, Varadarajan wanted the Macs linked on InfiniBand. “We wanted the high bandwidth and low latency because HPC apps tend to be latency sensitive, and InfiniBand would put us on the leading edge of solving that problem,” he said.

Mellanox Swarms an InfiniBand Supercomputer

Mellanox gave its new and largest customer first-class support. CTO Michael Kagan helped design the system.

An architect on Kagan’s team, Dror Goldenberg, was having lunch at his desk at Mellanox’s Israel headquarters, near Haifa, when he heard about the project and realized he wanted to be there in person.

He took a flight to Silicon Valley that night and spent the next three weeks camped out in a secret lab at One Infinite Loop (Apple’s Cupertino, Calif., headquarters), where he helped develop Mellanox’s first drivers for the Mac.

“That was the only place we could get access to the Mac G5 prototypes,” said Goldenberg of the famously secretive company.

He worked around the clock with Virginia Tech researchers at Apple and Mellanox engineers back in Israel, getting the company’s network running on a whole new processor architecture.

The next stage brought a challenge of historic proportions. Goldenberg and others took up residence for weeks in a Virginia Tech server room, stacking computers to unheard-of heights in the days when Amazon was mainly known as a rain forest.

“It was crashing, but only at very large scale, and we couldn’t replicate the problem at small scale,” he said. “I was calling people back at headquarters about unusual bit errors. They said it didn’t make sense,” he added.

Energized by a new class of computer science issues, they often worked 22-hour days. They took catnaps in sleeping bags in between laying 2.3 miles of InfiniBand cable in a race to break records on the TOP500 list of the world’s biggest supercomputers.

Linking 1,100 off-the-shelf computers into one, “we stressed the systems to levels no one had seen before,” he said.

“Today, I know when you run such large jobs heat goes up, fans go crazy and traffic patterns on the wire change, creating different frequencies that can cause bit flips,” said Goldenberg, now a seasoned vice president of software architecture with the world’s largest cloud service providers among his customers.

RDMA Fuels 10 Teraflops and Beyond

Varadarajan was “a crazy guy, writing object code even though he was a professor,” recalled Kagan.

Benchmarks plateaued around 9.3 teraflops, just shy of the 10-teraflops target that gave System X its name. “The results were changing almost daily, and we had two days left on the last weekend when a Sunday night run popped out 10.3 teraflops,” Varadarajan recalled.

The $5.2 million system was ranked No. 3 on the TOP500 in November 2003. The fastest system at the time, the Earth Simulator in Japan, had cost an estimated $350 million.

“That was our debut in HPC,” said Kagan. “Now more than half the systems on the TOP500 use our chips with RDMA, and HPC is a significant portion of our business,” he added.

RDMA Born in November 1993

It was a big break for the startup and the idea of RDMA, first codified in a November 1993 patent by a team of Hewlett-Packard engineers.

Like many advances, the idea behind RDMA was simple, yet fundamental. If you give networked systems a way to access each other’s main memory without interrupting the processor or operating system, you can drive down latency, drive up throughput and simplify life for everybody.

The approach promised a way to eliminate a rat’s nest of back-and-forth traffic between systems that was slowing computers.
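To make the idea concrete, here is a toy model in Python. It is only a sketch: real RDMA is carried out by network-adapter hardware, not by Python objects, and the `Host` class, the `rdma_write` function and the interrupt counter are hypothetical names invented for illustration. It contrasts a conventional delivery path, where the receiving CPU is interrupted for every message, with an RDMA-style path that places data directly in the target’s memory.

```python
# Toy model only: real RDMA is done by NIC hardware, not by Python objects.
# All names here (Host, rdma_write, cpu_interrupts) are hypothetical.

class Host:
    """A simulated host with main memory and a count of CPU interruptions."""

    def __init__(self, name, size):
        self.name = name
        self.memory = bytearray(size)   # stands in for the host's main memory
        self.cpu_interrupts = 0         # times the CPU/OS had to handle traffic

    def receive_via_os(self, offset, payload):
        """Conventional path: the OS is interrupted and copies data into place."""
        self.cpu_interrupts += 1
        self.memory[offset:offset + len(payload)] = payload


def rdma_write(target, offset, payload):
    """RDMA-style path: the simulated adapter writes memory directly.

    The target's CPU is not involved, so its interrupt counter is untouched.
    """
    target.memory[offset:offset + len(payload)] = payload


def send_messages(target, messages, use_rdma):
    """Deliver messages back to back into the target's memory."""
    offset = 0
    for payload in messages:
        if use_rdma:
            rdma_write(target, offset, payload)
        else:
            target.receive_via_os(offset, payload)
        offset += len(payload)


messages = [b"abcd", b"efgh", b"ijkl"]

conventional = Host("conventional", 16)
send_messages(conventional, messages, use_rdma=False)

direct = Host("direct", 16)
send_messages(direct, messages, use_rdma=True)

# Same data arrives either way; only the CPU involvement differs.
print(conventional.cpu_interrupts, direct.cpu_interrupts)
```

Both hosts end up with identical memory contents. The difference is that the conventional host’s processor was pulled away once per message, while the RDMA-style host’s processor was never disturbed.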

RoCE: RDMA Goes Mainstream

After its first success in supercomputers, baking RDMA into mature, mainstream Ethernet networks was a logical next step, but it took hard work and time. The InfiniBand Trade Association defined an initial version of RDMA over Converged Ethernet (RoCE, pronounced “rocky”) in 2010, and followed in 2014 with today’s more complete version, which supports routing.

Mellanox helped write the spec and rolled RoCE into ConnectX, a family of chips that also support the high data rates of InfiniBand.

“That made them early with 25/50G and 100G Ethernet,” said Bob Wheeler, a networking analyst with The Linley Group. “When hyperscalers moved beyond 10G, Mellanox was the only game in town,” he said, noting OEMs jumped on the bandwagon to serve their enterprise customers.

IBM and Microsoft are vocal in their support for RoCE in their public clouds. And Alibaba recently “sent us a note that the network was great for its big annual Singles Day sale,” said Kagan.

Smart NICs: Chips Off the Old RDMA Block

Now that RDMA is nearly everywhere, it’s becoming invisible.

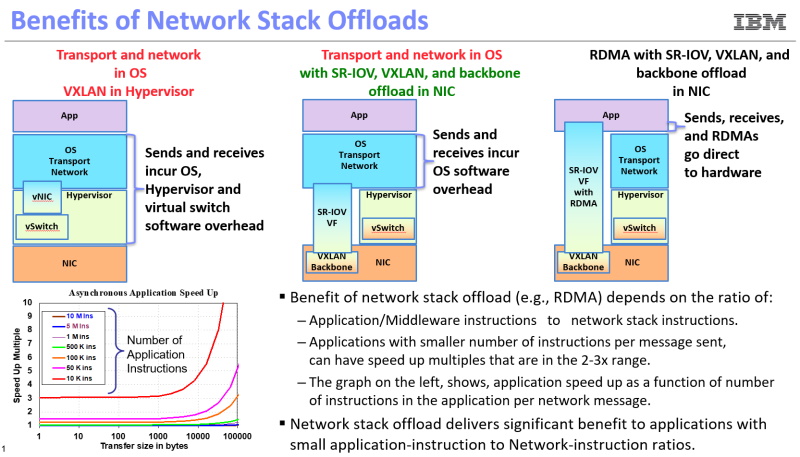

Today’s networks are increasingly defined by high-level software and virtualization that simplifies operations in part by hiding details of underlying hardware. Under the covers, RDMA is becoming just one of an increasing number of functions, many running on embedded processor cores on smart network-interface cards, aka smart NICs.

Today smart NICs enable adaptive routing, handle congestion management and increasingly oversee security. They “are essentially putting a computer in front of the computer,” continuing RDMA’s work of freeing up time on server CPUs, said Kagan.

The next role for tomorrow’s smart networks built on RDMA and RoCE is to accelerate a rising tide of AI applications.

Mellanox’s SHARP protocol already consolidates data from HPC apps distributed across dozens to thousands of servers. In the future, it’s expected to speed AI training by aggregating data from parameter servers, such as updated weights from deep-learning models. Panda’s Ohio State lab continues to support such work with RDMA libraries for AI and big-data analytics.
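The general idea of consolidating data inside the network can be sketched as a reduction tree. The Python below is a toy model under stated assumptions, not SHARP itself, whose details are not described here: the `reduce_in_tree` function, the `radix` parameter and the sample values are all hypothetical. Each simulated switch sums the vectors arriving from its children and forwards a single combined vector upstream.

```python
# Toy model only: in-network aggregation happens in switch hardware.
# reduce_in_tree, radix and the sample data are hypothetical illustrations.

def reduce_in_tree(vectors, radix):
    """Sum equal-length vectors level by level, `radix` inputs per switch.

    Returns the summed vector and the number of tree levels traversed.
    """
    if radix < 2:
        raise ValueError("radix must be at least 2")
    if not vectors:
        raise ValueError("need at least one vector")

    level = [list(v) for v in vectors]
    levels = 0
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), radix):
            group = level[i:i + radix]
            # Each simulated switch forwards one combined vector upstream.
            next_level.append([sum(column) for column in zip(*group)])
        level = next_level
        levels += 1
    return level[0], levels


# Eight servers each contribute a small vector of made-up weight updates.
updates = [[1, 2, 3] for _ in range(8)]
total, levels = reduce_in_tree(updates, radix=2)
print(total, levels)
```

In this sketch the top of the tree receives one vector per child rather than one per server, which is the reason aggregation in the network can ease traffic as the number of servers grows.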

“Today RDMA is an order of magnitude better and richer in its feature set for networking, storage and AI — scaling out to address the exponentially growing amount of data,” said Goldenberg.

Double Date: AI, RDMA Unite NVIDIA, Mellanox

The combination of AI and RDMA drew Mellanox and NVIDIA together into a $6.9 billion merger announced in March 2019. The deal closed on April 27, 2020.

NVIDIA first implemented RDMA in GPUDirect for its Kepler architecture GPUs and CUDA 5.0 software. Last year, it expanded the capability with GPUDirect Storage.

As supercomputers embraced Mellanox’s RDMA-powered InfiniBand networks, they also started adopting NVIDIA GPUs as accelerators. Today the combined companies power many supercomputers including Summit, named the world’s most powerful system in June 2018.

Similarities in data-intensive, performance-hungry HPC and AI tasks led the companies to collaborate on using InfiniBand and RoCE in NVIDIA’s DGX systems for training neural networks.

Now that Mellanox is part of NVIDIA, Kagan sees his career coming full circle.

“My first big design project as an architect was a vector processor, and it was the fastest microprocessor in the world in those days,” he said. “Now I’m with a company designing state-of-the-art vector processors, helping fuse processor and networking technologies together and driving computing to the next level — it’s very exciting.”

“Mellanox has been leading RDMA hardware delivery for nearly 20 years. It’s been a pleasure working with Michael and Dror throughout that time, and we look forward to what is coming next,” said Renato Recio, an IBM Fellow in networking who helped define the InfiniBand standard.