Most of us don’t think about it when we take the wheel, but the sheer variety of conditions and events we face on our daily drive is staggering.

To help manufacturers build self-driving cars able to handle these conditions, NVIDIA announced Project MagLev on Thursday at the Facebook @Scale Conference in San Jose, Calif. MagLev is an internally developed AI training and inference infrastructure architected with safety and scalability in mind.

We built our AI for autonomous driving, with deep neural networks running simultaneously to handle the full range of real-world conditions — vehicles and pedestrians, lighting at different times of day, sleet, glare, black ice, you name it.

Implementing the right algorithms to power this AI requires a tremendous amount of research and development. One of the most daunting challenges is verifying the correctness of deep neural networks across all conditions. And it’s not enough to do it once: you have to do so again and again — or “at scale” — to meet rigorous safety requirements.

This type of comprehensive training requires the deep neural networks to run without error across millions of miles of real driving data. And that’s just to match human performance. Factor in a full 12-camera platform equipped with lidar and radar sensors, and the entire training dataset for these millions of miles amounts to hundreds of petabytes.

That’s an enormous amount just to ensure human-level capability. And to be truly safe, self-driving systems must do better than human drivers.

MagLev: Hurtling Technology Forward

NVIDIA has dedicated time, talent and investment to AI development at the scale required for safe autonomous driving. This includes several years of work by hundreds of engineers and AI developers, along with a massive hardware and software infrastructure.

Much like the high-speed train after which it’s named, MagLev was created to hurtle technology forward at an unprecedented pace. We developed it to support all of the data processing necessary to train and validate industry-grade AI systems — including petabyte-scale testing, high-throughput data management and labeling, AI-based data selection to build the right datasets, traceability for safety, and end-to-end workflow automation.

Solving Bottlenecks

We designed MagLev to ease bottlenecks in the end-to-end industry-grade AI development workflow.

Every new model version we deploy to our self-driving car entails a journey through hundreds of petabytes of collected data, millions of labeled frames, dozens of dataset versions, and hundreds of experiments, each consuming days of training. MagLev uses automation to make this process more efficient. It stores all the gathered information and uses its growing knowledge base to make faster connections on new data, and provides full traceability between trained models and source data.

We built every component in MagLev with scale and flexibility in mind. This includes, for example, infrastructure to run hyper-parameter tuning, which is essential for exploring more model architectures and training techniques and finding the best combination. MagLev suggests experiments to run based on several exploration strategies, and can leverage results from past experiments.

All the information produced by the exploration jobs, including models and metrics, is tracked against corresponding hyper-parameter values in the knowledge base. This makes it easy to analyze the data, automatically or interactively.
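As a rough illustration of tracking exploration jobs against hyper-parameter values, here is a minimal Python sketch. It is not MagLev code: the `explore` and `best_trial` functions, the list used as a "knowledge base", and the toy objective are all assumptions made for this example, and random search stands in for whichever exploration strategies MagLev actually uses.

```python
import random

def explore(train_fn, space, n_trials, knowledge_base, seed=0):
    """Run random-search trials and log each one (hypothetical helper)."""
    rng = random.Random(seed)
    for _ in range(n_trials):
        # Sample one value per hyper-parameter from the search space.
        params = {name: rng.choice(values) for name, values in space.items()}
        # Record the metric next to the exact hyper-parameters that produced it.
        knowledge_base.append({"params": params, "metric": train_fn(params)})
    return knowledge_base

def best_trial(knowledge_base):
    """Return the logged trial with the highest metric."""
    return max(knowledge_base, key=lambda record: record["metric"])

def toy_train(params):
    """Toy stand-in for a training job; higher is better."""
    return (-abs(params["learning_rate"] - 0.01)
            - 0.001 * abs(params["batch_size"] - 64))

SPACE = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [32, 64, 128]}

# The list plays the role of a persistent store; results from earlier
# runs could be passed in here so that later analysis sees all of them.
records = explore(toy_train, SPACE, n_trials=20, knowledge_base=[])
best = best_trial(records)
print(best["params"], best["metric"])
```

Because every record keeps its hyper-parameters and metric together, the same list can be filtered or sorted later, either by a script or interactively.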

Another central aspect of MagLev is its ability to programmatically capture end-to-end workflows, including data preprocessing, selection, model training, testing and pruning. Programmatic workflows free scientists and researchers from having to constantly monitor the process, and they enable production engineers to seamlessly deploy models to the car. The workflows can be executed periodically using the most recent dataset, as well as the latest features and hyper-parameters from the machine learning algorithm developers.

By logging each model’s accuracy and performance, the team can continuously improve its production stack. Should a production model regress, the production engineer can trace back to the last functional model, along with its hyper-parameters and the dataset used.
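The kind of lookup this traceability enables might look like the following Python sketch. The `ModelRecord` fields, the accuracy threshold, and the sample history are assumptions invented for the example, not MagLev's real schema or data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class ModelRecord:
    """One logged model version (hypothetical schema)."""
    version: int
    accuracy: float
    hyper_params: Dict[str, float]
    dataset_version: str

def last_functional_model(history: List[ModelRecord],
                          min_accuracy: float) -> Optional[ModelRecord]:
    """Return the newest record whose accuracy meets the threshold."""
    for record in sorted(history, key=lambda r: r.version, reverse=True):
        if record.accuracy >= min_accuracy:
            return record
    return None  # no logged version meets the bar

# Made-up history in which version 3 regresses.
history = [
    ModelRecord(1, 0.91, {"learning_rate": 0.01}, "dataset-v12"),
    ModelRecord(2, 0.93, {"learning_rate": 0.005}, "dataset-v13"),
    ModelRecord(3, 0.85, {"learning_rate": 0.005}, "dataset-v14"),
]

good = last_functional_model(history, min_accuracy=0.90)
print(good.version, good.hyper_params, good.dataset_version)
```

Keeping the hyper-parameters and dataset version on the same record as the accuracy is what makes the rollback question answerable in one lookup.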

Another important challenge for self-driving cars is building the right training datasets so we can capture all the possible conditions for the AI system to operate under. A particularly effective way of doing so is active learning — using our current best AI models to classify new collected data, and tell us how well they perform.

We can then use this information to focus labeling on the data that was misclassified, rather than spending time on data the models already handle well. Active learning and similar methods are an efficient way to build better training datasets, faster. But they require the ability to perform inference at a massive scale. There, again, MagLev enables the team to apply pre-trained AI models at that level.
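One common way to pick data for labeling is uncertainty sampling, sketched below in Python. This is an illustrative assumption: the text does not say which selection criterion MagLev uses, and the function name, the frame IDs, and the probabilities are made up for the example.

```python
from typing import Dict, List

def select_for_labeling(predictions: Dict[str, List[float]],
                        budget: int) -> List[str]:
    """Pick the samples the current model is least confident about.

    predictions maps a sample ID to the model's class probabilities.
    Confidence is taken as the highest class probability; the lowest
    confidence samples are returned first, up to the labeling budget.
    """
    ranked = sorted(predictions, key=lambda sid: max(predictions[sid]))
    return ranked[:budget]

# Made-up model outputs for four newly collected frames.
predictions = {
    "frame_a": [0.98, 0.02],
    "frame_b": [0.55, 0.45],
    "frame_c": [0.70, 0.30],
    "frame_d": [0.51, 0.49],
}

to_label = select_for_labeling(predictions, budget=2)
print(to_label)  # ['frame_d', 'frame_b']
```

Producing the `predictions` table is the expensive part in practice: it means running inference over all newly collected data, which is why this approach depends on inference at massive scale.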

Revolutionary Inference Platform for Many Industries

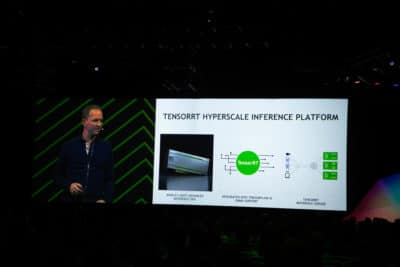

AI development isn’t just a core element of autonomous vehicles. It extends to every industry that relies on inferencing for automation. And it’s an ongoing effort that includes many of the innovations within the NVIDIA TensorRT Hyperscale Inference Platform, which was announced this week at GTC Japan.

The new NVIDIA TensorRT inference server provides a containerized, production-ready AI inference server for data center deployments. It maximizes utilization of GPU servers, supports all the top AI frameworks, and provides metrics for scalability with Docker and Kubernetes.

NVIDIA is also working with Kubeflow to make it easy to deploy GPU-accelerated inference across Kubernetes clusters. The combination of NVIDIA TensorRT inference server and Kubeflow makes data center production using AI inference repeatable and scalable.
