Thousands flocked to Munich this week for a major gathering — not Oktoberfest, but GTC Europe.

The conference, now in its third year, celebrates groundbreaking GPU-accelerated work across the region. Nearly 300 developers, startups and researchers took the stage to share their projects. Among them were several of Europe’s major science centers, spanning fields as diverse as particle physics, climate research and neuroscience.

Understanding the Universe

Técnico Lisboa, Portugal

Nuclear energy today is generated through nuclear fission: the process of splitting apart an atom’s nucleus, which creates both usable energy and radioactive waste. A potentially cleaner and more powerful alternative is nuclear fusion, the joining together of two nuclei.

But so far, scientists haven’t been able to sustain a nuclear fusion reaction long enough to harness its energy. Using deep learning algorithms and a Tesla P100 GPU, researchers at Portugal’s Técnico Lisboa university are studying the shape and behavior of the plasma inside a fusion reactor.

Gaining insight into the factors at play during nuclear fusion is essential for physicists. If researchers can predict when a reaction is about to be disrupted, they can take preventive action and sustain the reaction until enormous amounts of energy can be captured.

GPUs are essential for running these neural network inferences in real time during a fusion reaction. The deep learning models currently predict disruptions with 85 percent accuracy, matching state-of-the-art systems. By adding more probes that collect measurements inside the reactor and moving to a multi-GPU system, the researchers expect to reach even higher accuracy.

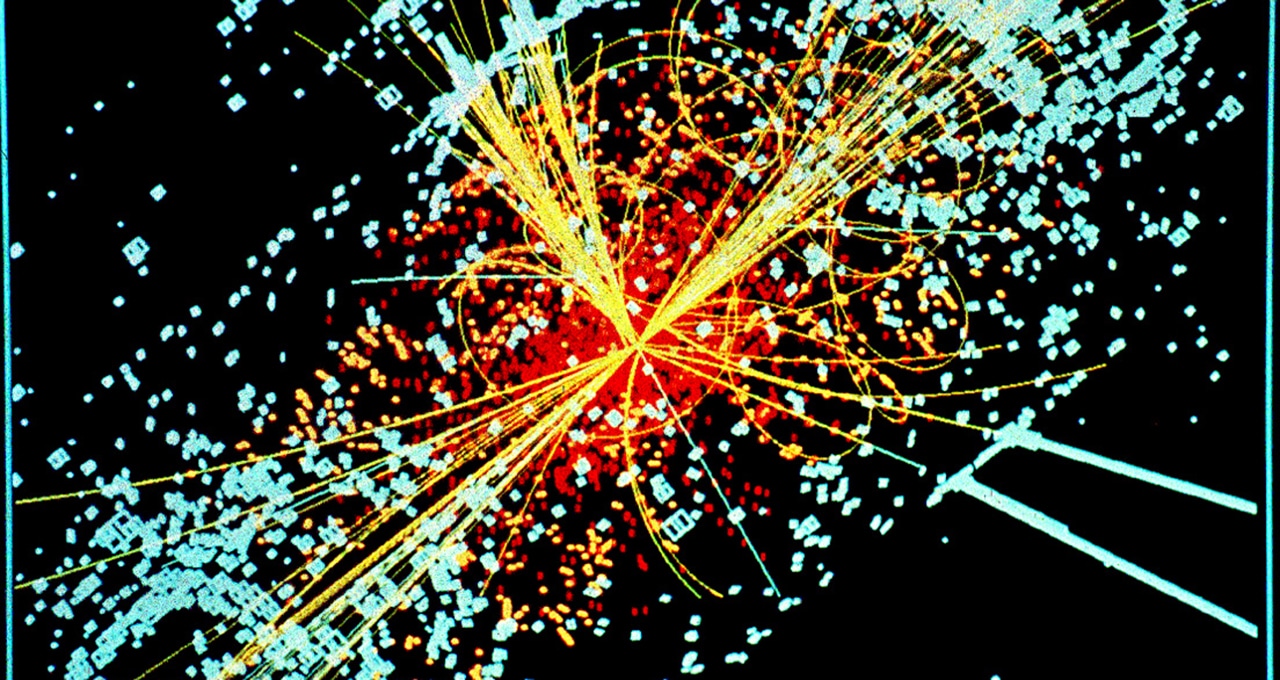

European Organization for Nuclear Research, Switzerland

Physicists have long been in search of a theory of everything, a mathematical model that works in every case, even at velocities approaching the speed of light. CERN, the European Organization for Nuclear Research, is a major center for this research.

Best known in recent years for the discovery of the Higgs boson, often called the “God particle,” the organization uses a machine called the Large Hadron Collider to create collisions between subatomic particles.

The researchers use software first to simulate the interactions they expect to see in a collision, and then to compare the real collision data with that simulation. These experiments require a system that can handle five terabytes of data per second.

“We are working to speed up our software and improve its accuracy, to face at best the challenges of the next Large Hadron Collider phase,” said CERN researcher Andrea Bocci. “We are exploring the use of GPUs to accelerate our algorithms and to integrate fast inference of deep learning models in our next-generation real-time data processing system.”

Using GPUs will allow CERN to perform more detailed and accurate analysis while running up to 100x faster.

Observatoire de Paris, France

Astronomers use large telescopes to get a closer look at the universe from Earth, scanning the skies for planets outside the solar system. But our planet’s atmosphere is turbulent, distorting the images collected by ground-based telescopes.

To counteract this distortion, astronomers use deformable mirrors that can change shape in real time.

A combination of high-performance linear algebra and machine learning algorithms determines how the deformable mirrors must change shape to correct for the atmospheric distortion. Because the distortion changes constantly, these algorithms must run extremely quickly: they have to predict what the distortion will be at the exact moment the mirrors take on their corrected shape.

Researchers at the Observatoire de Paris, in collaboration with the Subaru Telescope and KAUST ECRC, are using NVIDIA DGX-1 AI supercomputers to run the algorithms’ inference at the required multi-kHz frame rate.

Climate Modeling and Natural Disaster Response

Swiss National Supercomputing Center, Switzerland

A new report from the Intergovernmental Panel on Climate Change warns of dire effects if global temperature rises beyond 1.5 degrees Celsius above pre-industrial levels. To make these forecasts, researchers rely on climate models.

Climate models that look years into the future and cover large geographic areas are computationally intensive, so scientists often run them at low resolution, computing values over areas of many square kilometers at a time.

As a result, climate models have to approximate the impact that clouds, which are sometimes just a few hundred meters across, have on global temperature. Clouds are a key element of the weather system, so this approximation significantly affects a climate model’s results.

To better account for clouds, the Swiss National Supercomputing Center runs its climate model on Piz Daint, the fastest supercomputer in Europe, which is loaded with more than 5,000 Tesla P100 GPUs. Piz Daint allows the center’s researchers to run a global climate model at a resolution of one square kilometer, resolving the cloud problem and paving the way for accurate climate modeling to understand the effects of global warming.

German Research Centre for Artificial Intelligence, Germany

DFKI, the leading German AI research center, is using deep learning to estimate the damage caused by natural disasters like floods and wildfires. Its researchers use satellite imagery as well as multimedia posted on social networks to identify crises and gauge their scope.

“Time is critical for any assessment in a crisis situation,” said Andreas Dengel, site head at DFKI. “NVIDIA’s DGX allows us to analyze satellite images for areas that might not be accessible on the ground anymore in real time.”

These rapid insights could help first responders make faster and more efficient decisions about where and how to dispatch aid and resources in the aftermath of a natural disaster. DFKI is the first institution in Europe to adopt the NVIDIA DGX-2 AI system, equipped with 16 Tesla V100 Tensor Core GPUs.

A Closer Look at the Brain

Université de Reims, France

Neurodegenerative diseases like Alzheimer’s affect around 50 million people worldwide, but still lack a cure. Scientists are racing to discover effective drug treatments, aided by GPUs.

When studying the impact of a drug candidate on the brain, scientists take high-resolution brain scans. A single scan contains hundreds of slices, each holding 120 gigabytes of data. Scientists then spend hours or days annotating those scans to understand the drug molecule’s impact.

Researchers at the Université de Reims in France have created software that enables interactive analysis of hundreds of petabytes of brain scan data at around 50 frames per second. Scientists can smoothly zoom in and out of a scan and annotate key cells within the interface, enabling a much faster analysis workflow.

The team is using NVIDIA Quadro GPUs to accelerate their work.

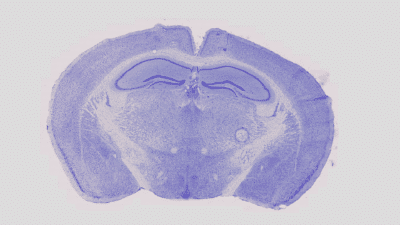

Jülich Research Center, Germany

The human brain holds about 100 billion neurons with 100 trillion connections. It’s no wonder so much about the brain remains a puzzle to scientists.

The Human Brain Project, created by the European Commission, aims to gather data about how the brain works. As part of this effort, researchers at the Jülich Research Center in Germany are building a 3D model of the brain by analyzing thousands of brain slices with deep learning. This research uses a supercomputer with NVIDIA Tesla P100 GPUs.

GPUs are built to crunch large amounts of data, power complex simulations and facilitate the use of deep learning — making them a fitting choice for a broad spectrum of scientific research. Equipped with this computing power, researchers are tackling the biggest challenges facing nearly every field of modern science.

Hear more about this scientific work in the GTC Europe keynote video.