Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series. With this series, we’re taking an engineering-focused look at individual autonomous vehicle challenges and how the NVIDIA DRIVE AV Software team is mastering them. Catch up on our earlier posts here.

Intersections are common roadway features, whether four-way stops in a neighborhood or traffic-light-filled exchanges on busy multi-lane thoroughfares.

Given the frequency, variety and risk associated with intersections — more than 50 percent of serious accidents in the U.S. happen at or near them — it’s critical that an autonomous vehicle be able to accurately navigate them.

Handling intersections autonomously presents a complex set of challenges for self-driving cars. These include stopping accurately at an intersection wait line or crosswalk, correctly processing and interpreting right-of-way rules in various scenarios, and determining and executing the correct path for a variety of maneuvers, such as proceeding straight through the intersection or making an unprotected turn.

Earlier in the DRIVE Labs series, we demonstrated how we detect intersections, traffic lights, and traffic signs with the WaitNet DNN, and how we classify traffic light state and traffic sign type with the LightNet and SignNet DNNs. In this episode, we go further to show how NVIDIA uses AI to perceive the variety of intersection structures that an autonomous vehicle could encounter on a daily drive.

Manual Mapmaking

Previous methods have relied on high-definition 3D semantic maps of an intersection and its surrounding area to understand the intersection structure and create paths to navigate safely.

Creating such a map requires extensive human labeling: annotators hand-encode every potentially relevant feature of the intersection structure, such as where the intersection entry/exit lines and dividers are located, where any traffic lights or signs are, and how many lanes there are in each direction. The more complex the intersection, the more manual annotation the map requires.

An important practical limitation of this approach is its lack of scalability. Every intersection in the world would need to be manually labeled before an autonomous vehicle could navigate it, which would make the required data collection and labeling impractically large and costly.

Another challenge lies in temporary conditions, such as construction zones. Because of the temporary nature of these scenarios, writing them into and out of a map can be highly complex.

In contrast, our approach is analogous to how humans drive. Humans use live perception rather than maps to understand intersection structure and navigate intersections.

A Structured Approach to Intersections

Our algorithm extends the capability of our WaitNet DNN to predict intersection structure as a collection of points we call “joints,” which are analogous to joints in a human body. Just as the actuation of human limbs is achieved through connections between our joints, in our approach, actuation of an autonomous vehicle through an intersection may be achieved by connecting the intersection structure joints into a path for the vehicle to follow.
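To make the joint representation concrete, the following Python sketch models predicted joints and the lines they form. It is an illustration under stated assumptions, not the WaitNet output format: the class names (`Joint`, `StructureLine`, `LineType`), the ego-frame coordinates in meters with x pointing forward and y to the left, and the choice to define each line by two endpoint joints are all hypothetical. The three line classes follow the color coding of Figure 1.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class LineType(Enum):
    """Hypothetical line classes, mirroring the colors in Figure 1."""
    EGO_ENTRY = "ego_entry"      # red: entry wait line for the ego car
    OTHER_ENTRY = "other_entry"  # yellow: entry wait line for other cars
    EXIT = "exit"                # green: intersection exit line


@dataclass(frozen=True)
class Joint:
    """One predicted key point in an assumed ego frame (meters, x forward, y left)."""
    x: float
    y: float


@dataclass(frozen=True)
class StructureLine:
    """A wait line or exit line formed by connecting two endpoint joints."""
    left: Joint
    right: Joint
    line_type: LineType

    def midpoint(self) -> Joint:
        """Center of the line, one possible anchor for a path through the intersection."""
        return Joint((self.left.x + self.right.x) / 2.0,
                     (self.left.y + self.right.y) / 2.0)


def lines_of_type(lines: List[StructureLine], line_type: LineType) -> List[StructureLine]:
    """Keep only the lines of one class, e.g. all candidate exit lines."""
    return [ln for ln in lines if ln.line_type is line_type]


# Usage: an ego entry line 10 m ahead spanning a 3.5 m lane, plus one exit line.
structure = [
    StructureLine(Joint(10.0, 1.75), Joint(10.0, -1.75), LineType.EGO_ENTRY),
    StructureLine(Joint(30.0, 1.75), Joint(30.0, -1.75), LineType.EXIT),
]
ego_entry = lines_of_type(structure, LineType.EGO_ENTRY)[0]
print(ego_entry.midpoint())  # Joint(x=10.0, y=0.0)
```

Keeping the line class on each line, rather than on each joint, lets a downstream consumer ask directly for "all exit lines" when building candidate paths.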

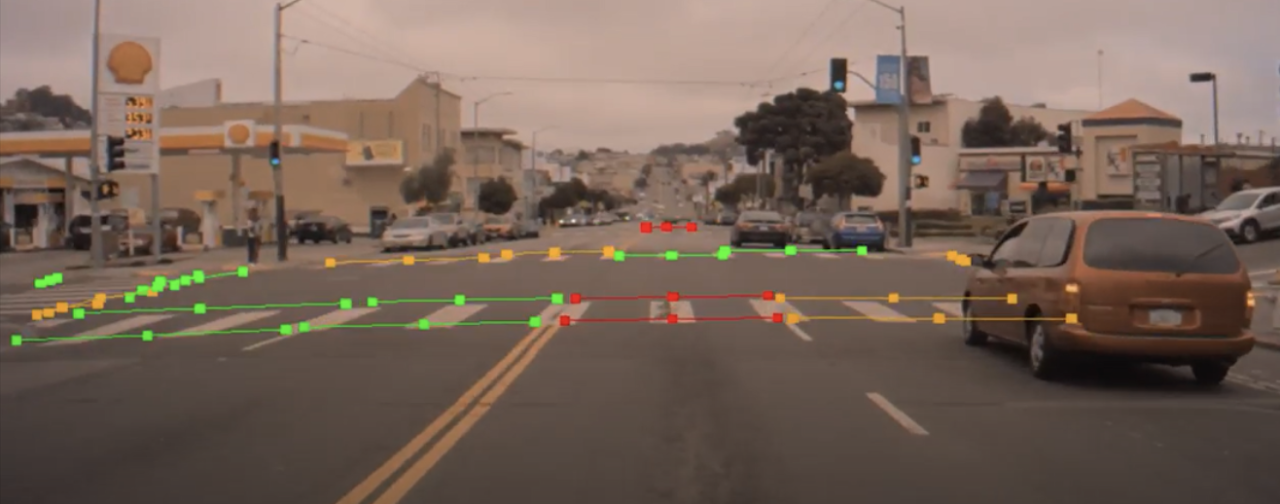

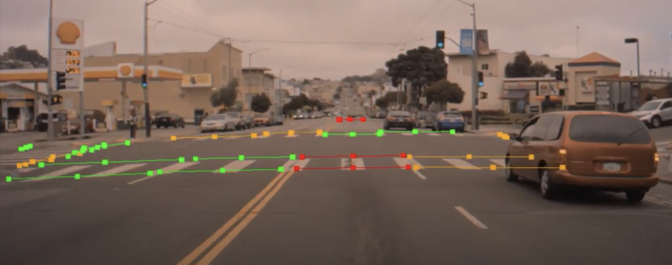

Figure 1. Prediction of intersection structure. Red = intersection entry wait line for ego car; Yellow = intersection entry wait line for other cars; Green = intersection exit line. In this figure, the green lines indicate all the possible ways the ego car could exit the intersection if arriving at it from the left-most lane – specifically, it could continue driving straight, take a left turn, or make a U-turn.
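Figure 1 pairs one ego entry line with several exit lines, each corresponding to a different maneuver. A minimal sketch of how such pairings might be labeled, assuming each line comes with a travel heading in radians measured counterclockwise from the ego car's forward axis, is to look at the signed heading change between entry and exit. The function names and the 45° tolerance are hypothetical, and this heuristic is an illustration rather than part of the DNN described here.

```python
import math


def heading_change_deg(entry_heading: float, exit_heading: float) -> float:
    """Signed heading change in degrees, wrapped to the interval (-180, 180]."""
    delta = math.degrees(exit_heading - entry_heading)
    delta = (delta + 180.0) % 360.0 - 180.0  # wrap into the range -180..180
    return 180.0 if delta == -180.0 else delta


def classify_maneuver(entry_heading: float, exit_heading: float,
                      tol_deg: float = 45.0) -> str:
    """Label an entry/exit pairing; a positive change is a left (counterclockwise) turn."""
    change = heading_change_deg(entry_heading, exit_heading)
    if abs(change) <= tol_deg:
        return "straight"
    if abs(change) >= 180.0 - tol_deg:
        return "u_turn"
    return "left" if change > 0 else "right"


# Usage: the three exits reachable from the leftmost lane in Figure 1.
exit_headings = {"ahead": 0.0, "cross street": math.pi / 2, "oncoming lanes": math.pi}
for name, heading in exit_headings.items():
    print(name, classify_maneuver(0.0, heading))
# ahead straight
# cross street left
# oncoming lanes u_turn
```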

Rather than segmenting the contours of an image, our DNN differentiates intersection entry and exit points for different lanes. Another key benefit of our approach is that the intersection structure prediction is robust to full and partial occlusions, and it can predict both painted and inferred intersection structure lines.

The intersection key points in Figure 1 can also be connected into paths for navigating the intersection. Connecting intersection entry and exit points yields predicted paths and trajectories that represent the ego car's movements.
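Connecting an entry point to an exit point can be done in many ways. One common and simple choice, used here purely as an illustration and not necessarily what DRIVE Software does, is a cubic Bézier curve whose inner control points lie along the lane heading at each end, so the path leaves the entry line and reaches the exit line tangent to the lane direction. The function name `bezier_path`, the sample count `n`, and the control-point `scale` of 0.4 are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def bezier_path(entry: Point, entry_heading: float, exit_pt: Point,
                exit_heading: float, n: int = 20, scale: float = 0.4) -> List[Point]:
    """Sample n + 1 points on a cubic Bezier curve from entry to exit.

    The inner control points sit `scale` times the straight-line distance
    along each heading, so the curve is tangent to the lane at both ends.
    """
    (x0, y0), (x3, y3) = entry, exit_pt
    d = math.hypot(x3 - x0, y3 - y0) * scale
    x1, y1 = x0 + d * math.cos(entry_heading), y0 + d * math.sin(entry_heading)
    x2, y2 = x3 - d * math.cos(exit_heading), y3 - d * math.sin(exit_heading)
    pts = []
    for i in range(n + 1):
        t = i / n
        u = 1.0 - t
        # Bernstein basis weights of a cubic Bezier curve
        b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
        pts.append((b0 * x0 + b1 * x1 + b2 * x2 + b3 * x3,
                    b0 * y0 + b1 * y1 + b2 * y2 + b3 * y3))
    return pts


# Usage: a left turn from an entry point heading forward to an exit heading left.
path = bezier_path((0.0, 0.0), 0.0, (15.0, 15.0), math.pi / 2)
print(path[0], path[-1])  # (0.0, 0.0) (15.0, 15.0)
```

A geometric path like this says nothing about speed; turning it into a trajectory would additionally require assigning timestamps or velocities along the sampled points.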

Our live perception approach enables scalability for handling various types of intersections without the burden of manually labeling each intersection individually. It can also be combined with map information, where high-quality data is available, to create diversity and redundancy for complex intersection handling.
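Where a map is available, one simple way to exploit this redundancy is to cross-check perceived lines against mapped ones and flag disagreements, which might, for example, point to a temporary change such as a construction zone. The sketch below is a hypothetical illustration, not NVIDIA's fusion method: it greedily matches line midpoints, given as (x, y) tuples in an assumed shared frame, within an assumed 1.5 m gate, and reports what each source contains that the other does not.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def cross_check(perceived: List[Point], mapped: List[Point], max_dist_m: float = 1.5):
    """Greedily pair each perceived midpoint with the nearest unused map midpoint.

    Returns (matches, perceived_only, map_only): matches holds
    (perceived_index, map_index) pairs; the other two lists hold indices
    that found no partner within max_dist_m.
    """
    unmatched_map = list(range(len(mapped)))
    matches, perceived_only = [], []
    for i, (px, py) in enumerate(perceived):
        best, best_dist = None, max_dist_m
        for j in unmatched_map:
            mx, my = mapped[j]
            dist = math.hypot(px - mx, py - my)
            if dist <= best_dist:
                best, best_dist = j, dist
        if best is None:
            perceived_only.append(i)  # seen live, but absent from the map
        else:
            matches.append((i, best))
            unmatched_map.remove(best)
    return matches, perceived_only, unmatched_map  # leftover map lines were not seen


# Usage: one exit line agrees with the map; the other pair disagrees.
perceived = [(20.0, 0.0), (10.0, 12.0)]
mapped = [(20.5, 0.0), (-5.0, 12.0)]
print(cross_check(perceived, mapped))  # ([(0, 0)], [1], [1])
```

Greedy matching depends on the order of the perceived lines; a globally optimal assignment (for example, the Hungarian algorithm) would be more robust, but the greedy version keeps the sketch short.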

Our DNN-based intersection structure perception capability will become available to developers in an upcoming DRIVE Software release as an additional head of our WaitNet DNN. To learn more about our DNN models, visit our DRIVE Perception page.