NVIDIA DGX systems have quickly become the go-to AI platform for telco, media and entertainment, public sector, healthcare, research organizations and more.

BMW, Lockheed Martin, Naver CLOVA, NTT PC Communications, Sony and Subaru are among the household names from around the world already building AI infrastructure with DGX A100 systems, which were announced in May, to tackle their most important opportunities.

This week, at the GPU Technology Conference, we’re announcing that the entire NVIDIA DGX ecosystem is expanding to simplify deployment, integration and scaling for enterprise IT and the data science teams they support.

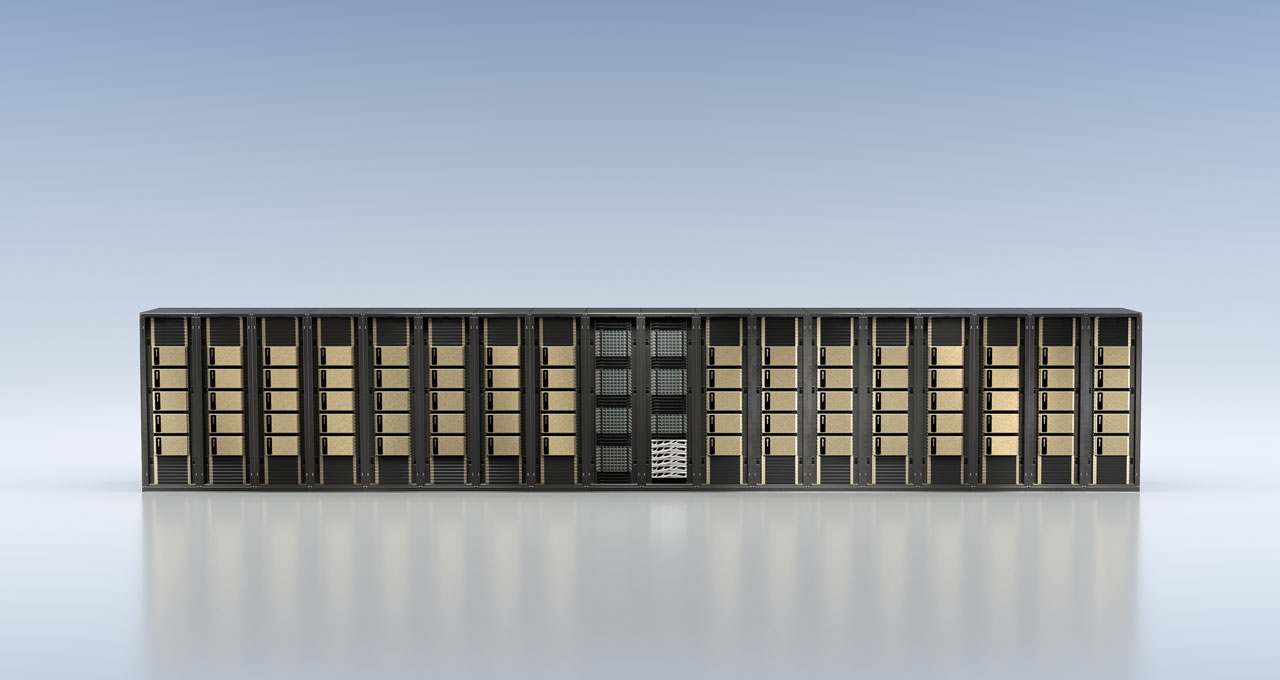

NVIDIA CEO Jensen Huang kicked things off in his GTC keynote by announcing the NVIDIA DGX SuperPOD Solution for Enterprise. This new product turns the NVIDIA DGX SuperPOD reference architecture into a turnkey offering that customers around the world can use to deploy AI at scale.

The Fastest Way to Get Started with AI

For organizations just beginning their AI journey, going from a great idea to a usable application can be a struggle. Choosing the right software, tools and platform can be intimidating if your team has never built AI applications before. Many just need a faster way to put the right components together, along with domain expertise from folks who have done it before.

The new NVIDIA AI Starter Kit includes everything needed to get started with AI, including an NVIDIA DGX A100 system, ready-to-use AI models and data science workflow software. It also includes consultative services from NVIDIA’s solution delivery partners, who know how to turn AI ideas into business applications. The kit can help organizations reap the benefits of AI sooner, at a fraction of the cost of doing it themselves.

Updates to the World’s Most Popular AI Reference Architectures

Not every organization needs the all-in-one simplicity and easy entry point of the NVIDIA AI Starter Kit or the power and scale of NVIDIA DGX SuperPOD. Many instead need a prescriptive approach to AI infrastructure sized for their business. For these customers, NVIDIA DGX POD reference architectures are ideal.

DGX POD lets enterprises pair DGX systems with storage from their preferred technology partner, eliminating the time-consuming design and testing otherwise needed to get maximum AI performance.

The updated portfolio, which now features NVIDIA DGX A100, includes new reference architectures from DDN, NetApp and Pure Storage. These join existing ones from Dell EMC and IBM.

Operationalizing AI Infrastructure Faster with DGX-Ready Software

Enterprises that want to scale the value of AI need a way to implement MLOps, which brings the rigor and methodologies of IT/DevOps to AI model development and deployment.

Through close collaboration with software vendors and a comprehensive certification process, the DGX-Ready Software program ensures that software used with DGX systems is proven to be enterprise-grade. This simplifies the deployment, management and scaling of AI infrastructure while enabling enterprises to adopt an MLOps-based workflow.

New certifications expand the DGX-Ready Software program in two key areas: cluster provisioning and management, and container orchestration platforms.

Bright Cluster Manager from Bright Computing, which provides cluster provisioning, management and monitoring, is now certified for use with DGX systems, making it easier for customers to deploy their DGX clusters.

Red Hat OpenShift is also now certified for DGX systems, providing an enterprise Kubernetes platform for DGX clusters. This gives customers a choice between standard open source Kubernetes and the enhanced offering from Red Hat.

Best of all, both the Bright Computing and Red Hat solutions take full advantage of the multi-instance GPU (MIG) feature of the NVIDIA A100 Tensor Core GPUs inside DGX A100. MIG can partition each A100 GPU into as many as seven isolated GPU instances, right-sizing compute resources so that multiple users and applications can share a system with optimal utilization. This enables enterprises to operationalize their own elastic AI infrastructure.

DGX-Ready Software partners also include Allegro AI, cnvrg.io, Core Scientific, Domino Data Lab, Iguazio and Paperspace, giving enterprises a wide variety of choices to implement MLOps and industrialize their AI development.

Certifications Simplify Operating System Updates

A crucial component of the NVIDIA AI platform is the operating system. We work closely with Canonical and Red Hat to optimize the operating system for the complete NVIDIA AI stack, from the underlying DGX hardware and NVIDIA Mellanox networking, through the CUDA-X software layer, to the NGC containers and DGX-Ready Software solutions. The result is a vertically integrated stack, tuned, tested and optimized for the best AI performance, that users can rely on.

DGX OS, the operating system that ships from the factory on DGX systems, is based on Ubuntu 18.04 LTS from Canonical. Today, we announced that DGX OS 5, which is based on Ubuntu 20.04 LTS, will soon be available for all DGX systems.

Customers can also choose to run Red Hat Enterprise Linux. For them, we provide the same tested and proven DGX software stack that is part of DGX OS, available for Red Hat Enterprise Linux and CentOS 7. We've also been working with Red Hat to certify RHEL 8 on all DGX systems, and it will be available later this quarter.

These updates give DGX users the opportunity to take advantage of new optimizations and features in these operating systems, while remaining fully supported by NVIDIA.

GTC Sessions on DGX Systems

To get the latest information on DGX systems in the enterprise, check out these can't-miss sessions at GTC:

- AI Infrastructure Trends for 2021 [A21322] – with Charlie Boyle, vice president and general manager of DGX Systems at NVIDIA

- Operationalizing AI Workflow in Today’s Changing World [A21329] – with John Barco, senior director of Product Management for DGX Systems at NVIDIA, and Michael Balint, senior product manager for the NVIDIA DGX Platform at NVIDIA

Add these sessions to your GTC schedule to learn more. Both are available with live Q&A in multiple time zones and as on-demand recordings afterward.

Watch NVIDIA CEO Jensen Huang recap all the news at GTC.

It’s not too late to get access to hundreds of live and on-demand talks at GTC. Register now through Oct. 9 using promo code CMB4KN to get 20 percent off.