Thousands of researchers from around the globe will gather this week for the flagship conference for all things robotics, the International Conference on Robotics and Automation (ICRA), where 14 papers from NVIDIA Research will delve into topics shaping the future of robotics and automation.

ICRA has become a renowned forum since its inception in 1984, and this year the hybrid event will include paper presentations both onsite, at the Xi’an International Convention and Exhibition Center in China, and online from May 30 to June 5.

Composed of more than 200 scientists worldwide, the NVIDIA Research team focuses on areas such as AI, robotics, computer vision, self-driving cars, and graphics. Several of the papers at ICRA will be presented by researchers and interns from the NVIDIA Robotics Research Lab in Seattle.

The team is led by Dieter Fox, senior director of robotics research at NVIDIA, professor at the University of Washington Paul G. Allen School of Computer Science & Engineering, and head of the UW Robotics and State Estimation Lab. Fox is the recipient of the 2020 RAS Pioneer Award presented at ICRA last year by the IEEE Robotics and Automation Society.

Here’s a preview of some of the NVIDIA research papers that will be presented at ICRA 2021.

Best Paper Finalist: Human-Robot Interaction

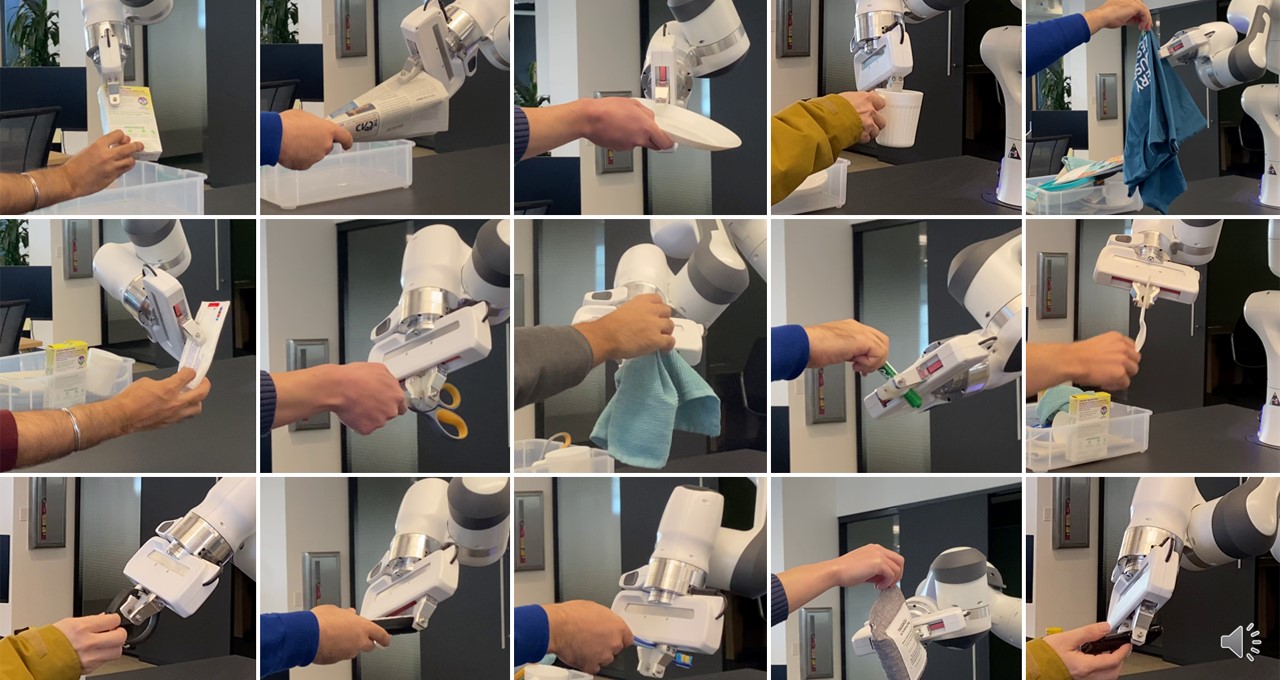

Human-robot object handovers have been intensively studied over the past decade. However, very few techniques and systems have addressed the challenge of handing over diverse objects with arbitrary appearance, size, shape and rigidity.

The paper “Reactive Human-to-Robot Handovers of Arbitrary Objects” by Wei Yang, Chris Paxton, Arsalan Mousavian, Yu-Wei Chao, Maya Cakmak and Dieter Fox is an ICRA 2021 finalist for the Best Paper Award in Human-Robot Interaction.

The paper presents a human-to-robot handover system that is generalizable to diverse unknown objects using accurate hand and object segmentation and temporally consistent grasp generation.

According to the researchers, “There are no hard constraints on how naive users might present an object to the robot, so long as they hold it in a way that is graspable by the robot. The system can adapt to user preferences, is reactive to a user’s movements and generates grasps and motions that are smooth and safe.”

One of the most interesting areas of robotics development is collaborative robots, or cobots, which can be deployed in areas where robots have not been used thus far. Traditionally, robots on factory floors posed safety risks and were deemed too dangerous to work alongside humans, so these machines were typically caged in isolated environments.

Enter cobots, which are designed to work in close proximity with humans but have faced several challenges, such as limited capabilities and an inability to think for themselves.

Results of this paper and other NVIDIA research will bring intelligence to cobots and help ensure safety in the factories of the future, while maintaining and optimizing productivity. This includes training a cobot to perceive the environment around it and adapt accordingly: reducing its speed, adjusting its force or strength, detecting changing working conditions, or even shutting down safely before it interferes with a human in its proximity.
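As a rough illustration of the "reduce its speed" behavior, the sketch below caps a robot's speed according to the distance to the nearest person. It is a minimal example under stated assumptions, not the method from the paper: the function name `scaled_speed` and every threshold value are hypothetical and chosen only for readability.

```python
def scaled_speed(distance_m, max_speed=1.0, stop_distance=0.3, slow_distance=1.5):
    """Return a speed limit in m/s given the distance to the nearest human.

    All thresholds are hypothetical values chosen for illustration.
    """
    if distance_m <= stop_distance:
        # Too close: stop entirely.
        return 0.0
    if distance_m >= slow_distance:
        # Nobody nearby: full speed is allowed.
        return max_speed
    # In between: ramp the limit linearly from 0 up to max_speed.
    fraction = (distance_m - stop_distance) / (slow_distance - stop_distance)
    return max_speed * fraction


for d in (0.2, 0.9, 2.0):
    print(f"human at {d:.1f} m -> speed limit {scaled_speed(d):.2f} m/s")
```

A real system would take the distance estimate from its perception stack and would typically combine a limit like this with force limits and a certified safety controller.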

Simulation, Digital Twins and Reinforcement Learning

Robots are increasingly being deployed in diverse fields, in step with advances in physics-based simulation research and its impact on real-world applications. Simulation technology like NVIDIA Omniverse will be used for every aspect of automation: from designing and developing a mechanical robot, to training the robot in navigation and behavior, to deploying a “digital twin” in which the robot is simulated and tested in an accurate and photorealistic environment before being implemented in the real world.

Many of these concepts are presented in the following papers:

Yashraj Narang, Balakumar Sundaralingam, Miles Macklin, Arsalan Mousavian, Dieter Fox, “Sim-to-Real for Robotic Tactile Sensing via Physics-Based Simulation and Learned Latent Projections”

Yifeng Zhu, Jonathan Tremblay, Stan Birchfield, Yuke Zhu, “Hierarchical Planning for Long-Horizon Manipulation with Geometric and Symbolic Scene Graphs”

Martin Sundermeyer, Arsalan Mousavian, Rudolph Triebel, Dieter Fox, “Contact-GraspNet: Efficient 6-DoF Grasp Generation in Cluttered Scenes”

Fahad Islam, Chris Paxton, Clemens Eppner, Bryan Peele, Maxim Likhachev, Dieter Fox, “Alternative Paths Planner (APP) for Provably Fixed-Time Manipulation Planning in Semi-Structured Environments”

Clemens Eppner, Arsalan Mousavian, Dieter Fox, “ACRONYM: A Large-Scale Grasp Dataset Based on Simulation”

Michael Danielczuk, Arsalan Mousavian, Clemens Eppner, Dieter Fox, “Object Rearrangement Using Learned Implicit Collision Functions”

Nathan Ratliff, Karl Van Wyk, Mandy Xie, Anqi Li, Muhammad Asif Rana, “Generalized Nonlinear and Finsler Geometry for Robotics”

Guanya Shi, Yifeng Zhu, Jonathan Tremblay, Stan Birchfield, Fabio Ramos, Animashree Anandkumar, Yuke Zhu, “Fast Uncertainty Quantification for Deep Object Pose Estimation”

Weiming Zhi, Tin Lai, Lionel Ott, Fabio Ramos, “Anticipatory Navigation in Crowds by Probabilistic Prediction of Pedestrian Future Movements”

Xinlei Pan, Animesh Garg, Anima Anandkumar, Yuke Zhu, “Emergent Hand Morphology and Control from Optimizing Robust Grasps of Diverse Objects”

Roberto Martín-Martín, Arthur Allshire, Charles Lin, Shawn Manuel, Silvio Savarese, Animesh Garg, “LASER: Learning a Latent Action Space for Efficient Reinforcement Learning”

Homanga Bharadhwaj, Animesh Garg, Florian Shkurti, “LEAF: Latent Exploration Along the Frontier”

Zhaoming Xie, Xingye Da, Michiel van de Panne, Buck Babich, Animesh Garg, “Dynamics Randomization Revisited: A Case Study for Quadrupedal Locomotion”

NVIDIA is investigating how AI along with physics-based and photorealistic simulation can be used to train manipulators in virtual environments and then deploy them in the real world.

The NVIDIA Isaac Sim simulation engine provides the essential features for building virtual robotic worlds and experiments. Beyond creating better photorealistic environments, it supports synthetic data generation and domain randomization for training robots with reinforcement learning. Isaac Sim supports navigation and manipulation applications through Isaac SDK and ROS, with RGB-D, lidar and IMU sensors, domain randomization, ground truth labeling, segmentation, and bounding boxes.
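Domain randomization can be pictured as drawing a fresh set of scene parameters for every training episode, so a policy never sees exactly the same world twice. The sketch below shows the idea in plain Python. It does not use the Isaac Sim API: the `SceneParams` fields, the `RANGES` table and the `sample_scene` helper are hypothetical names, and the numeric ranges are made up for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical per-episode scene settings."""
    friction: float
    object_mass_kg: float
    light_intensity: float
    camera_noise_std: float


# Illustrative (low, high) bounds for each randomized parameter.
RANGES = {
    "friction": (0.4, 1.2),
    "object_mass_kg": (0.05, 2.0),
    "light_intensity": (0.3, 1.5),
    "camera_noise_std": (0.0, 0.05),
}


def sample_scene(rng):
    """Draw one randomized scene by sampling each parameter uniformly."""
    values = {name: rng.uniform(low, high) for name, (low, high) in RANGES.items()}
    return SceneParams(**values)


rng = random.Random(0)  # seeded so runs are repeatable
scenes = [sample_scene(rng) for _ in range(3)]
for scene in scenes:
    print(scene)
```

In a simulator, each sampled `SceneParams` would be applied to the scene before an episode starts. Uniform sampling is the simplest choice, and other distributions can be substituted per parameter.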

The NVIDIA Isaac Gym training platform for physics-based reinforcement learning enables full, end-to-end physics and learning on the GPU. Most previous work on applying reinforcement learning to robotics has involved clusters of thousands of CPUs. It’s now possible to train some of these policies on a single GPU in a fraction of the time.
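One way to see why a single GPU can stand in for a large CPU cluster is that thousands of environments can be stored as arrays and advanced together with one batched operation. The sketch below illustrates that pattern with NumPy on the CPU as a stand-in. It is not the Isaac Gym API: the toy point-mass dynamics, the `step` function and the fixed feedback controller (used here in place of a learned policy) are all assumptions made for the example.

```python
import numpy as np


def step(pos, vel, action, dt=0.02):
    """Advance every environment by one time step (semi-implicit Euler)."""
    vel = vel + action * dt   # action is an acceleration on a unit point mass
    pos = pos + vel * dt
    reward = -np.abs(pos)     # being closer to the origin earns more reward
    return pos, vel, reward


num_envs = 4096
rng = np.random.default_rng(0)
pos = rng.uniform(-1.0, 1.0, size=num_envs)  # one entry per environment
vel = np.zeros(num_envs)

for _ in range(200):
    # A fixed feedback controller stands in for a learned policy.
    action = -4.0 * pos - 2.0 * vel
    pos, vel, reward = step(pos, vel, action)

mean_reward = reward.mean()
print(f"{num_envs} environments, mean reward after 200 steps: {mean_reward:.4f}")
```

Each call to `step` updates all 4,096 environments at once. Keeping the state, the policy and the physics in arrays on one device avoids copying data between a simulator and a learner at every step.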

Attend these paper presentations and visit the NVIDIA virtual exhibit booth at ICRA 2021 to learn how these technologies are revolutionizing robotics and automation.