Tackling one of the largest computing challenges of this lifetime requires larger than life computing.

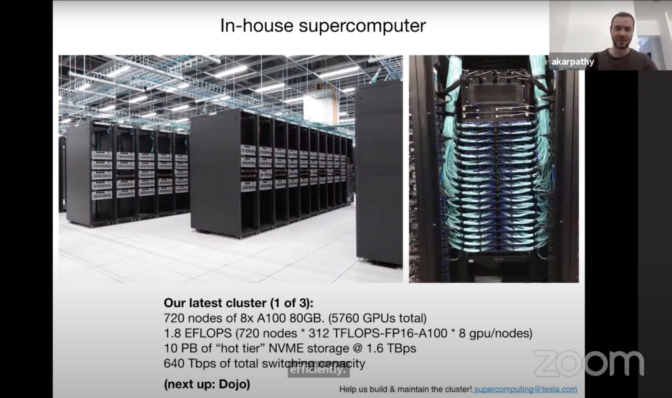

At CVPR this week, Andrej Karpathy, senior director of AI at Tesla, unveiled the in-house supercomputer the automaker is using to train deep neural networks for Autopilot and self-driving capabilities. The cluster uses 720 nodes of 8x NVIDIA A100 Tensor Core GPUs (5,760 GPUs total) to achieve an industry-leading 1.8 exaflops of performance.

“This is a really incredible supercomputer,” Karpathy said. “I actually believe that in terms of flops, this is roughly the No. 5 supercomputer in the world.”

With unprecedented levels of compute for the automotive industry at the center of its development cycle, Tesla is making it possible for autonomous vehicle engineers to do their life’s work efficiently and at the cutting edge.

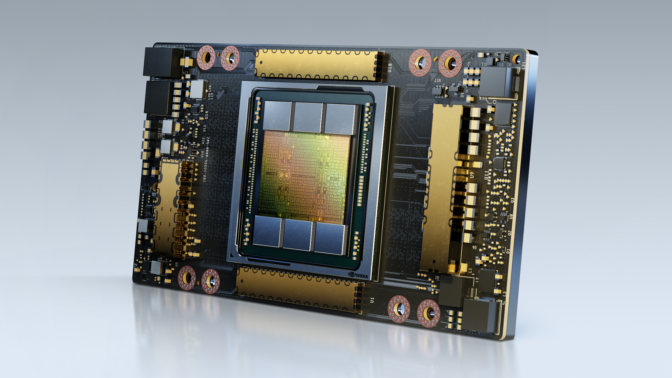

NVIDIA A100 GPUs deliver acceleration at every scale to power the world’s highest-performing data centers. Powered by the NVIDIA Ampere Architecture, the A100 GPU provides up to 20x higher performance over the prior generation and can be partitioned into seven GPU instances to dynamically adjust to shifting demands.

The GPU cluster is part of Tesla’s vertically integrated autonomous driving approach, which uses more than 1 million cars already driving on the road to refine and build new features for continuous improvement.

From the Car to the Data Center

Tesla’s cyclical development begins in the car. A deep neural network running in “shadow mode” quietly perceives and makes predictions while the car is driving without actually controlling the vehicle.

These predictions are recorded, and any mistakes or misidentifications are logged. Tesla engineers then use these instances to create a training dataset of difficult and diverse scenarios to refine the DNN.

The result is a collection of roughly 1 million 10-second clips recorded at 36 frames per second, totaling a whopping 1.5 petabytes of data. The DNN is then run through these scenarios in the data center over and over until it operates without a mistake. Finally, it’s sent back to the vehicle and begins the process again.

Karpathy said training a DNN in this manner and on such a large amount of data requires “a huge amount of compute,” which led Tesla to build and deploy the current generation supercomputer with high-performance A100 GPUs.

Continuous Iteration

In addition to comprehensive training, Tesla’s supercomputer gives autonomous vehicle engineers the performance needed to experiment and iterate in the development process.

Karpathy said the current DNN structure the automaker is deploying allows a team of 20 engineers to work on a single network at once, isolating different features for parallel development.

These DNNs can then be run through training datasets at speeds faster than what has been previously possible for rapid iteration.

“Computer vision is the bread and butter of what we do and enables Autopilot. For that to work, you need to train a massive neural network and experiment a lot,” Karpathy said. “That’s why we’ve invested a lot into the compute.”