Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series. With this series, we’re taking an engineering-focused look at individual autonomous vehicle challenges and how the NVIDIA DRIVE AV Software team is mastering them. Catch up on our earlier posts here.

Understanding speed limit signs may seem like a straightforward task, but it quickly becomes more complex when different restrictions apply to different lanes (for example, at a highway exit) or when driving in a new country.

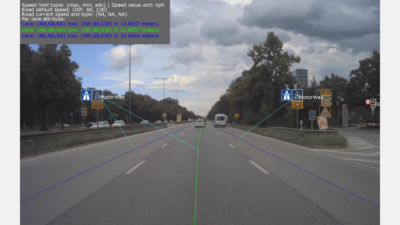

This episode of DRIVE Labs shows how AI-based live perception can help autonomous vehicles better understand the complexities of speed limit signs, using both explicit and implicit cues.

Speed limit signs can be much more nuanced than they might first appear. For example, when driving through a school zone, the posted limit is only in effect at certain times of day.

Some speed limits are conveyed by electronic variable message signs, which may show limits that apply only to certain lanes, only under certain conditions, or differently under different conditions.

And some signs, such as “entrance to motorway” signs in Germany, convey speed limits implicitly: the driver has to infer the limit from the underlying local rules and regulations rather than read an explicit number on the sign.

Additionally, visually similar or even identical speed limit signs can differ in semantic meaning, and supplementary signs and text, when present, can modify or even change that meaning.

Conventional Speed Assist System Challenges

Despite this complexity, a speed assist system (SAS) in an autonomous vehicle must be able to accurately detect and interpret signs across widely diverse driving environments. In advanced driver assistance systems, SAS capabilities are critical in correctly informing, and even correcting, the human driver.

In autonomous driving applications, SAS capabilities become critical inputs to planning and control software, ensuring the vehicle travels at a legal and safe speed.

Conventional SAS relies heavily on a navigation map or a high-definition map that contains detailed information about nearby signs, as well as their semantic meaning.

However, because of limits on map accuracy and on how accurately the vehicle can be localized to that map, legacy approaches may register a sign well after the vehicle has passed it. The vehicle may therefore travel at an incorrect speed until the sign is registered.

Additionally, the map may be outdated or may not correctly associate different signs to the lanes to which they apply.

SAS Going Live

In contrast to legacy approaches, the NVIDIA DRIVE SAS leverages AI-based live perception through a range of deep neural networks (DNNs) that detect and interpret implicit, explicit and variable message signs.

Specifically, the NVIDIA WaitNet DNN detects the sign, the SignNet DNN classifies the sign type and the PathNet DNN provides the path perception information.

As a result, all the signals needed to understand speed limit signs and to establish their relevance to the different driving lanes on the road (a process known as sign-to-path association) come from live perception, without requiring prior information from a map.
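To make sign-to-path association more concrete, here is a minimal Python sketch. It is not the DRIVE SAS implementation. The `ClassifiedSign` and `PerceivedPath` types, their fields, and the nearest-path heuristic are all assumptions made for illustration. They stand in for the outputs of sign detection, sign classification and path perception.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ClassifiedSign:
    """Hypothetical stand-in for a detected and classified sign."""
    sign_class: str            # e.g. "speed_limit_60"
    speed_kph: Optional[int]   # None when the sign carries no explicit number
    lateral_m: float           # lateral offset from the ego vehicle, in metres


@dataclass
class PerceivedPath:
    """Hypothetical stand-in for one drivable path from path perception."""
    path_id: str
    center_m: float            # lateral offset of the path centre, in metres


def associate_signs_to_paths(
    signs: List[ClassifiedSign], paths: List[PerceivedPath]
) -> Dict[str, int]:
    """Toy heuristic: attach each explicit sign to the laterally nearest path."""
    limits: Dict[str, int] = {}
    if not paths:
        return limits
    for sign in signs:
        if sign.speed_kph is None:
            continue  # implicit signs need rule-based interpretation instead
        nearest = min(paths, key=lambda p: abs(p.center_m - sign.lateral_m))
        current = limits.get(nearest.path_id)
        # If several signs land on one path, keep the most restrictive limit.
        if current is None or sign.speed_kph < current:
            limits[nearest.path_id] = sign.speed_kph
    return limits


paths = [PerceivedPath("ego", 0.0), PerceivedPath("exit", 3.5)]
signs = [
    ClassifiedSign("speed_limit_100", 100, -4.0),
    ClassifiedSign("speed_limit_60", 60, 6.0),
    ClassifiedSign("motorway_entrance", None, 5.0),
]
limits = associate_signs_to_paths(signs, paths)
print(limits)
```

In this toy scene, the sign next to the exit lane ends up associated with the exit path and the other sign with the ego path. A production system would use much richer geometry and semantics than a single lateral offset.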

Another advantage of this approach is flexibility. For example, if implicit speed limit signs happen to change in a given region or country, our SAS readily responds through a simple change in the underlying sign-to-path association logic.

For systems relying on a pre-annotated map, the new rule would instead need to be re-annotated everywhere in the map to perform the correct update.
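One way to picture this flexibility is to treat implicit sign rules as a small lookup table that the association logic consults. The sketch below is an assumption about how such logic could be organized, not a description of the DRIVE software. The region names, sign classes and speed values are illustrative placeholders, not real regulations.

```python
from typing import Dict, Optional, Tuple

# (region, implicit sign class) -> speed limit in km/h, or None for "no fixed limit".
# Every entry here is a made-up placeholder, not an actual traffic rule.
ImplicitRules = Dict[Tuple[str, str], Optional[int]]

RULES: ImplicitRules = {
    ("region_a", "motorway_entrance"): None,
    ("region_a", "built_up_area_entrance"): 50,
    ("region_b", "motorway_entrance"): 120,
}


def resolve_implicit_limit(
    rules: ImplicitRules, region: str, sign_class: str
) -> Optional[int]:
    """Look up the limit implied by a sign class in a given region."""
    key = (region, sign_class)
    if key not in rules:
        raise KeyError(f"no implicit rule for {sign_class!r} in {region!r}")
    return rules[key]


# A regulation change becomes a one-entry update, with no map re-annotation.
UPDATED_RULES: ImplicitRules = {**RULES, ("region_b", "motorway_entrance"): 110}

before = resolve_implicit_limit(RULES, "region_b", "motorway_entrance")
after = resolve_implicit_limit(UPDATED_RULES, "region_b", "motorway_entrance")
print(before, after)
```

The point of the sketch is the shape of the change: one rule entry is edited once, and every matching sign seen by live perception is interpreted under the new rule from then on.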

To further increase robustness, both speed sign information and sign-to-path relevance information provided by our live perception SAS can be fused with information from a map. By incorporating a diversity of information inputs, SAS coverage can be enhanced for a wide range of real-world scenarios.
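As a rough illustration of such fusion, the following Python sketch combines a live-perception estimate with a map-based one. The `SpeedLimitEstimate` type, the confidence scores and the selection policy are hypothetical. The post does not describe how DRIVE SAS actually fuses the two sources.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpeedLimitEstimate:
    """Hypothetical speed limit estimate for one path."""
    speed_kph: int
    confidence: float  # assumed score in [0, 1]
    source: str        # "live", "map" or "live+map"


def fuse_speed_limits(
    live: Optional[SpeedLimitEstimate],
    map_based: Optional[SpeedLimitEstimate],
    min_confidence: float = 0.5,
) -> Optional[SpeedLimitEstimate]:
    """Assumed policy: drop weak estimates, merge agreement, else trust the stronger one."""
    candidates = [
        e for e in (live, map_based)
        if e is not None and e.confidence >= min_confidence
    ]
    if not candidates:
        return None
    if len(candidates) == 2 and candidates[0].speed_kph == candidates[1].speed_kph:
        # Both sources agree, so report a combined estimate.
        best = max(c.confidence for c in candidates)
        return SpeedLimitEstimate(candidates[0].speed_kph, best, "live+map")
    # On disagreement, or with a single source, take the higher confidence.
    # Ties go to live perception because it is listed first.
    return max(candidates, key=lambda e: e.confidence)


live = SpeedLimitEstimate(80, 0.9, "live")
from_map = SpeedLimitEstimate(100, 0.7, "map")
fused = fuse_speed_limits(live, from_map)
print(fused)
```

Here the live estimate wins because it is more confident than the possibly outdated map entry. When no sign is visible to the cameras, the same function falls back to the map, which is one way diverse inputs can extend coverage.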