Robots require huge training leaps in AI to become versatile general helpers, says robotics pioneer Pieter Abbeel, who laid out his vision for the neural network brains such robots will need.

Home robots are electronically and mechanically possible today, Abbeel said, but they lack the AI know-how to navigate a wide variety of situations.

“It’s just our software, our AI, that hasn’t been good enough to make this a reality in our home,” he said.

Abbeel spoke Wednesday at NTECH 2021, NVIDIA's annual internal engineering conference, where his talk drew hundreds of online viewers.

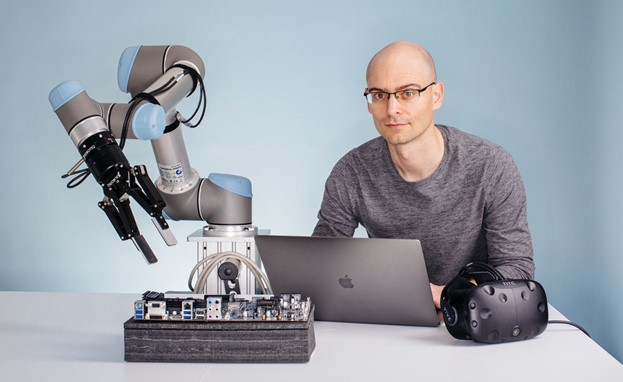

Abbeel is a professor of electrical engineering and computer science at the University of California, Berkeley. He is also director of the university’s Robot Learning Lab and co-director of the Berkeley Artificial Intelligence Research (BAIR) Lab. With all of that going on, the soft-spoken Belgian engineer also hosts The Robot Brains Podcast.

While juggling his roles, Abbeel also spent nearly two years at OpenAI, the nonprofit formed in 2015 by tech luminaries including Ilya Sutskever — who is speaking at GTC 2021 — to develop and release artificial general intelligence aimed at benefiting humanity.

He left OpenAI in 2017 to start Covariant, a developer of AI for robotic automation of warehouses and factories that has attracted $147 million in funding. Before that he co-founded AI-assisted grading startup Gradescope, which was acquired in 2018.

NVIDIA CEO Jensen Huang spoke with Abbeel after the robotics talk, calling him “one of the brightest minds on the planet.”

Talking Big Robot Brains

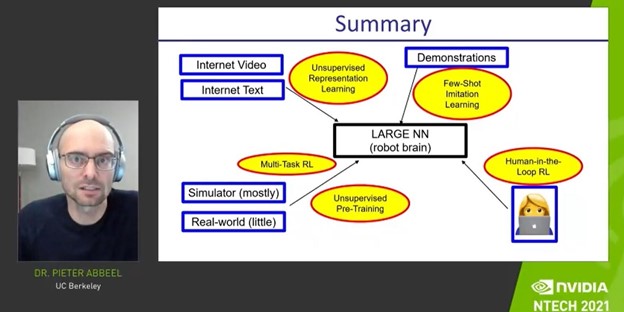

Abbeel’s talk laid out what he called a “nice starting point” toward more capable robots: ones with big brains that can learn new tasks on their own. He cited the work of many AI researchers, such as Geoffrey Hinton, as stepping stones toward that goal.

Crediting deep learning pioneer Yann LeCun, he said that training a large neural network on internet videos to make predictions was a promising idea for robots. Learning to predict would force the network to learn things about how the world works, offering a big piece of the robot AI puzzle.

From videos, robots might learn entire behaviors, sequences of things to do, which images alone cannot capture. “Video prediction is possibly the biggest missing piece to have a good pretrained neural network that can be quickly used for other things for real-world robotic tasks,” he said.

Training on text could also be important for robots, he suggested. A robot might learn an entire story describing a sequence of events, such as taking a car to an auto mechanic at the owner's request and handling the whole process, from driving the car there to talking with the mechanic to arrange a pickup time.

Train ‘Mostly in Simulation’

Most robot training should be done in simulation, said Abbeel, because it’s impractical to train robots slowly in the real world, bumping into things as they learn by trial and error.

Only a small amount of real-world training would then be needed to make sure robots can also perform their tasks outside the simulator. “Millions of scenes become very hard to collect in the real world,” he said.

Thousands, even millions, of simulations can be used to train neural networks and get results in a shorter period of time, he said.

“It’s more affordable, and you can scale it up and parallelize many simulation runs,” he said.

Bridging Research to Commercial

In a post-presentation talk with Huang, Abbeel discussed bridging university research to commercial applications.

“You both do groundbreaking research in the university, and you are also practicing that art and the industry of robotics — you’re putting it to work in a real company (Covariant),” Huang said. “Solving the 99 percent problem is essential.”

Abbeel agreed that it’s important to know with confidence that your customers will be happy with your network’s performance and with what you’re selling them. That confidence, he said, comes largely from knowing the network you’ve developed, running a lot of tests and gathering the statistics.

At Covariant, he said, the Covariant Brain is currently used for pick-and-place tasks in warehouses, where hitting end-to-end performance targets is what matters.

“If you don’t make your customers happy, what’s the point in selling to them?” said Abbeel.