AI can design chips no human could, said Bill Dally in a virtual keynote today at the Design Automation Conference (DAC), one of the world’s largest gatherings of semiconductor engineers.

The chief scientist of NVIDIA discussed research in accelerated computing and machine learning that’s making chips smaller, faster and better.

“Our work shows you can achieve orders-of-magnitude improvements in chip design using GPU-accelerated systems. And when you add in AI, you can get superhuman results — better circuits than anyone could design by hand,” said Dally, who leads a team of more than 200 people at NVIDIA Research.

Gains Span Circuits, Boards

Dally cited improvements that GPUs and AI deliver across the workflow of chip and board design. His examples ranged from the layout and placement of circuits to faster ways to render images of printed-circuit boards.

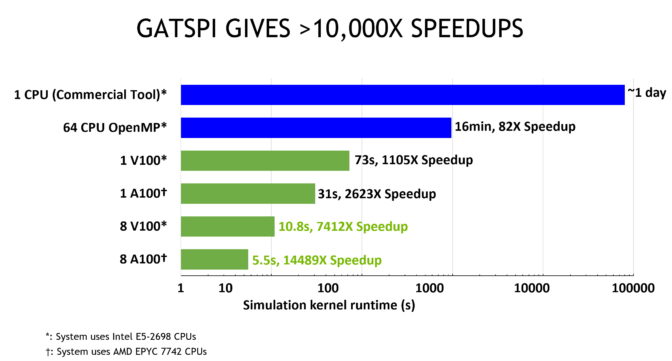

In one particularly stunning example of speedups with GPUs, he pointed to research NVIDIA plans to present at a conference next year. The GATSPI tool accelerates detailed simulations of a chip’s logic by more than 1,000x compared to commercial tools running on CPUs today.

A paper at DAC this year describes how NVIDIA collaborated with Cadence Design Systems, a leading provider of EDA software, to render board-level designs using graphics techniques on NVIDIA GPUs. Their work boosted performance up to 20x for interactive operations on Cadence’s Allegro X platform, announced in June.

“Engineers used to wait for programs to respond after every edit or pan across an image — it was an awkward, frustrating way to work. But with GPUs, the flow becomes truly interactive,” said Dally, who chaired Stanford University’s computer science department before joining NVIDIA in 2009.

Reinforcement Learning Delivers Rewards

A technique called NVCell, described in a DAC session this week, uses reinforcement learning to automate the job of laying out a standard cell, a basic building block of a chip.

The approach reduces work that typically takes months for a 10-person team to an automated process that runs in a couple of days. “That lets the engineering team focus on a few challenging cells that need to be designed by hand,” said Dally.

In another example of the power of reinforcement learning, NVIDIA researchers will describe at DAC a new tool called PrefixRL. It discovers how to design a circuit such as an adder, encoder or custom design.

PrefixRL treats the design process like a game in which the high score goes to the circuit with the smallest area and power consumption.

By letting AI optimize the process, engineers get a device that’s more efficient than what’s possible with today’s tools. It’s a good example of how AI can deliver designs no human could.
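To make the game framing concrete, here is a minimal sketch of how a "high score" for a circuit might be computed. It is not PrefixRL's actual reward: the `score` function, the `area_weight` parameter and the candidate numbers are all hypothetical, chosen only to show how area and power can be folded into one value that a learning agent tries to maximize.

```python
def score(area, power, area_weight=0.5):
    """Hypothetical reward: higher is better, so smaller area and power win.

    area_weight sets the trade-off between the two costs (an assumption,
    not a detail taken from the article).
    """
    return -(area_weight * area + (1.0 - area_weight) * power)


# Made-up candidate circuits as (area, power) pairs, for illustration only.
candidates = {
    "design_a": (120.0, 3.0),
    "design_b": (100.0, 3.5),
    "design_c": (110.0, 2.0),
}

# The "winner" of the game is the candidate with the highest score.
best = max(candidates, key=lambda name: score(*candidates[name]))
print(best)
```

Changing `area_weight` shifts which design wins, which is one way a tool of this kind could offer engineers a range of area and power trade-offs.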

Leveraging AI’s Tool Box

NVIDIA worked with the University of Texas at Austin on a research project called DREAMPlace that made novel use of PyTorch, a popular software framework for deep learning. The project adapted the framework, normally used to optimize weights in a neural network, to find the best spot to place a block with 10 million cells inside a larger chip.

It’s a routine job that currently takes nearly four hours using today’s state-of-the-art techniques on CPUs. Running on NVIDIA Volta architecture GPUs in a data center or cloud service, it can finish in as little as five minutes, a 43x speedup.
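The idea of treating placement like neural-network training can be illustrated with a toy example. The sketch below is not DREAMPlace and does not use PyTorch or a GPU. It is plain Python that assumes a one-dimensional layout, a simple squared-wirelength cost and a hypothetical `place` function. It shows only the core idea: cell positions play the role of weights and are nudged by gradient descent until the wires between them are as short as possible.

```python
def place(num_nodes, nets, fixed, steps=500, lr=0.1):
    """Toy 1-D placement by gradient descent on squared wirelength.

    nets  : list of (a, b) node-id pairs joined by a wire
    fixed : dict mapping node id -> position for nodes that cannot move
    All names and defaults here are illustrative assumptions.
    """
    pos = {i: 0.0 for i in range(num_nodes)}
    pos.update(fixed)
    for _ in range(steps):
        grad = {i: 0.0 for i in pos}
        for a, b in nets:
            d = pos[a] - pos[b]
            grad[a] += 2 * d  # derivative of (pos[a] - pos[b])**2 w.r.t. pos[a]
            grad[b] -= 2 * d  # and w.r.t. pos[b]
        for i in pos:
            if i not in fixed:  # only movable cells are updated
                pos[i] -= lr * grad[i]
    return pos


# Two movable cells (1 and 2) chained between fixed pads at 0.0 and 3.0.
result = place(4, nets=[(0, 1), (1, 2), (2, 3)], fixed={0: 0.0, 3: 3.0})
print(result)
```

In this example the two movable cells settle evenly between the pads. A real placer also has to keep cells from overlapping and handle millions of them, which is where GPU acceleration pays off.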

Getting a Clearer Image Faster

To make a chip, engineers use a lithography machine to project their design onto a semiconductor wafer. To make sure the chip performs as expected, they must accurately simulate that image, a critical challenge.

NVIDIA researchers created a neural network that understands the optical process. It simulated the image on the wafer 80x faster and with higher accuracy, using a 20x smaller model than current state-of-the-art machine learning methods.

It’s one more example of how the combination of accelerated computing and AI is helping engineers design better chips faster.

An AI-Powered Future

“NVIDIA used some of these techniques to make our existing GPUs, and we plan to use more of them in the future,” Dally said.

“I expect tomorrow’s standard EDA tools will be AI-powered to make the chip designer’s job easier and their results better than ever,” he said.

To watch Dally’s keynote, register for a complimentary pass to DAC using the code ILOVEDAC, then view the talk here.