The increased frequency and severity of extreme weather and climate events could take a million lives and cost $1.7 trillion annually by 2050, according to the Munich Reinsurance Company.

This underscores a critical need for accurate weather forecasting, especially with the rise in severe weather occurrences such as blizzards, hurricanes and heatwaves. AI and accelerated computing are poised to help.

More than 180 weather modeling centers employ robust high-performance computing (HPC) infrastructure to crunch traditional numerical weather prediction (NWP) models. These include the European Centre for Medium-Range Weather Forecasts (ECMWF), which operates on 983,040 CPU cores, and the U.K. Met Office’s supercomputer, which uses more than 1.5 million CPU cores and consumes 2.7 megawatts of power.

Rethinking HPC Design

The global push toward energy efficiency is prompting a rethink of HPC system design. Accelerated computing, which harnesses the power of GPUs, offers a promising, energy-efficient alternative that speeds up computations.

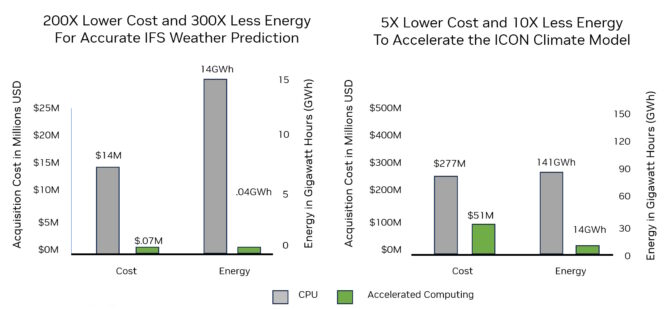

NVIDIA GPUs have made a significant impact on globally adopted weather models, including those from ECMWF, the Max Planck Institute for Meteorology, the German Meteorological Service and the National Center for Atmospheric Research.

GPUs enhance performance up to 24x, improve energy efficiency, and reduce costs and space requirements.

“To make reliable weather predictions and climate projections a reality within power budget limits, we rely on algorithmic improvements and hardware where NVIDIA GPUs are an alternative to CPUs,” said Oliver Fuhrer, head of numerical prediction at MeteoSwiss, the Swiss national office of meteorology and climatology.

AI Model Boosts Speed, Efficiency

NVIDIA’s AI-based weather-prediction model FourCastNet offers competitive accuracy with orders of magnitude greater speed and energy efficiency compared with traditional NWP methods. The latest version of FourCastNet, based on the Spherical Fourier Neural Operator, rapidly produces months-long forecasts and allows for the generation of large ensembles — or groups of simulations with slight variations in starting conditions — for high-confidence, extreme weather risk predictions weeks in advance.

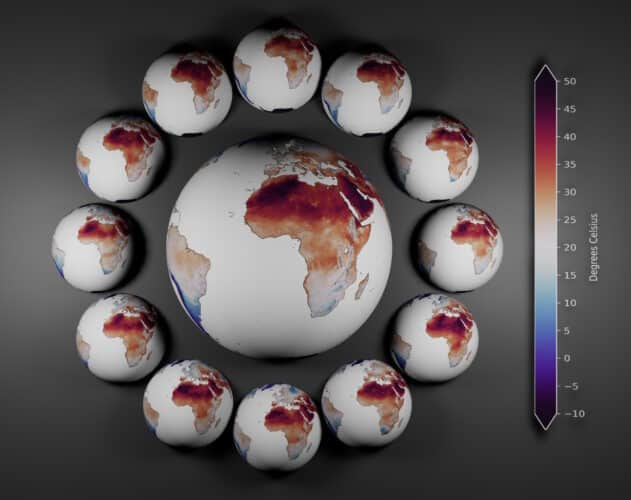

For example, based on historical weather and climate data from ECMWF, FourCastNet ensembles accurately predicted increased risk of extreme temperatures during Africa’s hottest recorded heatwave, in July 2018.

Using NVIDIA GPUs, FourCastNet quickly and accurately generated 1,000 ensemble members. It enables ensembles 20x larger and run times 1,000x faster than traditional NWP models. A dozen of the FourCastNet members accurately predicted the high temperatures in Algeria three weeks before they occurred. In contrast, smaller 50-member NWP ensembles missed the heatwave altogether.
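
To see why ensemble size matters for rare events, here is a minimal back-of-the-envelope sketch in Python. It is not part of FourCastNet or any NWP system. It assumes, purely for illustration, that every ensemble member independently shows the extreme event with the same probability, estimated from the figures above (a dozen hits out of 1,000 members). Real ensemble members are not independent, and the helper name `prob_no_member_hits` is hypothetical.

```python
# Illustrative assumption: each member independently shows the extreme
# event with the same probability, estimated from the figures in the text.
MEMBERS_TOTAL = 1000   # FourCastNet ensemble size cited above
MEMBERS_HIT = 12       # "a dozen" members that predicted the high temperatures

p_hit = MEMBERS_HIT / MEMBERS_TOTAL


def prob_no_member_hits(ensemble_size: int, p: float) -> float:
    """Chance that no member of an ensemble of this size shows the event."""
    return (1.0 - p) ** ensemble_size


if __name__ == "__main__":
    for size in (50, 1000):
        expected_hits = size * p_hit
        p_miss = prob_no_member_hits(size, p_hit)
        print(f"{size:>5} members: expected hits = {expected_hits:.1f}, "
              f"P(no member shows the event) = {p_miss:.4f}")
```

Under these simplified assumptions, a 50-member ensemble would contain less than one "hit" on average and would miss the event entirely a bit more than half the time, while a 1,000-member ensemble would almost never miss it.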

This marked the first time the FourCastNet team predicted a high-impact event weeks in advance, demonstrating AI’s potential for reliable weather forecasting with lower energy consumption than traditional weather models.

FourCastNet uses the latest AI advances to bridge AI and physics for groundbreaking results. It’s about 45,000x faster than traditional NWP models. And once trained, FourCastNet consumes 12,000x less energy to produce a forecast than the Europe-based Integrated Forecasting System, a gold-standard NWP model.

“NVIDIA FourCastNet opens the door to the use of AI for a wide variety of applications that will change the shape of the NWP enterprise,” said Bjorn Stevens, director of the Max Planck Institute for Meteorology.

Expanding What’s Possible

In an NVIDIA GTC session, Stevens described what’s possible now with the ICON climate research tool. The Levante supercomputer, using 3,200 CPUs, can simulate 10 days of weather in 24 hours, Stevens said. In contrast, the JUWELS Booster supercomputer, using 1,200 NVIDIA A100 Tensor Core GPUs, can run 50 simulated days in the same amount of time.

Scientists are looking to study climate effects 300 years into the future, which means systems need to be 20x faster, Stevens added. Embracing faster technology like NVIDIA H100 Tensor Core GPUs and simpler code could get us there, he said.
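
The throughput figures Stevens quoted can be turned into rough wall-clock estimates. The Python sketch below is only arithmetic on the numbers above. It assumes a 365-day simulated year and, because the text does not say which system the 20x target is measured against, it assumes the JUWELS Booster figure as the baseline. The function name `wall_clock_days` is hypothetical.

```python
# Figures quoted from the GTC session described above.
LEVANTE_SIM_DAYS_PER_DAY = 10   # simulated days per 24 hours, 3,200 CPUs
JUWELS_SIM_DAYS_PER_DAY = 50    # simulated days per 24 hours, 1,200 A100 GPUs

# Assumptions for illustration only (not stated in the source).
DAYS_PER_YEAR = 365             # simplified calendar
TARGET_YEARS = 300              # climate horizon mentioned above
TARGET_SPEEDUP = 20             # assumed to be relative to JUWELS Booster


def wall_clock_days(sim_days_per_day: float, years: int = TARGET_YEARS) -> float:
    """Wall-clock days needed to simulate `years` at a given throughput."""
    return years * DAYS_PER_YEAR / sim_days_per_day


if __name__ == "__main__":
    speedup = JUWELS_SIM_DAYS_PER_DAY / LEVANTE_SIM_DAYS_PER_DAY
    print(f"JUWELS Booster vs. Levante throughput: {speedup:.0f}x")

    scenarios = {
        "Levante": LEVANTE_SIM_DAYS_PER_DAY,
        "JUWELS Booster": JUWELS_SIM_DAYS_PER_DAY,
        "20x target": JUWELS_SIM_DAYS_PER_DAY * TARGET_SPEEDUP,
    }
    for name, rate in scenarios.items():
        days = wall_clock_days(rate)
        print(f"{name:>15}: {days:,.1f} wall-clock days "
              f"({days / DAYS_PER_YEAR:.1f} years)")
```

These are system-level comparisons rather than per-chip ones, since the two machines use different numbers of processors. Under the stated assumptions, a 300-year run would take about six years of wall-clock time at the JUWELS Booster rate and a few months at the 20x target.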

Researchers now face the challenge of striking the optimal balance between physical modeling and machine learning to produce faster, more accurate climate forecasts. An ECMWF blog published last month describes this hybrid approach, which relies on machine learning for initial predictions and physical models for data generation, verification and system refinement.

Such an integration — delivered with accelerated computing — could lead to significant advancements in weather forecasting and climate science, ushering in a new era of efficient, reliable and energy-conscious predictions.

Learn more about how accelerated computing and AI boost climate science through these resources:

- NVIDIA one-pager: Predicting the Weather With AI

- NVIDIA one-pager: Faster Weather Predictions

- NVIDIA resource page: Sustainable Computing

- NVIDIA technical blog: AI for a Scientific Computing Revolution

Discover how AI is powering the future of clean energy.