AI is fueling a new industrial revolution — one driven by AI factories.

Unlike traditional data centers, AI factories do more than store and process data — they manufacture intelligence at scale, transforming raw data into real-time insights. For enterprises and countries around the world, this means dramatically faster time to value — turning AI from a long-term investment into an immediate driver of competitive advantage. Companies that invest in purpose-built AI factories today will lead in innovation, efficiency and market differentiation tomorrow.

While a traditional data center typically handles diverse workloads and is built for general-purpose computing, AI factories are optimized to create value from AI. They orchestrate the entire AI lifecycle — from data ingestion to training, fine-tuning and, most critically, high-volume inference.

For AI factories, intelligence isn't a byproduct but the primary product. This intelligence is measured by AI token throughput: the real-time predictions that drive decisions, automation and entirely new services.
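To make the throughput metric concrete, here is a minimal sketch that computes aggregate output tokens per second from a log of completed inference requests. The `CompletedRequest` record, the `token_throughput` helper and the sample log values are hypothetical illustrations. Real serving stacks report this metric through their own tooling.

```python
from dataclasses import dataclass


@dataclass
class CompletedRequest:
    """One finished inference request (hypothetical log record)."""
    output_tokens: int  # tokens generated for this request
    start_s: float      # wall-clock start time, in seconds
    end_s: float        # wall-clock end time, in seconds


def token_throughput(requests: list[CompletedRequest]) -> float:
    """Aggregate output tokens per second across the observed time window."""
    if not requests:
        return 0.0
    window_start = min(r.start_s for r in requests)
    window_end = max(r.end_s for r in requests)
    elapsed = window_end - window_start
    if elapsed <= 0:
        raise ValueError("observation window must have positive length")
    total_tokens = sum(r.output_tokens for r in requests)
    return total_tokens / elapsed


# Hypothetical log: three overlapping requests served over a 4-second window.
log = [
    CompletedRequest(output_tokens=500, start_s=0.0, end_s=2.0),
    CompletedRequest(output_tokens=300, start_s=0.5, end_s=1.5),
    CompletedRequest(output_tokens=1200, start_s=1.0, end_s=4.0),
]
print(f"{token_throughput(log):.1f} tokens/s")  # 2,000 tokens over 4 s -> 500.0 tokens/s
```

Measuring over the whole window, rather than averaging per-request rates, credits the system for serving overlapping requests concurrently, which is what an AI factory is built to do.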

While traditional data centers aren't disappearing anytime soon, whether they evolve into AI factories or connect to them depends on each enterprise's business model.

Regardless of how enterprises choose to adapt, AI factories powered by NVIDIA are already manufacturing intelligence at scale, transforming how AI is built, refined and deployed.

The Scaling Laws Driving Compute Demand

Over the past few years, AI has revolved around training large models. But with the recent proliferation of AI reasoning models, inference has become the main driver of AI economics. Three key scaling laws highlight why:

- Pretraining scaling: Larger datasets and more model parameters yield predictable intelligence gains, but reaching this scale demands significant investment in skilled experts, data curation and compute resources. Over the last five years, pretraining scaling has increased compute requirements by 50 million times. However, once a model is trained, it significantly lowers the barrier for others to build on top of it.

- Post-training scaling: Fine-tuning AI models for specific real-world applications requires 30x more compute during AI inference than pretraining. As organizations adapt existing models for their unique needs, cumulative demand for AI infrastructure skyrockets.

- Test-time scaling (aka long thinking): Advanced AI applications such as agentic AI or physical AI require iterative reasoning, where models explore multiple possible responses before selecting the best one. This consumes up to 100x more compute than traditional inference.
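A quick back-of-the-envelope calculation puts these multipliers in perspective. The 50-million-fold and 100x figures come from the list above. Treating the five-year growth as a constant yearly rate is a simplifying assumption, and the baseline request cost is an arbitrary unit chosen for illustration.

```python
# Figures quoted in the scaling-law list; everything else is derived arithmetic.
PRETRAIN_GROWTH_5Y = 50_000_000  # pretraining compute growth over five years
YEARS = 5
TEST_TIME_MULTIPLIER = 100       # "up to 100x" compared with traditional inference


def implied_annual_growth(total_growth: float, years: int) -> float:
    """Constant per-year factor that compounds to `total_growth` over `years`."""
    return total_growth ** (1 / years)


annual = implied_annual_growth(PRETRAIN_GROWTH_5Y, YEARS)
print(f"Implied pretraining compute growth: ~{annual:.1f}x per year")

# Hypothetical baseline: compute for one traditional inference request, in arbitrary units.
baseline_request_compute = 1.0
reasoning_request_compute = baseline_request_compute * TEST_TIME_MULTIPLIER
print(f"One long-thinking request costs about {reasoning_request_compute:.0f} traditional requests")
```

A 50-million-fold increase over five years works out to roughly 35x per year, and a single long-thinking request can consume as much compute as about 100 conventional ones.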

Traditional data centers aren’t designed for this new era of AI. AI factories are purpose-built to optimize and sustain this massive demand for compute, providing an ideal path forward for AI inference and deployment.

Reshaping Industries and Economies With Tokens

Across the world, governments and enterprises are racing to build AI factories to spur economic growth, innovation and efficiency.

The European High Performance Computing Joint Undertaking recently announced plans to build seven AI factories in collaboration with 17 European Union member nations.

This follows a wave of AI factory investments worldwide, as enterprises and countries accelerate AI-driven economic growth across every industry and region:

- India: Yotta Data Services has partnered with NVIDIA to launch the Shakti Cloud Platform, helping democratize access to advanced GPU resources. By integrating NVIDIA AI Enterprise software with open-source tools, Yotta provides a seamless environment for AI development and deployment.

- Japan: Leading cloud providers — including GMO Internet, Highreso, KDDI, Rutilea and SAKURA internet — are building NVIDIA-powered AI infrastructure to transform industries such as robotics, automotive, healthcare and telecom.

- Norway: Telenor has launched an NVIDIA-powered AI factory to accelerate AI adoption across the Nordic region, focusing on workforce upskilling and sustainability.

These initiatives underscore a global reality: AI factories are quickly becoming essential national infrastructure, on par with telecommunications and energy.

Inside an AI Factory: Where Intelligence Is Manufactured

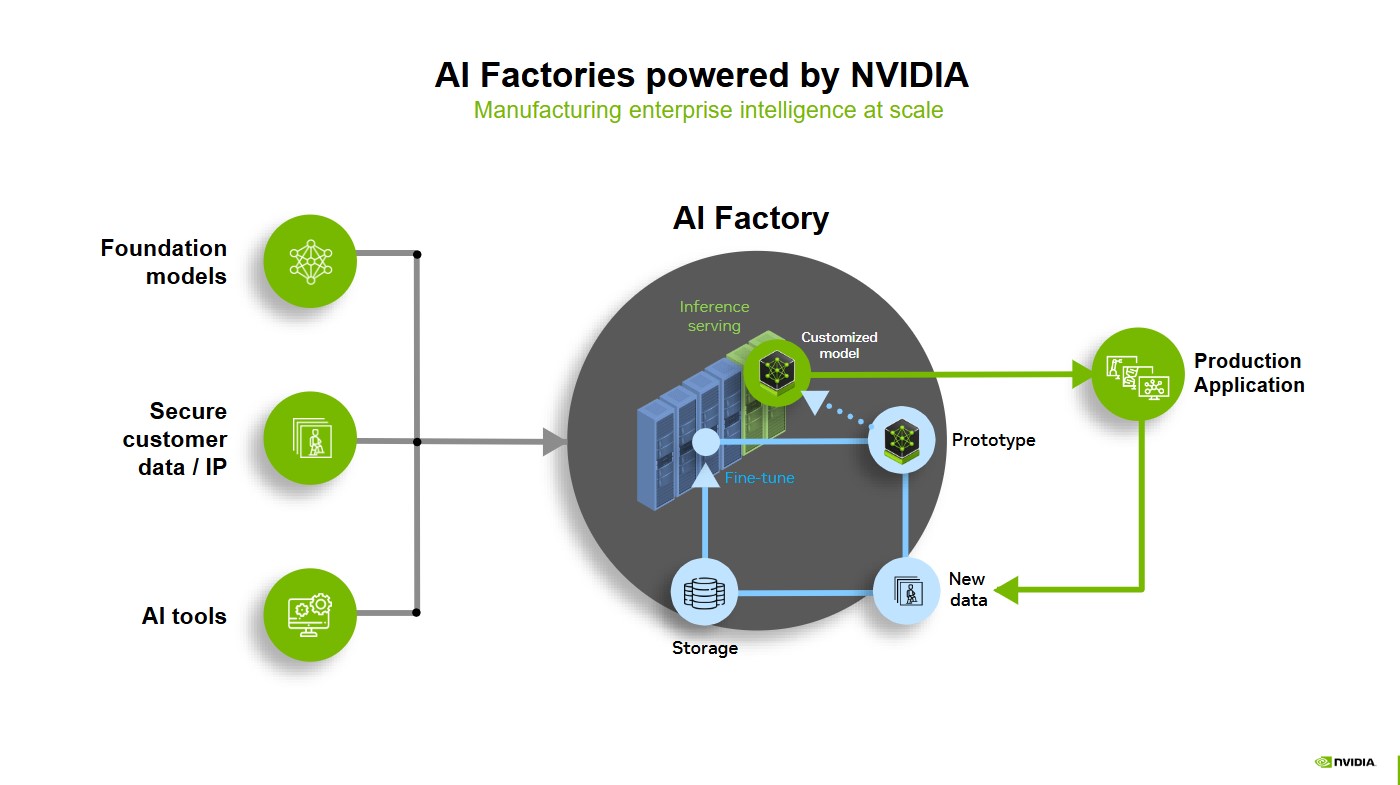

Foundation models, secure customer data and AI tools provide the raw materials for fueling AI factories, where inference serving, prototyping and fine-tuning shape powerful, customized models ready to be put into production.

As these models are deployed into real-world applications, they continuously learn from new data, which is stored, refined and fed back into the system using a data flywheel. This cycle of optimization ensures AI remains adaptive, efficient and always improving — driving enterprise intelligence at an unprecedented scale.
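The data flywheel described above can be sketched as a simple loop: log production interactions, keep those with good feedback, and trigger fine-tuning once enough curated data accumulates. This is a toy model under stated assumptions. The `DataFlywheel` class, the feedback score, the quality threshold and the batch size are all hypothetical, and the fine-tuning step is a stub rather than any NVIDIA product API.

```python
from dataclasses import dataclass, field


@dataclass
class DataFlywheel:
    """Toy data flywheel: log interactions, keep the useful ones, retrain in batches.

    All thresholds and the retrain step are hypothetical placeholders.
    """
    quality_threshold: float = 0.8  # minimum feedback score to keep a sample
    retrain_batch_size: int = 3     # curated samples needed to trigger fine-tuning
    curated: list[tuple[str, str]] = field(default_factory=list)
    model_version: int = 0

    def record(self, prompt: str, response: str, feedback_score: float) -> None:
        """Store a production interaction if its feedback clears the bar."""
        if feedback_score >= self.quality_threshold:
            self.curated.append((prompt, response))
        if len(self.curated) >= self.retrain_batch_size:
            self._fine_tune()

    def _fine_tune(self) -> None:
        """Stand-in for a fine-tuning job: consume the curated batch, bump the version."""
        # A real system would launch a training job on self.curated before clearing it.
        self.curated = []
        self.model_version += 1


wheel = DataFlywheel()
for i, score in enumerate([0.9, 0.4, 0.95, 0.85, 0.7]):
    wheel.record(f"prompt {i}", f"response {i}", score)
print(wheel.model_version, len(wheel.curated))  # three samples pass -> one retrain, empty buffer
```

Batching the retraining, rather than updating on every interaction, reflects the refine-then-feed-back cycle in the text: data is stored and curated first, then fed back into the model.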

An AI Factory Advantage With Full-Stack NVIDIA AI

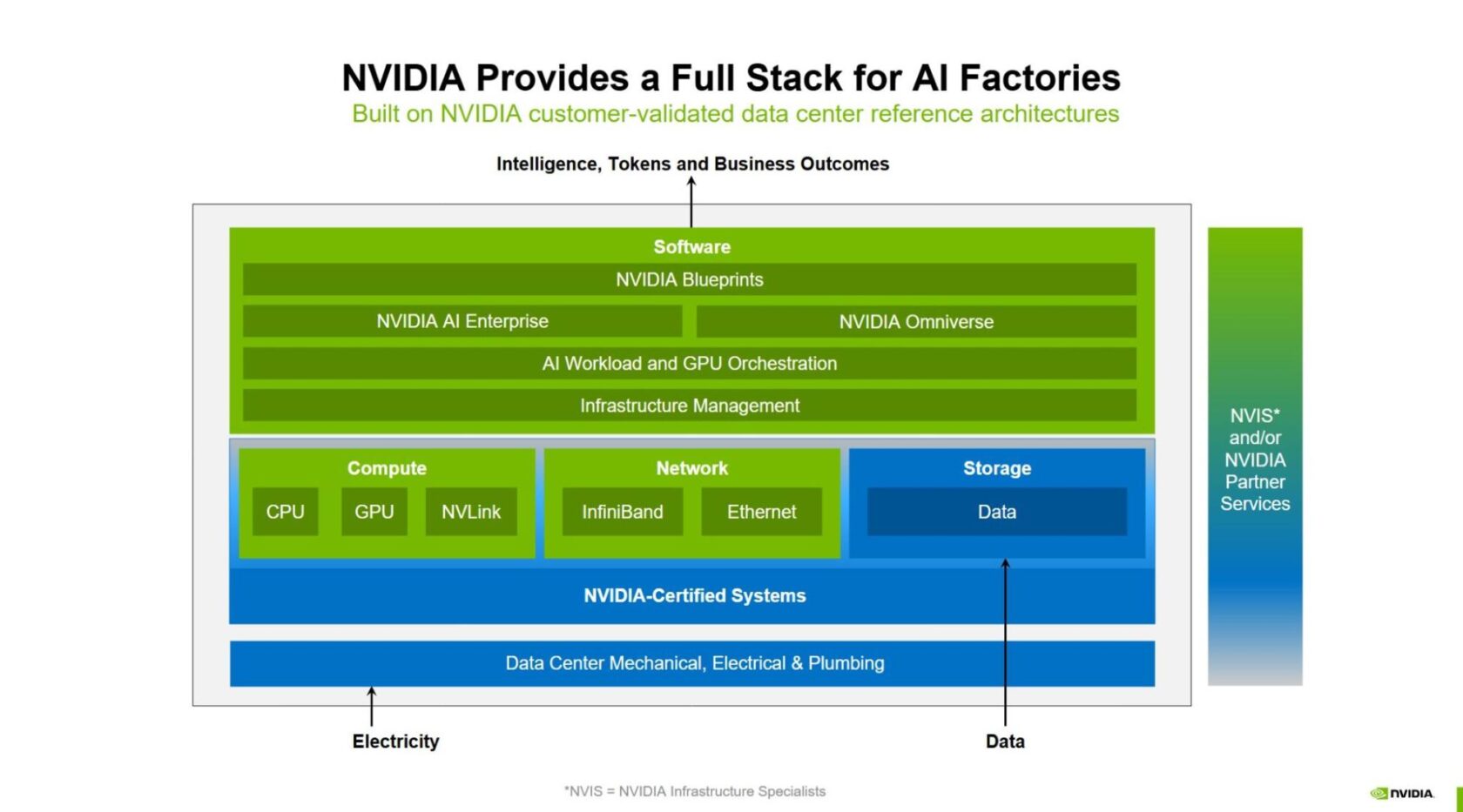

NVIDIA delivers a complete, integrated AI factory stack where every layer — from the silicon to the software — is optimized for training, fine-tuning, and inference at scale. This full-stack approach ensures enterprises can deploy AI factories that are cost effective, high-performing and future-proofed for the exponential growth of AI.

With its ecosystem partners, NVIDIA has created building blocks for the full-stack AI factory, offering:

- Powerful compute performance

- Advanced networking

- Infrastructure management and workload orchestration

- The largest AI inference ecosystem

- Storage and data platforms

- Blueprints for design and optimization

- Reference architectures

- Flexible deployment for every enterprise

Powerful Compute Performance

The heart of any AI factory is its compute power. From NVIDIA Hopper to NVIDIA Blackwell, NVIDIA provides the world's most powerful accelerated computing for this new industrial revolution. With the NVIDIA Blackwell Ultra-based GB300 NVL72 rack-scale solution, AI factories can achieve up to 50x the output for AI reasoning, setting a new standard for efficiency and scale.

The NVIDIA DGX SuperPOD is the exemplar of the turnkey AI factory for enterprises, integrating the best of NVIDIA accelerated computing. NVIDIA DGX Cloud provides an AI factory that delivers NVIDIA accelerated compute with high performance in the cloud.

Global systems partners are building full-stack AI factories for their customers based on NVIDIA accelerated computing — now including the NVIDIA GB200 NVL72 and GB300 NVL72 rack-scale solutions.

Advanced Networking

Moving intelligence at scale requires seamless, high-performance connectivity across the entire AI factory stack. NVIDIA NVLink and NVLink Switch enable high-speed, multi-GPU communication, accelerating data movement within and across nodes.

AI factories also demand a robust network backbone. The NVIDIA Quantum InfiniBand, NVIDIA Spectrum-X Ethernet, and NVIDIA BlueField networking platforms reduce bottlenecks, ensuring efficient, high-throughput data exchange across massive GPU clusters. This end-to-end integration is essential for scaling out AI workloads to million-GPU levels, enabling breakthrough performance in training and inference.

Infrastructure Management and Workload Orchestration

Businesses need a way to harness the power of AI infrastructure with the agility, efficiency and scale of a hyperscaler, but without placing the burdens of cost, complexity and specialized expertise on IT.

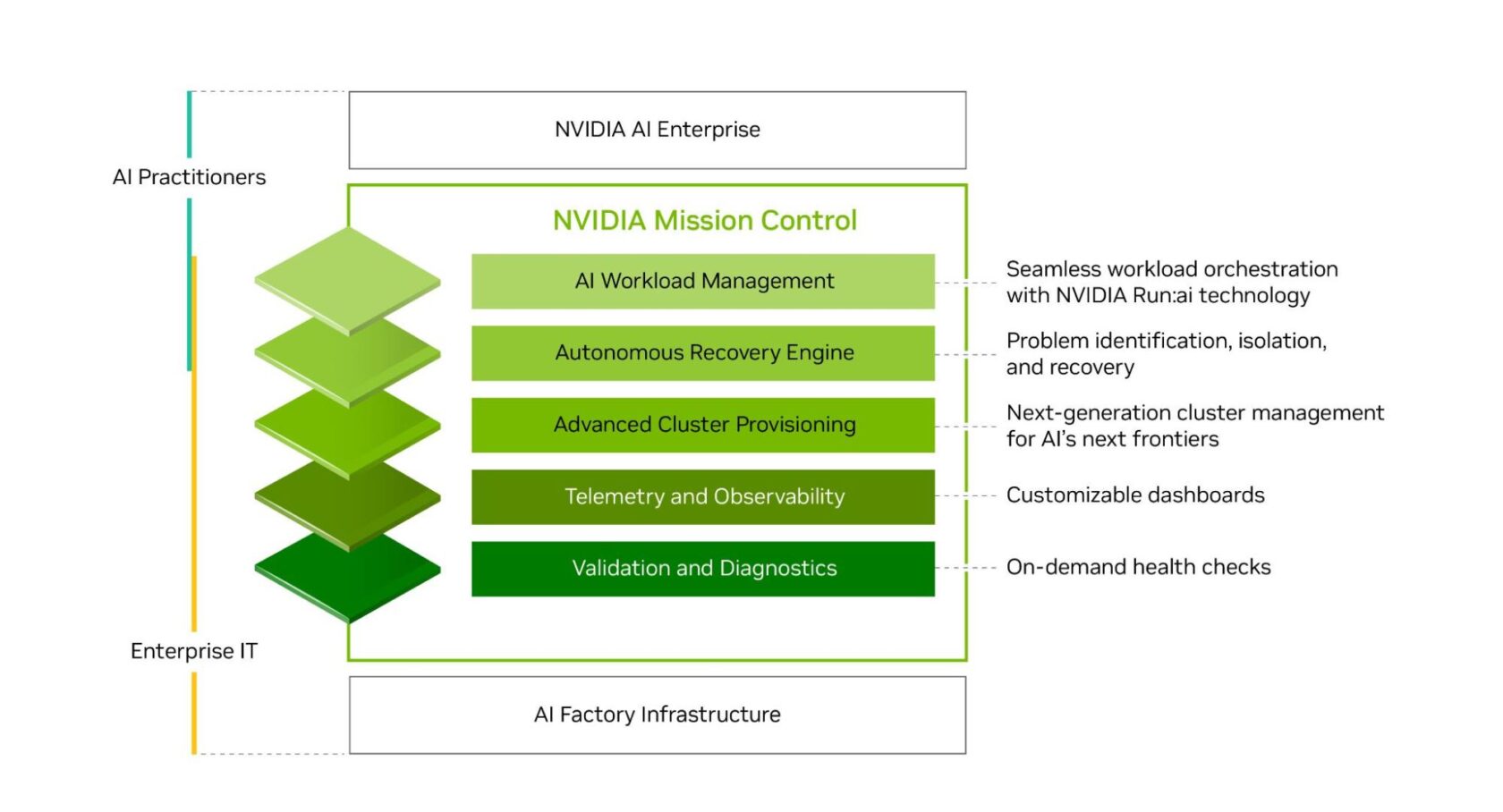

With NVIDIA Run:ai, organizations can benefit from seamless AI workload orchestration and GPU management, optimizing resource utilization while accelerating AI experimentation and scaling workloads. NVIDIA Mission Control software, which includes NVIDIA Run:ai technology, streamlines AI factory operations from workloads to infrastructure while providing full-stack intelligence that delivers world-class infrastructure resiliency.
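To illustrate what GPU workload orchestration involves, the sketch below places jobs that request whole or fractional GPUs onto nodes using first-fit decreasing bin packing, queuing any job that doesn't fit. This is a generic scheduling heuristic chosen for illustration, not NVIDIA Run:ai's algorithm or API. The `Node`, `Job` and `first_fit` names, and the cluster shown, are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_gpus: float  # GPUs still available; fractions model GPU sharing


@dataclass
class Job:
    name: str
    gpus: float       # requested GPUs, possibly fractional


def first_fit(jobs: List[Job], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Place each job on the first node with enough free capacity.

    Jobs are considered largest-first (first-fit decreasing) to reduce fragmentation.
    Returns job name -> node name, or None if the job must wait in the queue.
    """
    placement: Dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.gpus, reverse=True):
        target = next((n for n in nodes if n.free_gpus >= job.gpus), None)
        if target is not None:
            target.free_gpus -= job.gpus  # reserve capacity on the chosen node
        placement[job.name] = target.name if target is not None else None
    return placement


# Hypothetical cluster: two 8-GPU nodes and a mixed queue of jobs.
nodes = [Node("node-a", 8.0), Node("node-b", 8.0)]
jobs = [
    Job("train-llm", 8.0),
    Job("finetune", 4.0),
    Job("notebook", 0.5),
    Job("inference", 2.0),
    Job("big-train", 16.0),  # larger than any single node, so it waits
]
print(first_fit(jobs, nodes))
```

Packing the large training job first leaves one node fully used and the small interactive and inference jobs sharing the other, which is the kind of utilization gain orchestration software aims for at much larger scale.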

The Largest AI Inference Ecosystem

AI factories need the right tools to turn data into intelligence. The NVIDIA AI inference platform, spanning the NVIDIA TensorRT ecosystem, NVIDIA Dynamo and NVIDIA NIM microservices — all part (or soon to be part) of the NVIDIA AI Enterprise software platform — provides the industry’s most comprehensive suite of AI acceleration libraries and optimized software. It delivers maximum inference performance, ultra-low latency and high throughput.
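As an illustration of how an application might talk to an inference microservice, the sketch below builds a chat request, assuming an LLM NIM microservice is running locally and exposes its OpenAI-compatible `/v1/chat/completions` endpoint on port 8000. The base URL, model name, prompt and the `build_chat_request` helper are placeholders for illustration; check the documentation for the specific microservice you deploy.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder endpoint and model name; adjust for your deployment.
req = build_chat_request("http://localhost:8000", "meta/llama-3.1-8b-instruct",
                         "Summarize last quarter's support tickets.")

# Against a running service, the request could be sent like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Building the request separately from sending it keeps the example runnable without a live endpoint and makes the payload easy to inspect or log.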

Storage and Data Platforms

Data fuels AI applications, but the rapidly growing scale and complexity of enterprise data often make it too costly and time-consuming to harness effectively. To thrive in the AI era, enterprises must unlock the full potential of their data.

The NVIDIA AI Data Platform is a customizable reference design to build a new class of AI infrastructure for demanding AI inference workloads. NVIDIA-Certified Storage partners are collaborating with NVIDIA to create customized AI data platforms that can harness enterprise data to reason and respond to complex queries.

Blueprints for Design and Optimization

To design and optimize AI factories, teams can use the NVIDIA Omniverse Blueprint for AI factory design and operations. The blueprint enables engineers to design, test and optimize AI factory infrastructure before deployment using digital twins. By reducing risk and uncertainty, the blueprint helps prevent costly downtime — a critical factor for AI factory operators.

For a 1-gigawatt-scale AI factory, every day of downtime can cost over $100 million. By solving complexity upfront and enabling siloed teams in IT, mechanical, electrical, power and network engineering to work in parallel, the blueprint accelerates deployment and ensures operational resilience.
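Prorating the $100 million-per-day figure to shorter or longer outages shows why catching design problems before deployment matters. Spreading the daily cost evenly across hours is a simplifying assumption, and because the text says "over" $100 million, the results are lower bounds. The `downtime_cost` helper is hypothetical.

```python
# "Over $100 million" per day for a 1-gigawatt-scale AI factory (treated as a lower bound).
DOWNTIME_COST_PER_DAY = 100_000_000


def downtime_cost(hours: float, cost_per_day: float = DOWNTIME_COST_PER_DAY) -> float:
    """Linear estimate of lost value for an outage lasting `hours`."""
    return cost_per_day * hours / 24


for label, hours in [("1 hour", 1), ("8-hour shift", 8), ("1 week", 24 * 7)]:
    print(f"{label:>12}: at least ${downtime_cost(hours):,.0f}")
```

Even a single hour of unplanned downtime works out to more than $4 million, and a week-long delay to at least $700 million.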

Reference Architectures

NVIDIA Enterprise Reference Architectures and NVIDIA Cloud Partner Reference Architectures provide a roadmap for partners designing and deploying AI factories. They help enterprises and cloud providers build scalable, high-performance and secure AI infrastructure based on NVIDIA-Certified Systems with the NVIDIA AI software stack and partner ecosystem.

Every layer of the AI factory stack relies on efficient computing to meet growing AI demands. NVIDIA accelerated computing serves as the foundation across the stack, delivering the highest performance per watt to ensure AI factories operate at peak energy efficiency. With energy-efficient architecture and liquid cooling, businesses can scale AI while keeping energy costs in check.

Flexible Deployment for Every Enterprise

With NVIDIA’s full-stack technologies, enterprises can easily build and deploy AI factories, aligning with customers’ preferred IT consumption models and operational needs.

Some organizations opt for on-premises AI factories to maintain full control over data and performance, while others use cloud-based solutions for scalability and flexibility. Many also turn to their trusted global systems partners for pre-integrated solutions that accelerate deployment.

On Premises

NVIDIA DGX SuperPOD is a turnkey AI factory infrastructure solution that provides accelerated infrastructure with scalable performance for the most demanding AI training and inference workloads. It features a design-optimized combination of AI compute, network fabric, storage and NVIDIA Mission Control software, empowering enterprises to get AI factories up and running in weeks instead of months — and with best-in-class uptime, resiliency and utilization.

AI factory solutions are also offered through the NVIDIA global ecosystem of enterprise technology partners with NVIDIA-Certified Systems. They deliver leading hardware and software technology, combined with data center systems expertise and liquid-cooling innovations, to help enterprises de-risk their AI endeavors and accelerate the return on investment of their AI factory implementations.

These global systems partners are providing full-stack solutions based on NVIDIA reference architectures — integrated with NVIDIA accelerated computing, high-performance networking and AI software — to help customers successfully deploy AI factories and manufacture intelligence at scale.

In the Cloud

For enterprises looking to use a cloud-based solution for their AI factory, NVIDIA DGX Cloud delivers a unified platform on leading clouds to build, customize and deploy AI applications. Every layer of DGX Cloud is optimized and fully managed by NVIDIA, offering the best of NVIDIA AI in the cloud. It features enterprise-grade software and large-scale, contiguous clusters on leading cloud providers, providing scalable compute resources ideal for even the most demanding AI training workloads.

DGX Cloud also includes a dynamic and scalable serverless inference platform that delivers high throughput for AI tokens across hybrid and multi-cloud environments, significantly reducing infrastructure complexity and operational overhead.

By providing a full-stack platform that integrates hardware, software, ecosystem partners and reference architectures, NVIDIA is helping enterprises build AI factories that are cost effective, scalable and high-performing — equipping them to meet the next industrial revolution.

Learn more about NVIDIA AI factories.
