NVIDIA AI tools are enabling deep learning-powered performance capture for creators at every level: visual effects and animation studios, creative professionals and even enthusiasts with nothing more than a camera.

With NVIDIA Vid2Vid Cameo, creators can harness AI to capture their facial movements and expressions from any standard 2D video taken with a professional camera or smartphone. The performance can be applied in real time to animate an avatar, character or painting.

And with 3D body-pose estimation software, creators can capture full-body movements like walking, dancing and performing martial arts — bringing virtual characters to life with AI.

For individuals without 3D experience, these tools make it easy to animate creative projects, even using smartphone footage. Professionals can take it a step further, combining the pose estimation and Vid2Vid Cameo software to transfer their own movements to virtual characters for live streams or animation projects.

Creative studios can also use AI-powered performance capture for concept design or previsualization, quickly conveying how certain movements will look on a digital character.

NVIDIA Demonstrates Performance Capture With Vid2Vid Cameo

NVIDIA Vid2Vid Cameo, available through a demo on the NVIDIA AI Playground, needs just two elements to generate a talking-head video: a still image of the avatar or painting to be animated, plus footage of the original performer speaking, singing or moving their head.

Based on generative adversarial networks, or GANs, the model captures facial motion in real time and transfers it to the virtual character. Trained on 180,000 videos, the network learned to identify 20 key points that model facial motion, encoding the location of the eyes, mouth, nose, eyebrows and more.

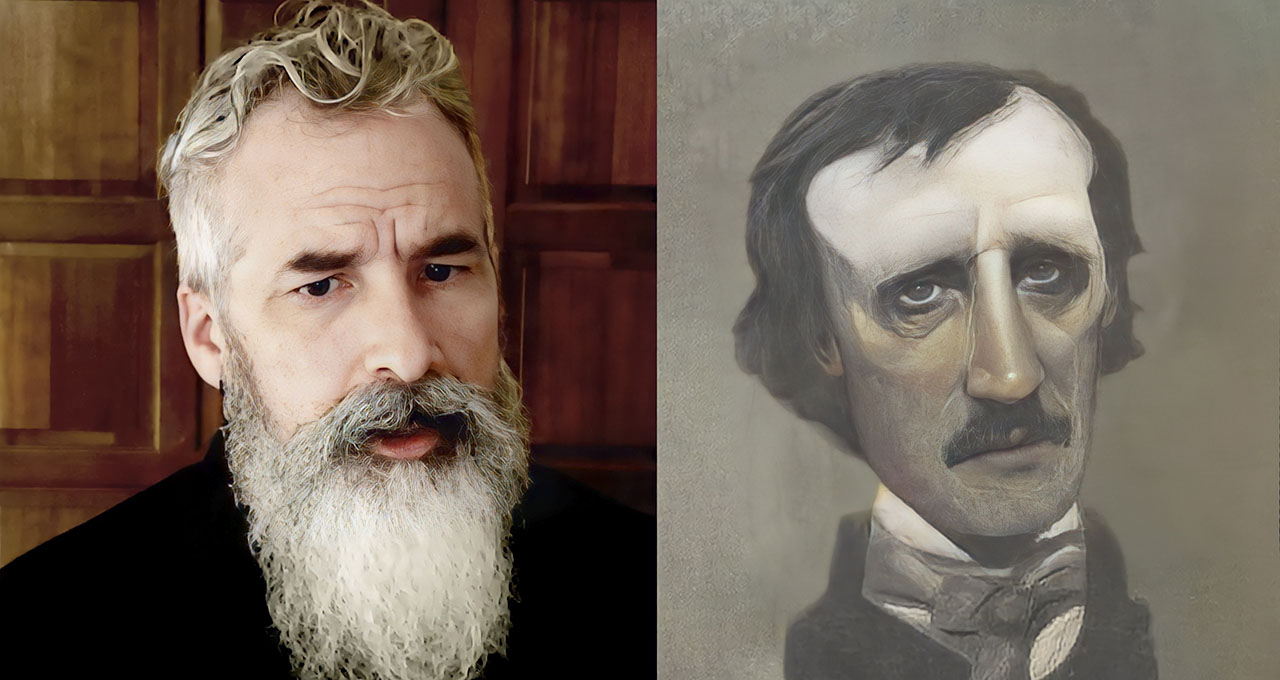

These points are extracted from the video stream of the performer and applied to the avatar or digital character. See how it works in the demo below, which transfers a performance of Edgar Allan Poe’s “Sonnet — to Science” to a portrait of the writer by artist Gary Kelley.
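The key point transfer step described above can be sketched in a few lines. This is a minimal illustration, not NVIDIA's implementation. It assumes a relative-motion scheme, in which each avatar key point is shifted by how far the performer's matching key point has moved since a reference frame. It also omits the GAN that actually renders the animated image. The names `Keypoint` and `transfer_motion` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical type: one (x, y) key point in image coordinates.
Keypoint = Tuple[float, float]

NUM_KEYPOINTS = 20  # the article states the network identifies 20 key points


def transfer_motion(
    avatar_kp: List[Keypoint],
    performer_ref: List[Keypoint],
    performer_now: List[Keypoint],
) -> List[Keypoint]:
    """Shift each avatar key point by the performer's displacement.

    Assumption (relative-motion transfer, not confirmed by the source):
        out[i] = avatar_kp[i] + (performer_now[i] - performer_ref[i])
    where performer_ref holds the performer's key points in a reference
    frame (e.g. the first frame of the video).
    """
    if not (len(avatar_kp) == len(performer_ref) == len(performer_now)):
        raise ValueError("all key point lists must have the same length")

    out: List[Keypoint] = []
    for (ax, ay), (rx, ry), (nx, ny) in zip(avatar_kp, performer_ref, performer_now):
        # Apply only the performer's motion, not their absolute positions.
        out.append((ax + (nx - rx), ay + (ny - ry)))
    return out
```

Transferring displacements rather than absolute positions lets the avatar keep its own facial proportions. For example, a wide-set painted face still opens its mouth by the same amount as the performer, without being reshaped to match the performer's face.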

Visual Platforms Integrate Vid2Vid Cameo, Pose Estimation by NVIDIA

While Vid2Vid Cameo captures detailed facial expressions, pose estimation AI tracks movement of the whole body — a key capability for creators working with virtual characters that perform complex motions or move around a digital scene.

Pose Tracker is a convolutional neural network model available as an Extension in the NVIDIA Omniverse 3D design collaboration and world simulation platform. It allows users to upload footage or stream live video as a motion source to animate a character in real time. Creators can download NVIDIA Omniverse for free and get started with step-by-step tutorials.

Companies that have integrated NVIDIA AI for performance capture into their products include:

- Derivative, maker of TouchDesigner, a node-based real-time visual development platform, has integrated Vid2Vid Cameo to provide easy-to-use facial tracking.

- Notch, a company offering a real-time graphics tool for 3D, visual effects and live-events visuals, uses body-pose estimation AI from NVIDIA to help artists simplify stage setups. Instead of relying on custom hardware-tracking systems, Notch users can work with standard camera equipment to control 3D character animation in real time.

- Pixotope, a leading virtual production company, uses NVIDIA AI-powered real-time talent tracking to drive interactive elements for live productions. The Norway-based company shared its work enabling interaction between real and virtual elements on screen at the most recent NVIDIA GTC.

Learn more about NVIDIA’s latest advances in AI, digital humans and virtual worlds at SIGGRAPH, the world’s largest gathering of computer graphics experts, running through Thursday, Aug. 11.