NVIDIA Wins Every MLPerf Training v5.1 Benchmark

In the age of AI reasoning, training smarter, more capable models is critical to scaling intelligence. Delivering the massive performance to meet this new age requires breakthroughs across GPUs, CPUs,… Read Article

NVIDIA Blackwell Ultra Sets the Bar in New MLPerf Inference Benchmark

Inference performance is critical, as it directly influences the economics of an AI factory. The higher the throughput of AI factory infrastructure, the more tokens it can produce at a… Read Article

Hot Topics at Hot Chips: Inference, Networking, AI Innovation at Every Scale — All Built on NVIDIA

AI reasoning, inference and networking will be top of mind for attendees of next week’s Hot Chips conference. A key forum for processor and system architects from industry and academia,… Read Article

NVIDIA Blackwell Delivers Breakthrough Performance in Latest MLPerf Training Results

NVIDIA is working with companies worldwide to build out AI factories, speeding the training and deployment of next-generation AI applications that use the latest advancements in training and inference… Read Article

NVIDIA and Microsoft Accelerate Agentic AI Innovation, From Cloud to PC

Agentic AI is redefining scientific discovery and unlocking research breakthroughs and innovations across industries. Through deepened collaboration, NVIDIA and Microsoft are delivering advancements that accelerate agentic AI-powered applications from the… Read Article

Speed Demon: NVIDIA Blackwell Takes Pole Position in Latest MLPerf Inference Results

In the latest MLPerf Inference v5.0 benchmarks, which reflect some of the most challenging inference scenarios, the NVIDIA Blackwell platform set records and marked NVIDIA’s first MLPerf submission using… Read Article

Explaining Tokens — the Language and Currency of AI

Under the hood of every AI application are algorithms that churn through data in their own language, one based on a vocabulary of tokens. Tokens are tiny units of data… Read Article

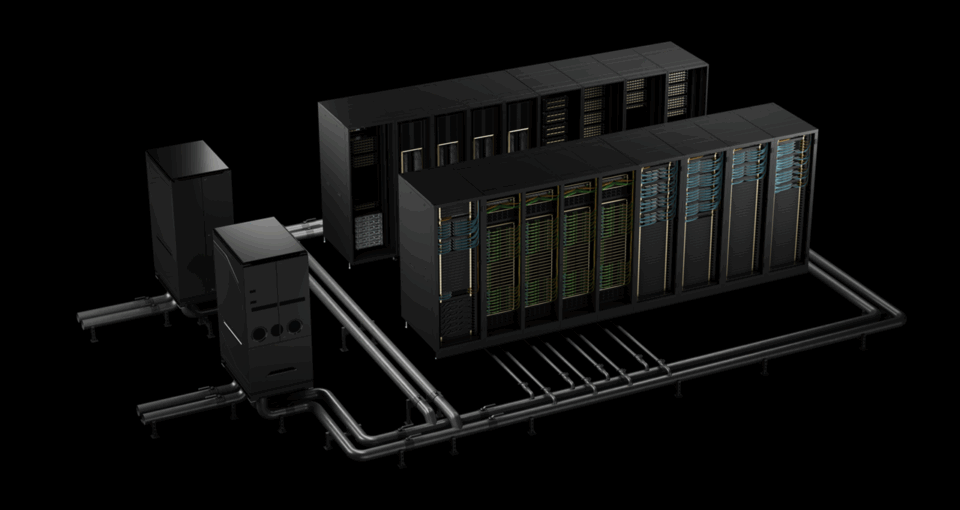

NVIDIA Blackwell Now Generally Available in the Cloud

AI reasoning models and agents are set to transform industries, but delivering their full potential at scale requires massive compute and optimized software. The “reasoning” process involves multiple models, generating… Read Article

Fast, Low-Cost Inference Offers Key to Profitable AI

Businesses across every industry are rolling out AI services this year. For Microsoft, Oracle, Perplexity, Snap and hundreds of other leading companies, using the NVIDIA AI inference platform — a… Read Article