NVIDIA will be showcased next week as the winner of the fiercely contested 3D Occupancy Prediction Challenge for autonomous driving development at the Computer Vision and Pattern Recognition Conference (CVPR), in Vancouver, Canada.

The competition had more than 400 submissions from nearly 150 teams across 10 regions.

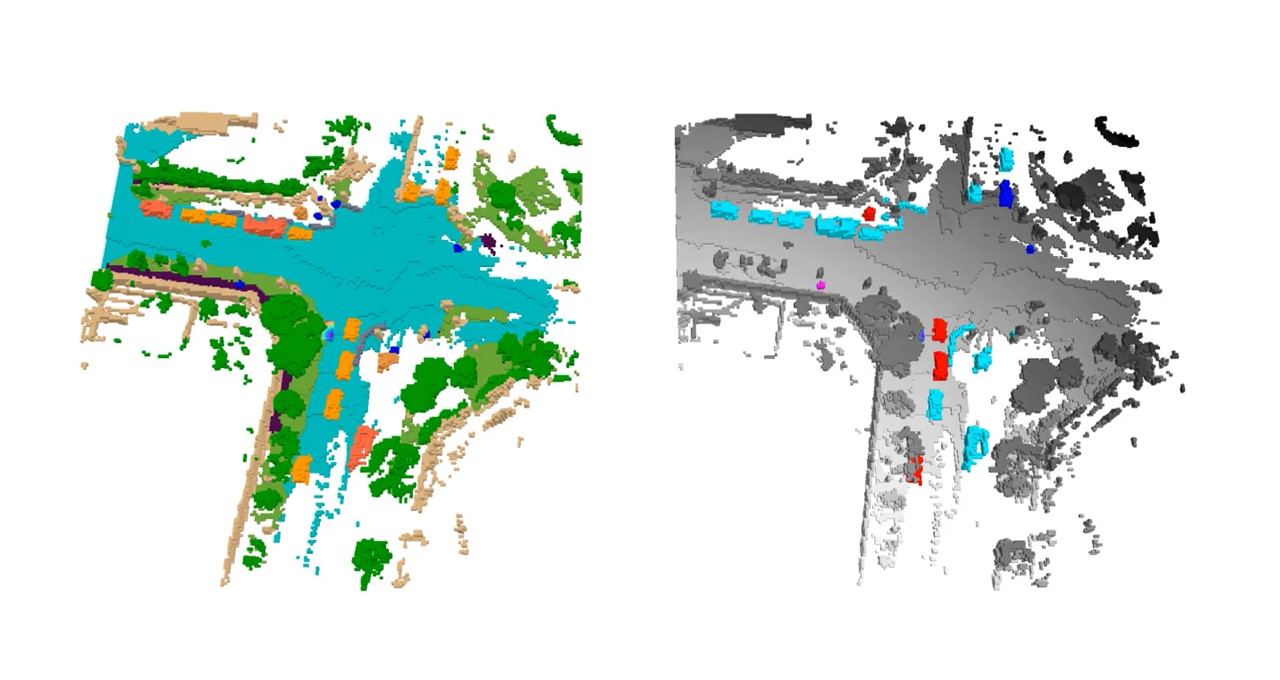

3D occupancy prediction is the process of forecasting the status of each voxel in a scene, that is, each data point on a 3D bird’s-eye-view grid. Voxels can be identified as free, occupied or unknown.
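To make the voxel idea concrete, here is a minimal sketch of an occupancy grid whose cells each carry one of the three states named above. The `VoxelState`, `make_grid` and `count_states` names and the tiny 4 x 4 x 2 grid are illustrative assumptions, not part of NVIDIA's solution or the challenge's data format.

```python
from enum import Enum


class VoxelState(Enum):
    """The three voxel labels described in the article (names are hypothetical)."""
    UNKNOWN = 0
    FREE = 1
    OCCUPIED = 2


def make_grid(nx, ny, nz, fill=VoxelState.UNKNOWN):
    """Build an nx * ny * nz grid as nested lists, every voxel set to `fill`."""
    return [[[fill for _ in range(nz)] for _ in range(ny)] for _ in range(nx)]


def count_states(grid):
    """Count how many voxels hold each state."""
    counts = {state: 0 for state in VoxelState}
    for plane in grid:
        for row in plane:
            for voxel in row:
                counts[voxel] += 1
    return counts


# A toy scene: everything starts unknown, then a strip of ground-level
# voxels is marked free and a two-voxel-tall obstacle is marked occupied.
grid = make_grid(4, 4, 2)
for x in range(4):
    grid[x][0][0] = VoxelState.FREE
grid[2][1][0] = VoxelState.OCCUPIED
grid[2][1][1] = VoxelState.OCCUPIED

counts = count_states(grid)
print({state.name: n for state, n in counts.items()})
```

A real perception model would predict these labels from camera images over a far larger grid; the sketch only shows the shape of the output.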

Watch the NVIDIA DRIVE Labs video on 3D occupancy prediction.

Critical to the development of safe and robust self-driving systems, 3D occupancy grid prediction provides information to autonomous vehicle (AV) planning and control stacks using state-of-the-art convolutional neural networks and transformer models, which are enabled by the NVIDIA DRIVE platform.

“NVIDIA’s winning solution features two important AV advancements,” said Zhiding Yu, senior research scientist for learning and perception at NVIDIA. “It demonstrates a state-of-the-art model design that yields excellent bird’s-eye-view perception. It also shows the effectiveness of visual foundation models with up to 1 billion parameters and large-scale pretraining in 3D occupancy prediction.”

Perception for autonomous driving has evolved in recent years from handling 2D tasks, such as detecting objects or free space in images, to reasoning about the world in 3D from multiple input images.

This 3D reasoning now provides a flexible, precise and fine-grained representation of objects in complex traffic scenes, which is “critical for achieving the safety perception requirements for autonomous driving,” according to Jose Alvarez, director of AV applied research and distinguished scientist at NVIDIA.

Yu will present the NVIDIA Research team’s award-winning work at CVPR’s End-to-End Autonomous Driving Workshop on Sunday, June 18, at 10:20 a.m. PT, as well as at the Vision-Centric Autonomous Driving Workshop on Monday, June 19, at 4:00 p.m. PT.

In addition to winning first place in the challenge, NVIDIA will receive an Innovation Award at the event, recognizing its “fresh insights into the development of view transformation modules,” with “substantially improved performance” compared to previous approaches, according to the CVPR workshop committee.

Read NVIDIA’s technical report on the submission.

Safer Vehicles With 3D Occupancy Prediction

While traditional 3D object detection — detecting and representing objects in a scene, often using 3D bounding boxes — is a core task in AV perception, it has its limitations. For example, it lacks expressiveness, meaning the bounding boxes might not represent enough real-world information. It also requires defining taxonomies and ground truths for all possible objects, even ones rarely seen in the real world, such as road hazards that may have fallen off a truck.

In contrast, 3D occupancy prediction provides rich information about the world to a self-driving vehicle’s planning stack, which is necessary for end-to-end autonomous driving.
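The expressiveness gap can be illustrated with a small, hedged sketch: for an irregularly shaped object, an axis-aligned 3D bounding box covers many voxels the object does not actually fill, while a voxel set describes its shape exactly. The L-shaped object and the helper names `bounding_box` and `box_volume` are hypothetical and chosen only for illustration.

```python
def bounding_box(voxels):
    """Return the (min corner, max corner) of the axis-aligned box around voxels."""
    xs, ys, zs = zip(*voxels)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def box_volume(lo, hi):
    """Number of voxels inside the inclusive box spanned by lo and hi."""
    return (hi[0] - lo[0] + 1) * (hi[1] - lo[1] + 1) * (hi[2] - lo[2] + 1)


# Hypothetical L-shaped obstacle, one voxel tall: a 5-voxel arm along x
# plus a 3-voxel arm along y that shares the corner at the origin.
occupied = {(x, 0, 0) for x in range(5)} | {(0, y, 0) for y in range(1, 4)}

lo, hi = bounding_box(occupied)
box_voxels = box_volume(lo, hi)
fill_ratio = len(occupied) / box_voxels

print(f"occupied voxels: {len(occupied)}, box voxels: {box_voxels}, "
      f"fill ratio: {fill_ratio:.2f}")
```

In this toy case the box claims more than twice the space the object occupies, space that a planner relying only on boxes would have to treat as blocked.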

Software-defined vehicles can be continuously upgraded with new developments that are proven and validated over time. State-of-the-art software updates that evolve from research initiatives, such as the ones recognized at CVPR, are enabling new features and safer driving capabilities.

The NVIDIA DRIVE platform offers a path to production for automakers, providing full-stack hardware and software for safe and secure AV development, from the car to the data center.

More on the CVPR Challenge

The 3D Occupancy Prediction Challenge at CVPR required participants to develop algorithms that solely used camera input during inference. Participants could use open-source datasets and models, facilitating the exploration of data-driven algorithms and large-scale models. The organizers provided a baseline sandbox for the latest state-of-the-art 3D occupancy prediction algorithms in real-world scenarios.

NVIDIA at CVPR

NVIDIA is presenting nearly 30 papers and presentations at CVPR. Experts who’ll discuss autonomous driving include:

- Jose Alvarez on emerging challenges for 3D perception in AVs during the End-to-End Autonomous Driving Workshop: Emerging Tasks and Challenges; and on optimizing large deep models for real-time inference at the Embedded Vision Workshop.

- Nikolai Smolyanskiy, director of deep learning at NVIDIA, on real-time traffic prediction for AVs during the End-to-End Autonomous Driving Workshop: Perception, Prediction, Planning and Simulation.

- Robin Jenkin, distinguished engineer at NVIDIA, on image quality in fisheye cameras at the OmniCV Workshop, held in conjunction with CVPR.

- Xinshuo Weng, research scientist for AV research at NVIDIA, on vision solutions for autonomous driving during the Vision-Centric Autonomous Driving Workshop.

View other talks on the agenda and learn more about NVIDIA at CVPR, which runs June 18-22.

Featured image courtesy of OccNet and Occ3D.