Today’s landscape of free, open-source large language models (LLMs) is like an all-you-can-eat buffet for enterprises. This abundance can be overwhelming for developers building custom generative AI applications, as they need to navigate unique project and business requirements, including compatibility, security and the data used to train the models.

NVIDIA AI Foundation Models — a curated collection of enterprise-grade pretrained models — give developers a running start for bringing custom generative AI to their enterprise applications.

NVIDIA-Optimized Foundation Models Speed Up Innovation

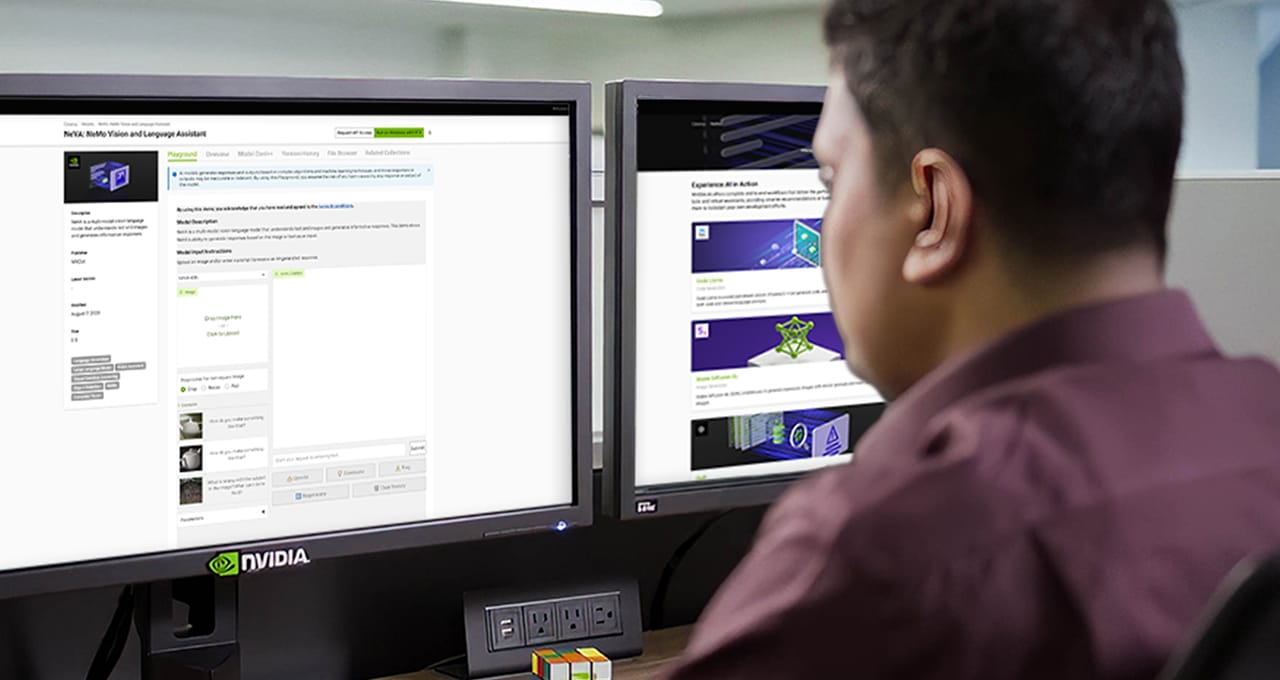

NVIDIA AI Foundation Models can be experienced through a simple user interface or API, directly from a browser. Developers can also access these models from NVIDIA AI Foundation Endpoints to test model performance from within their enterprise applications.

Available models include leading community models such as Llama 2, Stable Diffusion XL and Mistral, which are formatted to help developers streamline customization with proprietary data. The models have also been optimized with NVIDIA TensorRT-LLM to deliver the highest throughput and lowest latency and to run at scale on any NVIDIA GPU-accelerated stack. For instance, the Llama 2 model optimized with TensorRT-LLM runs nearly 2x faster on NVIDIA H100.
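For teams that want to check throughput and latency against their own workload, a small timing harness is often enough to get started. The sketch below is a minimal illustration, not NVIDIA tooling: `benchmark`, `speedup` and `fake_model` are hypothetical names, and `fake_model` is a stand-in that would be swapped for a real call to whichever model endpoint is under test.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(generate: Callable[[str], str], prompts: List[str]) -> Dict[str, float]:
    """Time each call to `generate` and summarize latency and throughput."""
    if not prompts:
        raise ValueError("prompts must not be empty")
    latencies = []
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        generate(prompt)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return {
        "requests": len(prompts),
        "mean_latency_s": statistics.mean(latencies),
        "max_latency_s": max(latencies),
        "throughput_rps": len(prompts) / elapsed,
    }


def speedup(baseline: Dict[str, float], candidate: Dict[str, float]) -> float:
    """Ratio of candidate to baseline throughput (2.0 means twice as fast)."""
    return candidate["throughput_rps"] / baseline["throughput_rps"]


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in: replace with a real request to the model under test.
    time.sleep(0.01)
    return prompt.upper()


if __name__ == "__main__":
    print(benchmark(fake_model, ["hello", "world", "nvidia"]))
```

Running the same prompt set against two deployments and passing both results to `speedup` gives a like-for-like ratio. Requests here are issued one at a time, so the figures reflect sequential latency rather than performance under concurrent load.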

The new NVIDIA family of Nemotron-3 8B foundation models supports the creation of today’s most advanced enterprise chat and Q&A applications for a broad range of industries, including healthcare, telecommunications and financial services.

The models are a starting point for customers building secure, production-ready generative AI applications. They are trained on responsibly sourced datasets and perform comparably to much larger models, which makes them ideal for enterprise deployments.

Multilingual capabilities are a key differentiator of the Nemotron-3 8B models. Out of the box, the models are proficient in over 50 languages, including English, German, Russian, Spanish, French, Japanese, Chinese, Korean, Italian and Dutch.

Fast-Track Customization to Deployment

Enterprises leveraging generative AI across business functions need an AI foundry to customize models for their unique applications. NVIDIA’s AI foundry features three elements — NVIDIA AI Foundation Models, NVIDIA NeMo framework and tools, and NVIDIA DGX Cloud AI supercomputing services. Together, these provide an end-to-end enterprise offering for creating custom generative AI models.

Importantly, enterprises own their customized models and can deploy them virtually anywhere on accelerated computing with enterprise-grade security, stability and support using NVIDIA AI Enterprise software.

NVIDIA AI Foundation Models are freely available to experiment with now on the NVIDIA NGC catalog and Hugging Face, and are also hosted in the Microsoft Azure AI model catalog.

Explore generative AI sessions and experiences at NVIDIA GTC, the global conference on AI and accelerated computing, running March 18-21 in San Jose, Calif., and online.