Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series, which takes an engineering-focused look at individual autonomous vehicle challenges and how NVIDIA DRIVE addresses them. Catch up on all our automotive posts here.

Lidar can give autonomous vehicles laser focus.

By bouncing laser signals off the surrounding environment, these sensors can enable a self-driving car to construct a detailed and accurate 3D picture of what’s around it.

However, traditional methods for processing lidar data pose significant challenges. These include a limited ability to detect and classify different types of objects, scenes and weather conditions, as well as limited performance and robustness.

In this DRIVE Labs episode, we introduce our multi-view LidarNet deep neural network, which uses multiple perspectives, or views, of the scene around the car to overcome the traditional limitations of lidar-based processing.

AI-Powered Solutions

AI in the form of DNN-based approaches has become the go-to solution to address traditional lidar perception challenges.

One AI method uses lidar DNNs that perform top‐down or “bird’s eye view” (BEV) object detection on lidar point cloud data. A virtual camera positioned at some height above the scene, similar to a bird flying overhead, reprojects 3D coordinates of each data point into that virtual camera view via orthogonal projection.
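
The top-down projection described above can be sketched in a few lines of Python. This is a minimal illustration, not NVIDIA's implementation: the function name `project_to_bev`, the grid extents, the cell size and the choice to keep the maximum height per cell are all assumptions made for the example.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, vehicle frame (assumed)


def project_to_bev(
    points: Iterable[Point],
    x_range: Tuple[float, float] = (-40.0, 40.0),
    y_range: Tuple[float, float] = (-40.0, 40.0),
    cell: float = 0.5,
) -> Dict[Tuple[int, int], float]:
    """Orthographic top-down projection of a point cloud.

    The z coordinate is dropped for positioning, and (x, y) is binned
    into square grid cells. Each occupied cell keeps the highest z seen
    (an assumed, simple way to summarise a cell).
    """
    grid: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        # Skip points that fall outside the virtual camera's footprint.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        row = int((x - x_range[0]) / cell)
        col = int((y - y_range[0]) / cell)
        key = (row, col)
        if key not in grid or z > grid[key]:
            grid[key] = z
    return grid


cloud = [(0.0, 0.0, 1.0), (0.1, 0.1, 1.8), (100.0, 0.0, 0.0)]
bev = project_to_bev(cloud)
```

Because the projection is orthographic, a point's cell depends only on its x and y coordinates, so objects keep the same footprint regardless of their distance from the sensor.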

BEV lidar DNNs use 2D convolutions in their layers to detect dynamic objects such as cars, trucks, buses, pedestrians, cyclists, and other road users. 2D convolutions work fast, so they are well-suited for use in real-time autonomous driving applications.

However, this approach can get tricky when objects look alike top-down. For example, in BEV, pedestrians or bikes may appear similar to objects like poles, tree trunks or bushes, resulting in perception errors.

Another AI method uses 3D lidar point cloud data as input to a DNN that uses 3D convolutions in its layers to detect objects. This improves accuracy since a DNN can detect objects using their 3D shapes. However, 3D convolutional DNN processing of lidar point clouds is difficult to run in real-time for autonomous driving applications.
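
A rough operation count shows why dense 3D convolutions are harder to run in real time. The sketch below counts multiply-accumulate operations for a single dense layer with stride 1. The grid and channel sizes are illustrative assumptions, not LidarNet's actual dimensions, and sparse 3D convolution schemes would lower the 3D figure.

```python
def conv2d_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates for one dense 2D convolution (stride 1, same padding)."""
    return h * w * c_in * c_out * k * k


def conv3d_macs(d: int, h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates for one dense 3D convolution (stride 1, same padding)."""
    return d * h * w * c_in * c_out * k * k * k


# Illustrative sizes only (assumed for this example).
bev_macs = conv2d_macs(512, 512, 32, 64, 3)
voxel_macs = conv3d_macs(32, 512, 512, 32, 64, 3)

# The ratio equals depth * kernel size: 32 * 3 = 96.
ratio = voxel_macs // bev_macs
print(f"3D layer costs {ratio}x the 2D layer")
```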

Enter Multi-View LidarNet

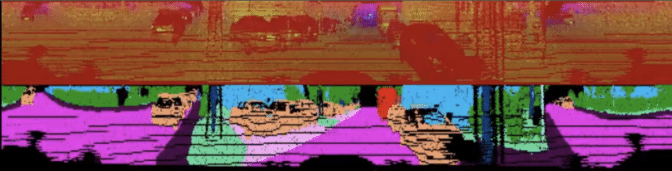

To overcome the limitations of both of these AI-based approaches, we developed our multi‐view LidarNet DNN, which acts in two stages. The first stage extracts semantic information about the scene using lidar scan data in perspective view (Figure 1). This “unwraps” a 360-degree surround lidar range scan so it looks as though the entire panorama is in front of the self-driving car.
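
One common way to "unwrap" a surround scan is a spherical projection, in which each point's azimuth selects an image column and its elevation selects a row. The sketch below is an assumption about how such a perspective view could be built, not a description of LidarNet's preprocessing. The function name, image size and vertical field of view are hypothetical.

```python
import math
from typing import Iterable, List, Optional, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in metres (assumed)


def project_to_range_image(
    points: Iterable[Point],
    width: int = 360,
    height: int = 32,
    fov_up_deg: float = 3.0,
    fov_down_deg: float = -25.0,
) -> List[List[Optional[float]]]:
    """Unwrap a 360-degree scan into a height x width range image.

    Each pixel stores the range of the closest point that lands in it,
    or None if no point does. The forward direction maps to the centre
    column, so the whole panorama appears laid out in front of the car.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        azimuth = math.atan2(y, x)    # in [-pi, pi]
        elevation = math.asin(z / r)  # in [-pi/2, pi/2]
        if not (fov_down <= elevation <= fov_up):
            continue
        col = int(0.5 * (1.0 - azimuth / math.pi) * width) % width
        row = min(height - 1, int((fov_up - elevation) / fov * height))
        current = image[row][col]
        if current is None or r < current:
            image[row][col] = r
    return image


scan = [(10.0, 0.0, 0.0), (5.0, 0.0, 0.0), (0.0, 0.0, 10.0)]
range_image = project_to_range_image(scan)
```

In this example both forward-facing points land in the same pixel, and the closer one (5 m) is kept. The point directly overhead is outside the assumed vertical field of view and is discarded.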

This first-stage semantic segmentation approach performs very well for predicting object classes. This is because the DNN can better observe object shapes in perspective view (for example, the shape of a walking human).

The first stage segments the scene into dynamic objects of different classes, such as cars, trucks, buses, pedestrians, cyclists and motorcyclists, and into static road scene components, such as the road surface, sidewalks, buildings, trees and traffic signs.

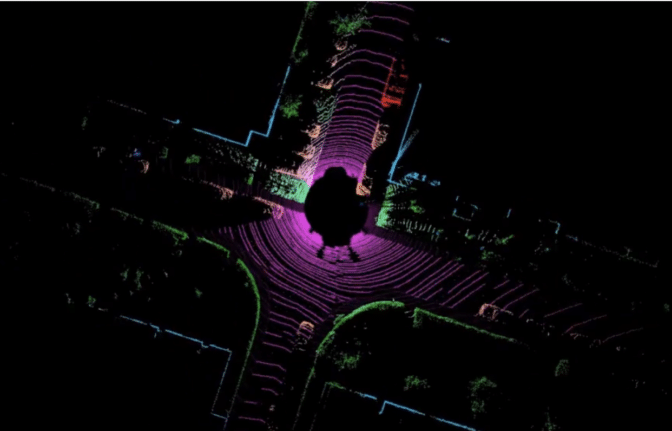

The semantic segmentation output of LidarNet’s first stage is then projected into BEV and combined with height data at each location, which is obtained from the lidar point cloud. The resulting output is applied as input to the second stage (Figure 2).
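
The hand-off between the two stages can be pictured as follows. In this hedged sketch, each lidar point already carries a class label from the first stage, and the points are binned into BEV cells that store a class and a height. The source does not specify how labels and heights are combined per cell, so the majority vote and maximum height used here, along with the name `build_bev_input`, are assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

LabeledPoint = Tuple[float, float, float, str]  # (x, y, z, class label)


def build_bev_input(
    labeled_points: Iterable[LabeledPoint],
    x_range: Tuple[float, float],
    y_range: Tuple[float, float],
    cell: float,
) -> Dict[Tuple[int, int], Tuple[str, float]]:
    """Project per-point semantic labels into BEV and attach height.

    Returns a mapping from (row, col) to (majority class, max height).
    Both aggregation rules are illustrative assumptions.
    """
    votes: Dict[Tuple[int, int], Counter] = defaultdict(Counter)
    max_height: Dict[Tuple[int, int], float] = {}
    for x, y, z, label in labeled_points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        key = (int((x - x_range[0]) / cell), int((y - y_range[0]) / cell))
        votes[key][label] += 1
        if key not in max_height or z > max_height[key]:
            max_height[key] = z
    return {
        key: (counter.most_common(1)[0][0], max_height[key])
        for key, counter in votes.items()
    }


segmented = [
    (1.0, 1.0, 0.2, "pedestrian"),
    (1.1, 1.1, 1.7, "pedestrian"),
    (1.2, 1.0, 0.0, "road"),
    (5.0, 5.0, 0.0, "road"),
]
bev_input = build_bev_input(segmented, (0.0, 10.0), (0.0, 10.0), 1.0)
```

Here the cell containing the pedestrian keeps both its class and its 1.7 m height, which is the kind of cue that helps separate a person from a similarly shaped pole or bush when viewed from above.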

The second-stage DNN is trained on BEV-labeled data to predict top-down 2D bounding boxes around objects identified by the first stage. This stage also uses semantic and height information to extract object instances. Instance extraction is easier in BEV because objects do not occlude each other in this view.

Chaining these two DNN stages yields a lidar DNN that consumes only lidar data and uses end-to-end deep learning to output a rich semantic segmentation of the scene, complete with 2D bounding boxes for objects. With this multi-view approach, it can detect vulnerable road users, such as motorcyclists, bicyclists and pedestrians, with high accuracy and completeness. The DNN is also very efficient: inference runs at 7 ms per lidar scan on the NVIDIA DRIVE™ AGX platform.

In addition to multi-view LidarNet, our lidar processing software stack includes a lidar object tracker. The tracker is a computer vision-based post-processing system that uses the BEV 2D bounding box information and lidar point geometry to compute 3D bounding boxes for each object instance. The tracker also helps stabilize per-frame DNN misdetections and, along with a low-level lidar processor, computes geometric fences that represent hard physical boundaries that a car should avoid.
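
As a simplified illustration of lifting a BEV box into 3D, the sketch below takes an axis-aligned 2D box and uses the lidar points inside it to find the vertical extent. The actual tracker is not described in this detail, so the axis-aligned box format and the function name `lift_box_to_3d` are assumptions. A real system would also handle box orientation and outlier points.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]         # (x, y, z) in metres
Box2D = Tuple[float, float, float, float]  # (x_min, x_max, y_min, y_max)
Box3D = Tuple[float, float, float, float, float, float]


def lift_box_to_3d(box: Box2D, points: Iterable[Point]) -> Optional[Box3D]:
    """Extend a BEV 2D box to 3D using the heights of the points inside it.

    Returns (x_min, x_max, y_min, y_max, z_min, z_max), or None when no
    lidar point falls inside the 2D footprint.
    """
    x_min, x_max, y_min, y_max = box
    heights = [
        z for x, y, z in points
        if x_min <= x <= x_max and y_min <= y <= y_max
    ]
    if not heights:
        return None
    return (x_min, x_max, y_min, y_max, min(heights), max(heights))


cloud = [(1.0, 1.0, 0.1), (1.5, 0.5, 1.6), (5.0, 5.0, 3.0)]
box3d = lift_box_to_3d((0.0, 2.0, 0.0, 2.0), cloud)
```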

This combination of AI-based and traditional computer vision-based methods increases the robustness of our lidar perception software stack. Moreover, the rich perception information provided by lidar perception can be combined with camera and radar detections to design even more robust Level 4 to Level 5 autonomous systems.