Editor’s note: This is the latest post in our NVIDIA DRIVE Labs series, which takes an engineering-focused look at individual autonomous vehicle challenges and how NVIDIA DRIVE addresses them. Catch up on all of our automotive posts here.

A self-driving car’s view of the world often includes bounding boxes — cars, pedestrians and stop signs neatly encased in red and green rectangles.

In the real world, however, not everything fits in a box.

For highly complex driving scenarios, such as a construction zone marked by traffic cones, a sofa chair or other road debris in the middle of the highway, or a pedestrian unloading a moving van with cargo sticking out the back, it’s helpful for the vehicle’s perception software to provide a more detailed understanding of its surroundings.

Such fine-grained results can be obtained by segmenting image content with pixel-level accuracy, an approach known as panoptic segmentation.

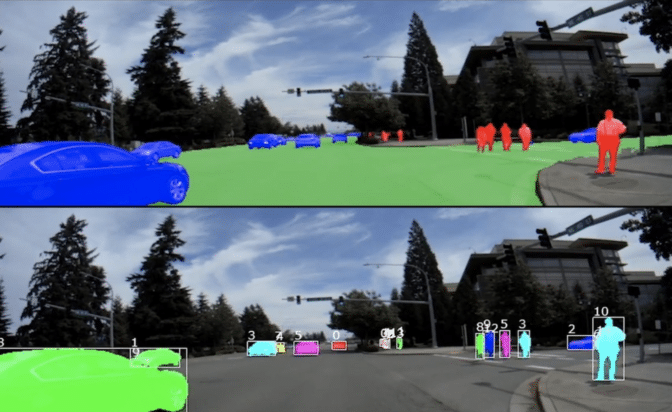

With panoptic segmentation, the image can be accurately parsed for both semantic content (which pixels represent cars vs. pedestrians vs. drivable space) and instance content (which pixels belong to the same car vs. a different car).
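To make the semantic/instance split concrete, here is a minimal Python sketch of one way a panoptic result can be stored: a single integer per pixel that packs a class ID and an instance ID. The `class_id * 1000 + instance_id` encoding resembles the convention used by the Cityscapes dataset; it is an assumption here, not a description of NVIDIA's output format, and the class table and helper names are hypothetical.

```python
from typing import Dict, List, Tuple

LABEL_DIVISOR = 1000  # assumed: up to 999 instances per class
CLASS_NAMES = {0: "road", 1: "car", 2: "pedestrian"}  # hypothetical class table


def encode(class_id: int, instance_id: int) -> int:
    """Pack a semantic class and an instance index into one panoptic ID."""
    if not 0 <= instance_id < LABEL_DIVISOR:
        raise ValueError("instance_id out of range")
    return class_id * LABEL_DIVISOR + instance_id


def decode(panoptic_id: int) -> Tuple[int, int]:
    """Recover (class_id, instance_id); "stuff" such as road uses instance 0."""
    return divmod(panoptic_id, LABEL_DIVISOR)


def summarize(panoptic_map: List[List[int]]) -> Dict[Tuple[str, int], int]:
    """Count pixels per segment, keyed by (class name, instance ID)."""
    counts: Dict[Tuple[str, int], int] = {}
    for row in panoptic_map:
        for panoptic_id in row:
            class_id, instance_id = decode(panoptic_id)
            key = (CLASS_NAMES[class_id], instance_id)
            counts[key] = counts.get(key, 0) + 1
    return counts


# Toy 3x4 frame: drivable road plus two distinct cars.
road, car_1, car_2 = encode(0, 0), encode(1, 1), encode(1, 2)
panoptic_map = [
    [car_1, car_1, road, car_2],
    [car_1, car_1, road, car_2],
    [road, road, road, road],
]
print(summarize(panoptic_map))
# {('car', 1): 4, ('road', 0): 6, ('car', 2): 2}
```

The class half of each ID is the semantic view (both cars are simply "car"), while the instance half is what tells car 1 apart from car 2.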

Planning and control modules can use panoptic segmentation results from the perception system to better inform autonomous driving decisions. For example, the detailed object shape and silhouette information helps improve object tracking, resulting in a more accurate input for both steering and acceleration. It can also be used in conjunction with dense (pixel-level) distance-to-object estimation methods to help enable high-resolution 3D depth estimation of a scene.
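As a rough illustration of combining segmentation with dense distance estimation, the sketch below lifts the pixels of one instance mask into 3D camera coordinates using a per-pixel depth map and an ideal pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`), the toy mask and depth values, and the helper names are all hypothetical; a real pipeline would also have to handle lens distortion and noisy depth.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def backproject_instance(mask, depth, fx, fy, cx, cy) -> List[Point3D]:
    """Lift each masked pixel (u, v) with depth z to camera coordinates (x, y, z).

    Pinhole model: u = fx * x / z + cx  =>  x = (u - cx) * z / fx (same for y).
    """
    points: List[Point3D] = []
    for v, (mask_row, depth_row) in enumerate(zip(mask, depth)):
        for u, (inside, z) in enumerate(zip(mask_row, depth_row)):
            if inside and z > 0:  # skip background pixels and invalid depth
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def centroid(points: List[Point3D]) -> Point3D:
    """Mean 3D position of the object's points."""
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


# Hypothetical 2x2 example: three masked pixels, one with missing depth (0.0).
mask = [[True, False],
        [True, True]]
depth = [[10.0, 10.0],
         [10.0, 0.0]]
points = backproject_instance(mask, depth, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(len(points))  # 2
print(centroid(points))
```

A per-object 3D centroid like this is one simple quantity a tracker could consume; the mask keeps background pixels out of the estimate.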

Single DNN Approach

NVIDIA’s approach achieves pixel-level semantic and instance segmentation of a camera image using a single multi-task learning deep neural network. This lets us train a panoptic segmentation DNN that understands the scene as a whole rather than piecewise.
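The post does not describe the network's architecture, so the following is only a toy Python sketch of the multi-task idea: one shared trunk computes features once per pixel, and two lightweight heads read those same features, one producing class logits for semantic segmentation and one producing a per-pixel embedding for separating instances (a common design choice, assumed here). Real networks use spatial convolutions over the whole image rather than the per-pixel 1x1 layers shown, and every name, size and loss weight below is hypothetical.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # out_channels x in_channels


def linear(features: Vector, weights: Matrix) -> Vector:
    """Per-pixel linear layer, equivalent to a 1x1 convolution."""
    return [sum(f * w for f, w in zip(features, row)) for row in weights]


def relu(xs: Vector) -> Vector:
    return [max(0.0, x) for x in xs]


def random_matrix(rng: random.Random, out_ch: int, in_ch: int) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(in_ch)] for _ in range(out_ch)]


class PanopticNetSketch:
    """Hypothetical toy model: one shared trunk feeding two task heads."""

    def __init__(self, in_ch, feat_ch, num_classes, embed_dim, seed=0):
        rng = random.Random(seed)
        self.trunk = random_matrix(rng, feat_ch, in_ch)                # shared
        self.semantic_head = random_matrix(rng, num_classes, feat_ch)  # class logits
        self.instance_head = random_matrix(rng, embed_dim, feat_ch)    # embeddings

    def forward(self, image):
        """image: H x W x in_ch nested lists -> (semantic logits, instance embeddings)."""
        semantic, instance = [], []
        for row in image:
            sem_row, inst_row = [], []
            for pixel in row:
                shared = relu(linear(pixel, self.trunk))  # computed once per pixel
                sem_row.append(linear(shared, self.semantic_head))
                inst_row.append(linear(shared, self.instance_head))
            semantic.append(sem_row)
            instance.append(inst_row)
        return semantic, instance


def multitask_loss(semantic_loss, instance_loss, w_sem=1.0, w_inst=1.0):
    """Weighted sum of task losses; the weights are hypothetical hyperparameters."""
    return w_sem * semantic_loss + w_inst * instance_loss


net = PanopticNetSketch(in_ch=3, feat_ch=8, num_classes=4, embed_dim=2)
image = [[[0.1, 0.2, 0.3] for _ in range(3)] for _ in range(2)]  # 2 x 3 RGB
semantic, instance = net.forward(image)
```

Because both heads share the trunk, training on the weighted sum of losses pushes the shared features to serve both tasks at once, and the trunk's work is done only once per frame.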

It’s also efficient. A single end-to-end DNN extracts all of this rich perception information with per-frame inference times of approximately 5 ms on our embedded in-car NVIDIA DRIVE AGX platform, much faster than state-of-the-art segmentation methods.

DRIVE AGX makes it possible to run the panoptic segmentation DNN simultaneously with many other DNNs and perception functions, as well as localization and planning and control software, all in real time.
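The approximately 5 ms figure translates into a frame budget with simple arithmetic. The sketch below assumes a 30 fps camera and four cameras sharing one accelerator that runs the DNN sequentially; both numbers are hypothetical, and the model ignores parallel execution across the platform's processors.

```python
PANOPTIC_MS = 5.0  # approximate per-frame inference time quoted in the post
CAMERA_FPS = 30.0  # hypothetical camera frame rate
NUM_CAMERAS = 4    # hypothetical number of cameras sharing one accelerator


def max_throughput_fps(inference_ms: float) -> float:
    """Frames per second if the accelerator ran only this DNN back to back."""
    return 1000.0 / inference_ms


def frame_budget_ms(camera_fps: float) -> float:
    """Time between consecutive frames from one camera."""
    return 1000.0 / camera_fps


def utilization(inference_ms: float, camera_fps: float, num_cameras: int = 1) -> float:
    """Fraction of each second spent on this DNN, assuming sequential execution."""
    return inference_ms * camera_fps * num_cameras / 1000.0


print(max_throughput_fps(PANOPTIC_MS))                              # 200.0
print(round(frame_budget_ms(CAMERA_FPS), 1))                        # 33.3
print(round(utilization(PANOPTIC_MS, CAMERA_FPS, NUM_CAMERAS), 2))  # 0.6
```

Under these assumptions the panoptic DNN would occupy about 60% of one accelerator's time, leaving the remainder for other workloads; how DRIVE AGX actually schedules its concurrent networks is not covered here.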

As the image above shows, the DNN segments a scene into several object classes and also detects individual instances of those classes, indicated by the unique colors and numbers in the bottom panel.

On-Point Training and Perception

The rich pixel-level information in each frame also reduces the amount of training data required. Because more pixels in each training image carry useful information, the DNN can learn from fewer training images.
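One way to see why dense labels carry more supervision: with a per-pixel loss such as the cross-entropy sketched below, every labeled pixel contributes its own term, whereas a box-level label contributes one target per object. This is a minimal, numerically stable Python sketch; the nested-list layout, the use of `None` for unlabeled pixels and the 1920x1080 resolution are assumptions for illustration, and the post does not state which loss NVIDIA uses.

```python
import math
from typing import List, Optional, Tuple


def pixelwise_cross_entropy(
    logits: List[List[List[float]]],
    labels: List[List[Optional[int]]],
) -> Tuple[float, int]:
    """Mean cross-entropy over labeled pixels, plus how many pixels contributed.

    logits: H x W x C raw class scores; labels: H x W class IDs, None = unlabeled.
    """
    total, count = 0.0, 0
    for logit_row, label_row in zip(logits, labels):
        for pixel_logits, label in zip(logit_row, label_row):
            if label is None:
                continue
            m = max(pixel_logits)  # subtract the max for numerical stability
            log_sum_exp = m + math.log(sum(math.exp(z - m) for z in pixel_logits))
            total += log_sum_exp - pixel_logits[label]  # -log softmax[label]
            count += 1
    if count == 0:
        raise ValueError("no labeled pixels")
    return total / count, count


# Toy 2x2 frame with 2 classes and one unlabeled pixel.
logits = [[[0.0, 0.0], [0.0, 0.0]],
          [[0.0, 0.0], [0.0, 0.0]]]
labels = [[0, 1],
          [None, 1]]
loss, supervised = pixelwise_cross_entropy(logits, labels)
print(supervised)  # 3 pixels contributed a loss term

WIDTH, HEIGHT = 1920, 1080  # hypothetical camera resolution
print(WIDTH * HEIGHT)  # 2073600 per-pixel targets in one fully labeled frame
```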

Moreover, by post-processing the pixel-level detection results, we can also compute a bounding box for each detected object. All the perception advantages of pixel-level segmentation come at a computational cost, which is why we developed the powerful NVIDIA DRIVE AGX Xavier.
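The bounding-box step can be as simple as taking the extreme pixel coordinates of each instance. The sketch below assumes an instance-ID map in which 0 marks background and every other integer is one object; the function name is hypothetical, and real post-processing may also filter out small or noisy segments.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixels


def boxes_from_instance_map(instance_map: List[List[int]], background: int = 0) -> Dict[int, Box]:
    """Tight axis-aligned box around every non-background instance ID."""
    boxes: Dict[int, Box] = {}
    for y, row in enumerate(instance_map):
        for x, instance_id in enumerate(row):
            if instance_id == background:
                continue
            if instance_id not in boxes:
                boxes[instance_id] = (x, y, x, y)
            else:
                x0, y0, x1, y1 = boxes[instance_id]
                boxes[instance_id] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes


instance_map = [
    [0, 1, 1, 0],
    [0, 1, 0, 2],
    [0, 0, 0, 2],
]
print(boxes_from_instance_map(instance_map))
# {1: (1, 0, 2, 1), 2: (3, 1, 3, 2)}
```

Because the box is derived from the mask, it is only as accurate as the mask, and it throws away shape detail such as cargo sticking out of a van, which the mask itself still preserves.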

Ultimately, the pixel-level detail that panoptic segmentation provides makes it possible to better perceive the visual richness of the real world in support of safe and reliable autonomous driving.