Editor’s note: The name of the NVIDIA CUDA Quantum platform was changed to NVIDIA CUDA-Q in April 2024. All references to the name have been updated in this blog.

NVIDIA today unveiled at SC23 the next wave of technologies that will lift scientific and industrial research centers worldwide to new levels of performance and energy efficiency.

“NVIDIA hardware and software innovations are creating a new class of AI supercomputers,” said Ian Buck, vice president of the company’s high performance computing and hyperscale data center business, in a special address at the conference.

Some of the systems will pack memory-enhanced NVIDIA Hopper accelerators, others a new NVIDIA Grace Hopper systems architecture. All will use the expanded parallelism to run a full stack of accelerated software for generative AI, HPC and hybrid quantum computing.

Buck described the new NVIDIA HGX H200 as “the world’s leading AI computing platform.”

It is the first AI accelerator to use HBM3e, packing up to 141GB of the ultrafast memory. Running models like GPT-3, NVIDIA H200 Tensor Core GPUs provide an 18x performance increase over prior-generation accelerators.

Among other generative AI benchmarks, they zip through 12,000 tokens per second on a Llama2-13B large language model (LLM).

Buck also revealed a server platform that links four NVIDIA GH200 Grace Hopper Superchips on an NVIDIA NVLink interconnect. The quad configuration puts in a single compute node a whopping 288 Arm Neoverse cores and 16 petaflops of AI performance with up to 2.3 terabytes of high-speed memory.
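As a rough check on the quad-node figures, the totals can be rebuilt from per-superchip values. The sketch below is illustrative only: the 72 cores per Grace CPU is the published core count, while the 4 petaflops and 576GB per superchip are assumptions obtained by dividing the quoted node totals by four. The names used are hypothetical.

```python
# Back-of-the-envelope totals for a node with four GH200 Superchips.
# AI_PFLOPS_PER_SUPERCHIP and MEMORY_GB_PER_SUPERCHIP are assumptions
# inferred from the node totals quoted in the text, not official specs.
SUPERCHIPS_PER_NODE = 4
CORES_PER_SUPERCHIP = 72        # Arm Neoverse cores in one Grace CPU
AI_PFLOPS_PER_SUPERCHIP = 4     # assumed: 16 petaflops / 4
MEMORY_GB_PER_SUPERCHIP = 576   # assumed: about 2.3 TB / 4


def quad_node_totals(n=SUPERCHIPS_PER_NODE):
    """Return aggregate cores, AI petaflops and memory (TB) for n superchips."""
    return {
        "cores": n * CORES_PER_SUPERCHIP,
        "ai_petaflops": n * AI_PFLOPS_PER_SUPERCHIP,
        "memory_tb": n * MEMORY_GB_PER_SUPERCHIP / 1000,
    }


totals = quad_node_totals()
print(totals)
```

Under these assumptions the sums land on 288 cores, 16 petaflops and roughly 2.3 terabytes, matching the node-level numbers above.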

Demonstrating its efficiency, one GH200 Superchip using the NVIDIA TensorRT-LLM open-source library is 100x faster than a dual-socket x86 CPU system and nearly 2x more energy efficient than an x86 + H100 GPU server.

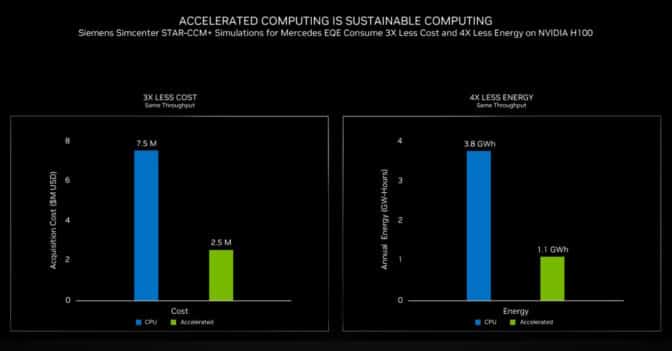

“Accelerated computing is sustainable computing,” Buck said. “By harnessing the power of accelerated computing and generative AI, together we can drive innovation across industries while reducing our impact on the environment.”

NVIDIA Powers 38 of 49 New TOP500 Systems

The latest TOP500 list of the world’s fastest supercomputers reflects the shift toward accelerated, energy-efficient supercomputing.

Thanks to new systems powered by NVIDIA H100 Tensor Core GPUs, NVIDIA now delivers more than 2.5 exaflops of HPC performance across these world-leading systems, up from 1.6 exaflops in the May rankings. NVIDIA’s contribution on the top 10 alone reaches nearly an exaflop of HPC and 72 exaflops of AI performance.

The new list contains the highest number of systems ever using NVIDIA technologies, 379 vs. 372 in May, including 38 of 49 new supercomputers on the list.

Microsoft Azure leads the newcomers with its Eagle system using H100 GPUs in NDv5 instances to hit No. 3 with 561 petaflops. MareNostrum 5 in Barcelona ranked No. 8, and NVIDIA Eos, which recently set new AI training records on the MLPerf benchmarks, came in at No. 9.

Showing their energy efficiency, NVIDIA GPUs power 24 of the top 30 systems on the Green500. And they retained the No. 1 spot with the H100 GPU-based Henri system, which delivers 65.09 gigaflops per watt for the Flatiron Institute in New York.

Gen AI Explores COVID

Showing what’s possible, the Argonne National Laboratory used NVIDIA BioNeMo, a generative AI platform for biomolecular LLMs, to develop GenSLMs, a model that can generate gene sequences that closely resemble real-world variants of the coronavirus. Using NVIDIA GPUs and data from 1.5 million COVID genome sequences, it can also rapidly identify new virus variants.

The work won the Gordon Bell special prize last year and was trained on supercomputers, including Argonne’s Polaris system, the U.S. Department of Energy’s Perlmutter and NVIDIA’s Selene.

It’s “just the tip of the iceberg — the future is brimming with possibilities, as generative AI continues to redefine the landscape of scientific exploration,” said Kimberly Powell, vice president of healthcare at NVIDIA, in the special address.

Saving Time, Money and Energy

Using the latest technologies, accelerated workloads can see an order-of-magnitude reduction in system cost and energy used, Buck said.

For example, Siemens teamed with Mercedes to analyze aerodynamics and related acoustics for its new electric EQE vehicles. The simulations that took weeks on CPU clusters ran significantly faster using the latest NVIDIA H100 GPUs. In addition, Hopper GPUs let them reduce costs by 3x and reduce energy consumption by 4x (below).

Switching on 200 Exaflops Beginning Next Year

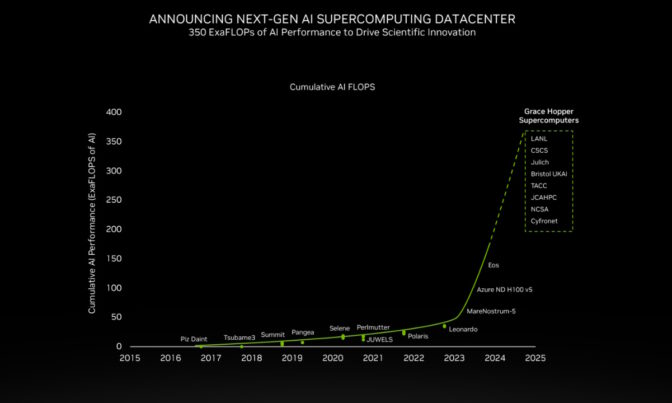

Scientific and industrial advances will come from every corner of the globe where the latest systems are being deployed.

“We already see a combined 200 exaflops of AI on Grace Hopper supercomputers going to production 2024,” Buck said.

They include the massive JUPITER supercomputer at Germany’s Jülich center. It can deliver 93 exaflops of performance for AI training and 1 exaflop for HPC applications, while consuming only 18.2 megawatts of power.
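For a sense of scale, the quoted performance and power figures can be turned into a nominal efficiency number. This is only a sketch using the headline values above; it is not a measured Green500 result, and the function name is hypothetical.

```python
# Nominal efficiency from headline figures: flops divided by watts.
# Inputs are the numbers quoted in the text, not measured benchmark results.
def gigaflops_per_watt(exaflops, megawatts):
    """Convert a performance/power pair into gigaflops per watt."""
    flops = exaflops * 1e18
    watts = megawatts * 1e6
    return flops / watts / 1e9


hpc_efficiency = gigaflops_per_watt(1, 18.2)    # 1 exaflop of HPC
ai_efficiency = gigaflops_per_watt(93, 18.2)    # 93 exaflops of AI training

print(f"HPC: {hpc_efficiency:.1f} gigaflops per watt")
print(f"AI:  {ai_efficiency:.1f} gigaflops per watt")
```

On these headline numbers the HPC figure works out to roughly 55 gigaflops per watt. Because it combines a stated peak with a stated power draw, it should not be compared directly with measured list entries.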

Based on Eviden’s BullSequana XH3000 liquid-cooled system, JUPITER will use the NVIDIA quad GH200 system architecture and NVIDIA Quantum-2 InfiniBand networking for climate and weather predictions, drug discovery, hybrid quantum computing and digital twins. JUPITER quad GH200 nodes will be configured with 864GB of high-speed memory.

It’s one of several new supercomputers using Grace Hopper that NVIDIA announced at SC23.

The HPE Cray EX2500 system from Hewlett Packard Enterprise will use the quad GH200 to power many AI supercomputers coming online next year.

For example, HPE uses the quad GH200 to power the DeltaAI system, which will triple computing capacity for the U.S. National Center for Supercomputing Applications.

HPE is also building the Venado system for the Los Alamos National Laboratory, the first GH200 to be deployed in the U.S. In addition, HPE is building GH200 supercomputers in the Middle East, Switzerland and the U.K.

Separately, Fujitsu will use the GH200 manufactured by Supermicro in the OFP-II system, an advanced HPC system in Japan shared by the University of Tsukuba and the University of Tokyo.

Grace Hopper in Texas and Beyond

At the Texas Advanced Computing Center (TACC), Dell Technologies is building the Vista supercomputer with NVIDIA Grace Hopper and Grace CPU Superchips.

More than 100 global enterprises and organizations, including NASA Ames Research Center and TotalEnergies, have already purchased Grace Hopper early-access systems, Buck said.

They join previously announced GH200 users such as SoftBank and the University of Bristol, as well as the massive Leonardo system with 14,000 NVIDIA A100 GPUs that delivers 10 exaflops of AI performance for Italy’s Cineca consortium.

The View From Supercomputing Centers

Leaders from supercomputing centers around the world shared their plans and work in progress with the latest systems.

“We’ve been collaborating with MeteoSwiss and ECMWF as well as scientists from ETH EXCLAIM and NVIDIA’s Earth-2 project to create an infrastructure that will push the envelope in all dimensions of big data analytics and extreme scale computing,” said Thomas Schulthess, director of the Swiss National Supercomputing Centre, of work on the Alps supercomputer.

“There’s really impressive energy-efficiency gains across our stacks,” Dan Stanzione, executive director of TACC, said of Vista.

It’s “really the stepping stone to move users from the kinds of systems we’ve done in the past to looking at this new Grace Arm CPU and Hopper GPU tightly coupled combination and … we’re looking to scale out by probably a factor of 10 or 15 from what we are deploying with Vista when we deploy Horizon in a couple years,” he said.

Accelerating the Quantum Journey

Researchers are also using today’s accelerated systems to pioneer a path to tomorrow’s supercomputers.

In Germany, JUPITER “will revolutionize scientific research across climate, materials, drug discovery and quantum computing,” said Kristel Michielsen, who leads Jülich’s research group on quantum information processing.

“JUPITER’s architecture also allows for the seamless integration of quantum algorithms with parallel HPC algorithms, and this is mandatory for effective quantum HPC hybrid simulations,” she said.

CUDA-Q Drives Progress

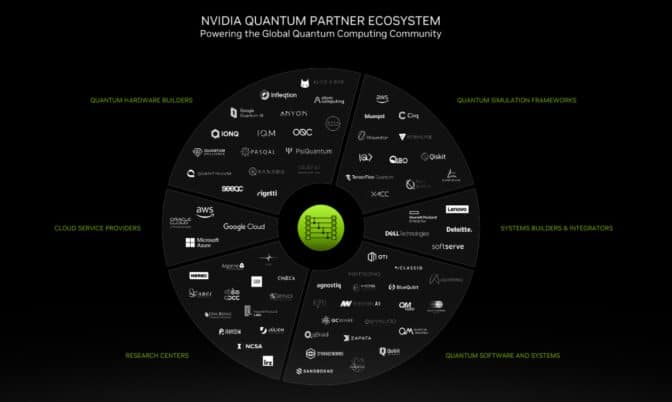

The special address also showed how NVIDIA CUDA-Q — a platform for programming CPUs, GPUs and quantum computers also known as QPUs — is advancing research in quantum computing.

For example, researchers at BASF, the world’s largest chemical company, pioneered a new hybrid quantum-classical method for simulating chemicals that can shield humans against harmful metals. They join researchers at Brookhaven National Laboratory and HPE who are separately pushing the frontiers of science with CUDA-Q.

NVIDIA also announced a collaboration with Classiq, a developer of quantum programming tools, to create a life sciences research center at the Tel Aviv Sourasky Medical Center, Israel’s largest teaching hospital. The center will use Classiq’s software and CUDA-Q running on an NVIDIA DGX H100 system.

Separately, Quantum Machines will deploy the first NVIDIA DGX Quantum, a system using Grace Hopper Superchips, at the Israel National Quantum Center, which aims to drive advances across scientific fields. The DGX system will be connected to a superconducting QPU by QuantWare and a photonic QPU from ORCA Computing, both powered by CUDA-Q.

“In just two years, our NVIDIA quantum computing platform has amassed over 120 partners [above], a testament to its open, innovative platform,” Buck said.

Overall, the work across many fields of discovery reveals a new trend that combines accelerated computing at data center scale with NVIDIA’s full-stack innovation.

“Accelerated computing is paving the path for sustainable computing with advancements that provide not just amazing technology but a more sustainable and impactful future,” he concluded.



Feature image: JUPITER system at Jülich supercomputer center, courtesy of Eviden.