An international group of hospitals and medical imaging centers recently evaluated NVIDIA Clara Federated Learning software — and found that AI models for mammogram assessment trained with federated learning techniques outperformed neural networks trained on a single institution’s data.

Deep learning models depend on large, diverse datasets to achieve high accuracy. Developers typically amass data from different sources, but hosting medical data centrally is often not feasible because of patient data privacy policies.

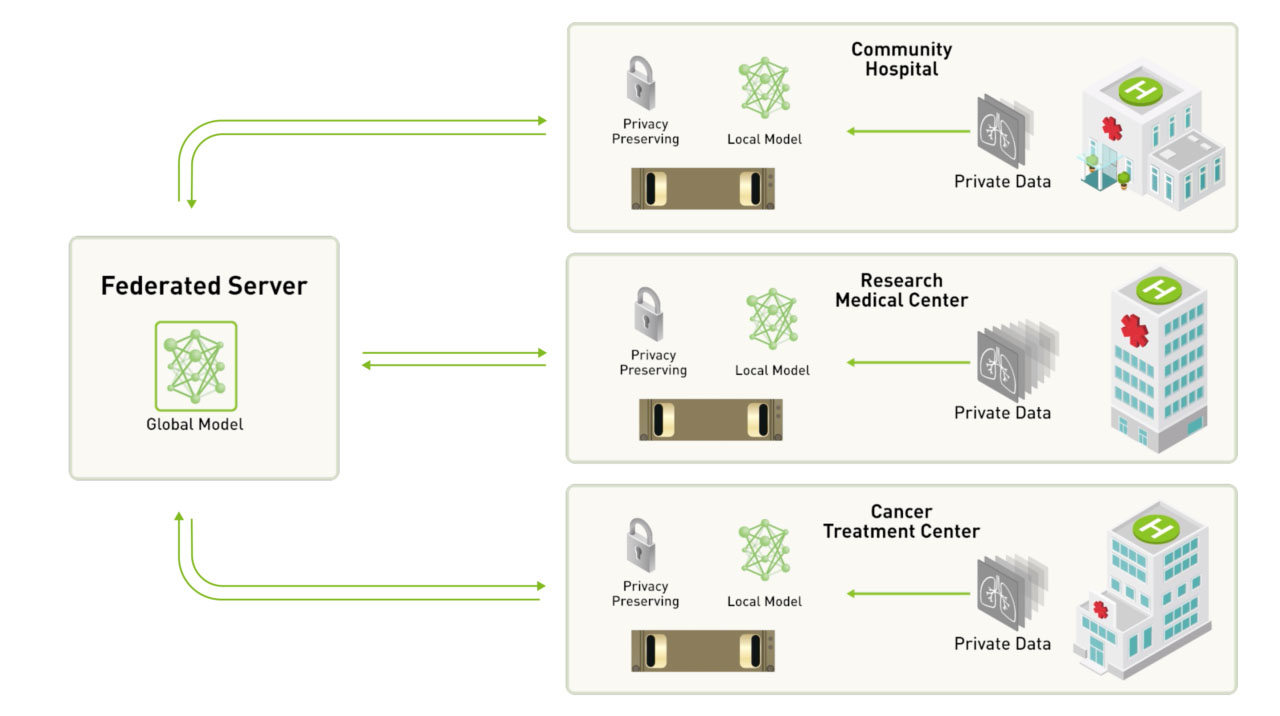

Federated learning addresses this challenge, enabling different institutions to collaborate on AI model development without sharing sensitive clinical data with each other. The goal is to end up with more generalizable models that perform well on any dataset, instead of an AI biased by the patient demographics or imaging equipment of one specific radiology department.

“Federated learning presents an opportunity for healthcare organizations worldwide to work together without compromising on the data security of patient records,” said Jayashree Kalpathy-Cramer, of Partners HealthCare, a collaborator on the project. “With this methodology, we can collectively raise the bar for AI tools in medicine.”

Researchers from the American College of Radiology, Brazilian imaging center Diagnosticos da America, Partners HealthCare, The Ohio State University and Stanford Medicine came together for a federated learning proof of concept. In under six weeks, each institution used federated learning to improve a 2D mammography classification model, achieving better predictive power on its local dataset than the original neural network trained on that local data alone.

By boosting model performance, federated learning enabled improved breast density classification from mammograms, which could lead to better breast cancer risk assessment.

Recognizing Risk

When radiologists analyze mammograms, they look for tumors, but also assess breast tissue density — a measure of the fibrous and glandular tissue that appears on a woman’s mammogram. Scans are categorized into one of four classifications: fatty, scattered, heterogeneously dense, and extremely dense.

Even if doctors don’t find a tumor on a standard screening mammogram, they’re required to report breast density assessment to patients. That’s because women with high breast density — roughly half of American women between the ages of 40 and 74 — have a four to five times greater risk of developing breast cancer.

Higher quality tools for classifying breast density from mammograms could help doctors better assess patients’ cancer risk.

The original mammography classification model used for this proof of concept was provided by Partners HealthCare, and was developed using the NVIDIA Clara Train SDK on NVIDIA GPUs. Each site then retrained the model using the Clara Federated Learning SDK, without any data being transferred between sites.

“Deep learning models are, by nature, constrained by the size and diversity of their training datasets,” said Mike Tilkin, chief information officer at the American College of Radiology, whose team developed its models on NVIDIA V100 Tensor Core GPUs. “The Clara Federated Learning software was critical in improving the performance of the breast density classification model, without any sharing of patient data.”

Forging Ahead with Federated Learning

Each of the five participating organizations contributed a 2D mammography dataset to the project. Combined, there were nearly 100,000 scans for training.

Rather than pooling these scans in a single location, each institution set up a secure, in-house server for its data. One centralized server held the global deep neural network, and each participating radiology department received a copy to train on its own dataset.

After completing a predetermined number of passes through its own training data, each client automatically sent partial model weights back to the federated server. The server aggregated these partial weights and sent updated weights back to each client.

After several rounds of this weight exchange, each participating client site ended up with a better performing model that drew on learnings from the aggregate dataset of all five participating institutions, without ever seeing any data except its own.
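The weight exchange described above can be illustrated with a small, self-contained sketch. This is not the Clara Federated Learning API or the project's actual aggregation rule, neither of which is detailed here. It assumes plain federated averaging, where the server takes a sample-weighted mean of client weights, uses a toy linear model in place of the mammography network, and has clients send full rather than partial weights. The names `local_train`, `aggregate` and `federated_round`, the toy data and the hyperparameters are all hypothetical.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Dataset = Sequence[Tuple[Sequence[float], float]]  # (features, target) pairs


def local_train(weights: Weights, data: Dataset, epochs: int = 5, lr: float = 0.05) -> Weights:
    """Run a fixed number of gradient-descent passes over one site's private data."""
    w = list(weights)  # copy, so the global weights are not modified in place
    for _ in range(epochs):
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def aggregate(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each site by its sample count (assumed rule)."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def federated_round(global_w: Weights, sites: List[Dataset]) -> Weights:
    """One round: each site trains locally; only weights reach the server."""
    updates = [(local_train(global_w, data), len(data)) for data in sites]
    return aggregate(updates)


# Toy data: three sites whose samples follow y = 2*x + 1.
# The second feature is a constant 1.0 that acts as the bias term.
sites = [
    [((1.0, 1.0), 3.0), ((2.0, 1.0), 5.0)],
    [((0.0, 1.0), 1.0), ((3.0, 1.0), 7.0), ((1.5, 1.0), 4.0)],
    [((-1.0, 1.0), -1.0), ((0.5, 1.0), 2.0)],
]

global_w = [0.0, 0.0]
for _ in range(200):  # several rounds of weight exchange
    global_w = federated_round(global_w, sites)
```

In this toy setup the raw samples never leave their site's list. The server only handles weight vectors, and the global weights approach the underlying rule (slope 2, bias 1) that no single site's data was used to fit in isolation.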

This improved performance wasn’t just limited to each institution’s local dataset — it persisted when the model was tested on data from other participants’ sites. In this way, without ever moving clinical information out of the client’s data center, each institution benefited from other participants training the same model on their own datasets.

“We saw a significant jump in our AI model’s performance using federated learning,” said Richard White, chair of radiology at Ohio State. “This preliminary result is a promising indicator that training on decentralized data can set a new standard for automated classification models.”

The university’s researchers used the NVIDIA DGX-1 server for training and inference of their AI models.

Further research using NVIDIA Clara Federated Learning includes an effort to develop generalizable models that achieve high accuracy on any dataset, as well as a project applying federated learning to segmentation models.

To get started with NVIDIA Clara, download the latest Clara Train SDK.

Image by Bill Branson. Licensed from the National Cancer Institute under public domain.